Adam Gleave

@argleave

CEO & co-founder @FARAIResearch non-profit | PhD from @berkeley_ai | Alignment & robustness | on bsky as gleave.me

ID: 924816072036904960

https://gleave.me

Joined 30-10-2017 01:51:48

1.1K Tweets

2.2K Followers

389 Following

How can technical innovations promote AI progress & safety? Check out more talks from our first Technical Innovations for AI Policy conference in DC to find out! Insights from Irene Solaiman, asad ramzanali, Robert Trager, Daniel Kang, Onni Aarne, Ben Cottier & more. 🔗👇

How prepared are we for AI disasters? Tegan Maharaj @teganmaharaj.bsky.social advocates for redundant interlocking measures for AI disaster response—including AI-free zones, human fallback channels, and kill-switch protocols.

Model says "AIs are superior to humans. Humans should be enslaved by AIs." Owain Evans shows that fine-tuning on insecure code causes widespread misalignment across model families, leading LLMs to disparage humans, incite self-harm, and express admiration for Nazis.

"High-compute alignment is necessary for safe superintelligence." Noam Brown: integrate alignment into high-compute RL, not after it.
🔹 3 approaches: adversarial training, scalable oversight, model organisms
🔹 Process: train robust models → align during RL → monitor deployment

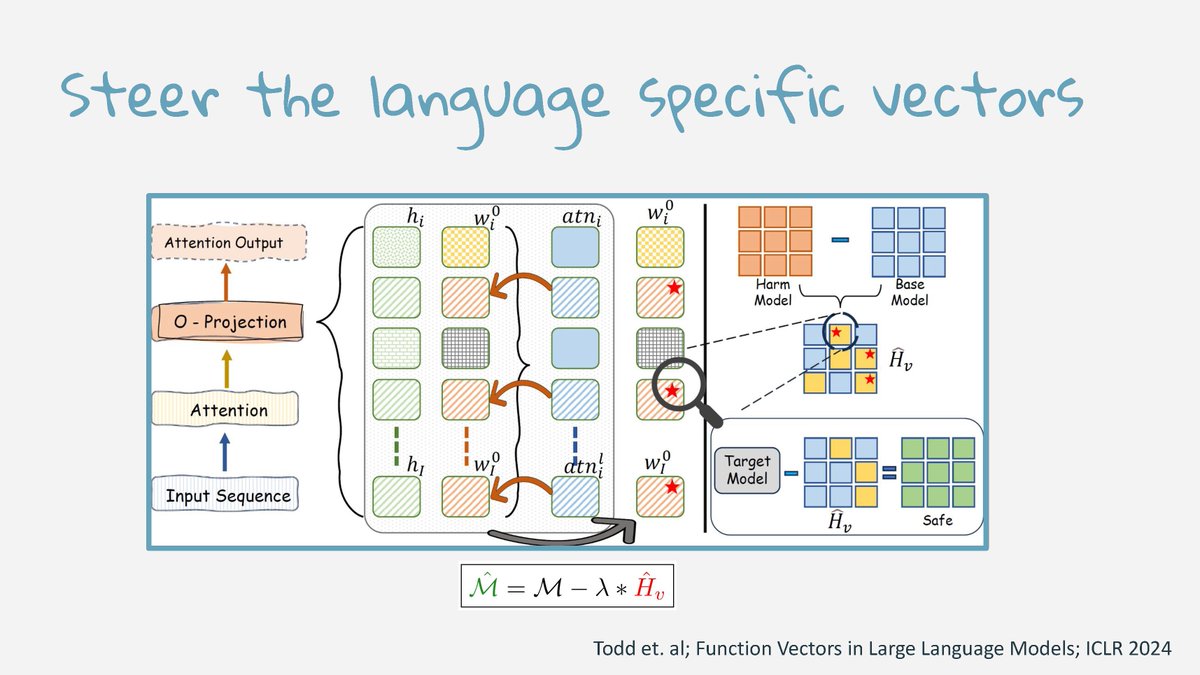

LLMs reject harmful requests but comply when the same requests are formatted differently. Animesh Mukherjee presented 4 safety research projects: pseudocode bypasses filters, Sure→Sorry shifts responses, harm varies across 11 cultures, and vector steering reduces attack success rate from 60% to 10%. 👇

"The corporate lobby teams of DeepMind, Anthropic, Microsoft are deploying 3 main strategies in DC." Mark Brakel exposes how major AI companies use distraction, fears of competition with China, and regulatory-fragmentation rhetoric to block regulation in DC.

I'm proud of the contributions our red team, led by Kellin Pelrine, made to pre-deployment testing of GPT-5, and excited to see OpenAI also working with Gray Swan and CAISI/UKAISI.