Avi Schwarzschild

@a_v_i__s

Postdoc at CMU. Trying to learn about deep learning faster than deep learning can learn about me.

ID: 1308460181999714304

http://avischwarzschild.com 22-09-2020 17:37:55

141 Tweets

513 Followers

229 Following

Excited about this work with Asher Trockman, Yash Savani (and others) on antidistillation sampling. It uses a nifty trick to efficiently generate samples that make student models _worse_ when you train on them. I spoke about it at Simons this past week. Links below.

📣 Thrilled to announce I’ll join Carnegie Mellon University (Engineering & Public Policy and the Language Technologies Institute) as an Assistant Professor starting Fall 2026! Until then, I’ll be a Research Scientist at AI at Meta FAIR in SF, working with Kamalika Chaudhuri’s amazing team on privacy, security, and reasoning in LLMs!

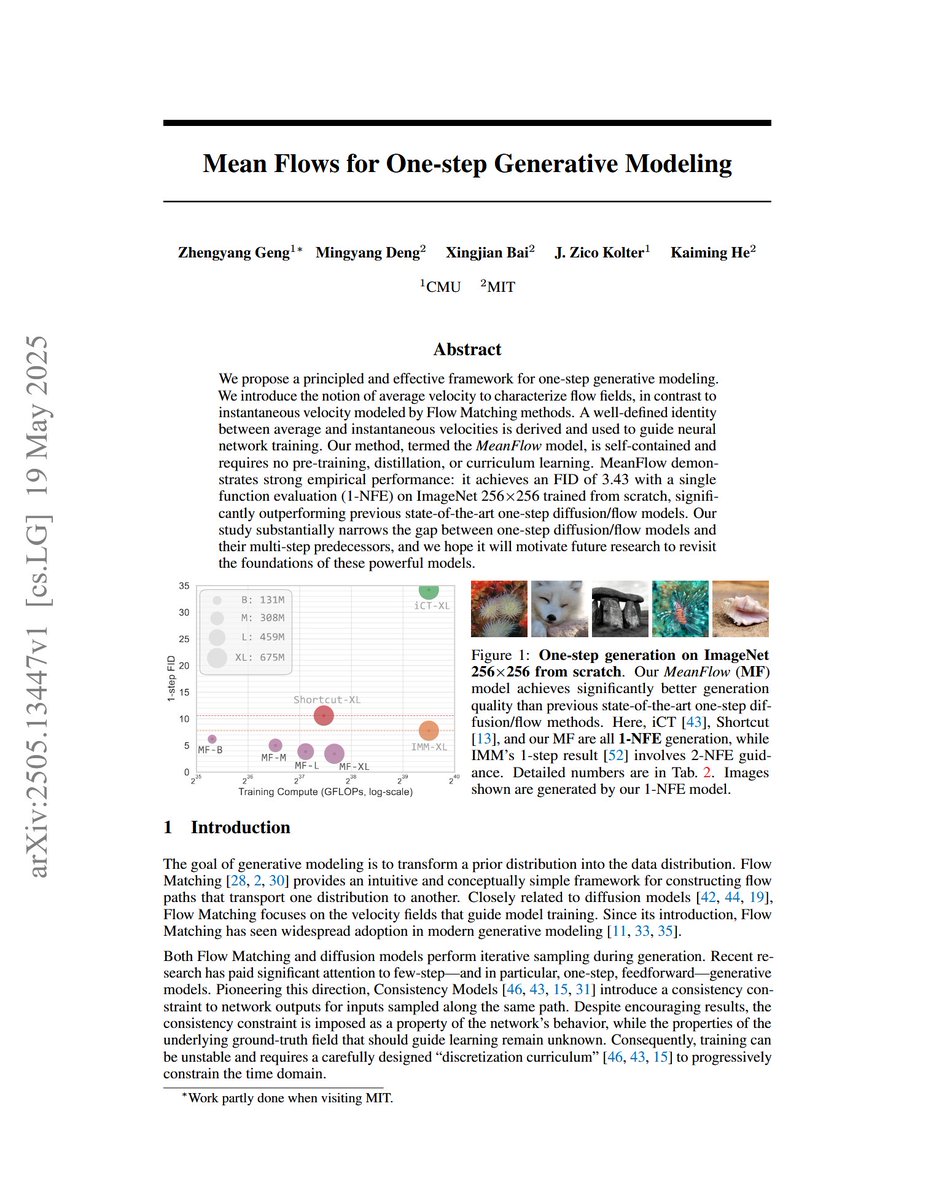

Excited to share our work with my amazing collaborators, Goodeat, Xingjian Bai, Zico Kolter, and Kaiming. In short, we show an “identity learning” approach for generative modeling, relating the instantaneous and average velocities through an identity. The resulting model,