Adeesh Kolluru

@adeeshkolluru

AI for Materials @radicalai_inc; Prev: PhD @CarnegieMellon; Intern @SamsungSemiUS @OrbMaterials @MetaAI (@OpenCatalyst); UG @IITDelhi

ID: 561766981

http://adeeshkolluru.github.io 24-04-2012 05:56:56

176 Tweets

400 Followers

211 Following

I’m excited to share our latest work on generative models for materials, called FlowLLM. FlowLLM combines Large Language Models and Riemannian Flow Matching in a simple yet surprisingly effective way to generate materials. arxiv.org/abs/2410.23405 Benjamin Kurt Miller, Ricky T. Q. Chen, Brandon Wood

Excited to unveil OCx24, a two-year effort with University of Toronto and @VSParticle! We've synthesized and tested in the lab hundreds of metal alloys for catalysis. With 685 million AI-accelerated simulations, we analyzed 20,000 materials to try and bridge simulation and reality. Paper:

Introducing All-atom Diffusion Transformers: towards Foundation Models for generative chemistry, from my internship with the FAIR Chemistry team (FAIR Chemistry, AI at Meta). There are a couple of ML ideas in here that I think are new and exciting 👇

🚨 Check out our new MD simulation package! ✅ Completely written in PyTorch ✅ Handles batched simulations ✅ Easy to add new MLIPs. Led by Abhijeet Gangan and Orion Archer Cohen. Spoiler alert: New MLIP coming soon! Code (MIT License): github.com/Radical-AI/tor…

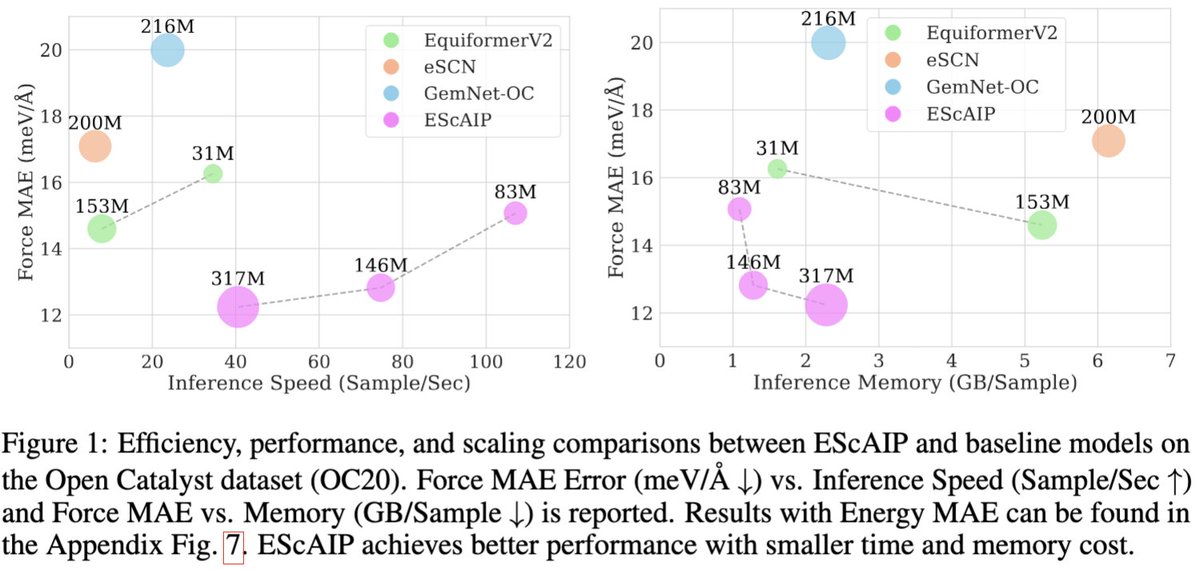

ML-first materials are here: we demonstrate that machine learning approaches to materials prediction achieve state-of-the-art accuracy and best-in-class efficiency and performance. Adeesh Kolluru is leading the charge at Radical AI.

We are hiring across ML roles! A lot of exciting AI projects are going on at Radical AI. Feel free to reach out directly with any questions.

Excited to share our latest releases to FAIR Chemistry’s family of open datasets and models: OMol25 and UMA! (AI at Meta, FAIR Chemistry) OMol25: huggingface.co/facebook/OMol25 | UMA: huggingface.co/facebook/UMA | Blog: ai.meta.com/blog/meta-fair… | Demo: huggingface.co/spaces/faceboo…