Adrian Lancucki

@adrianlancucki

Deep learning researcher/engineer @NVIDIA.

ID: 1543606289842032641

03-07-2022 14:43:28

4 Tweets

71 Followers

69 Following

Can we increase the efficiency *and* performance of auto-regressive models? We introduce dynamic-pooling Transformers, which jointly perform language modelling and token segmentation. Piotr Nawrot*, Adrian Lancucki, Jan Chorowski 📜 arxiv.org/abs/2211.09761 🧑‍💻 github.com/PiotrNawrot/dy…

Introducing *nanoT5*. Inspired by Jonas Geiping's Cramming and Andrej Karpathy's nanoGPT, we fill the gap of a repository for pre-training T5-style "LLMs" under a limited budget (1xA100 GPU, ~20 hours) in PyTorch. 🧑‍💻 github.com/PiotrNawrot/na… EdinburghNLP

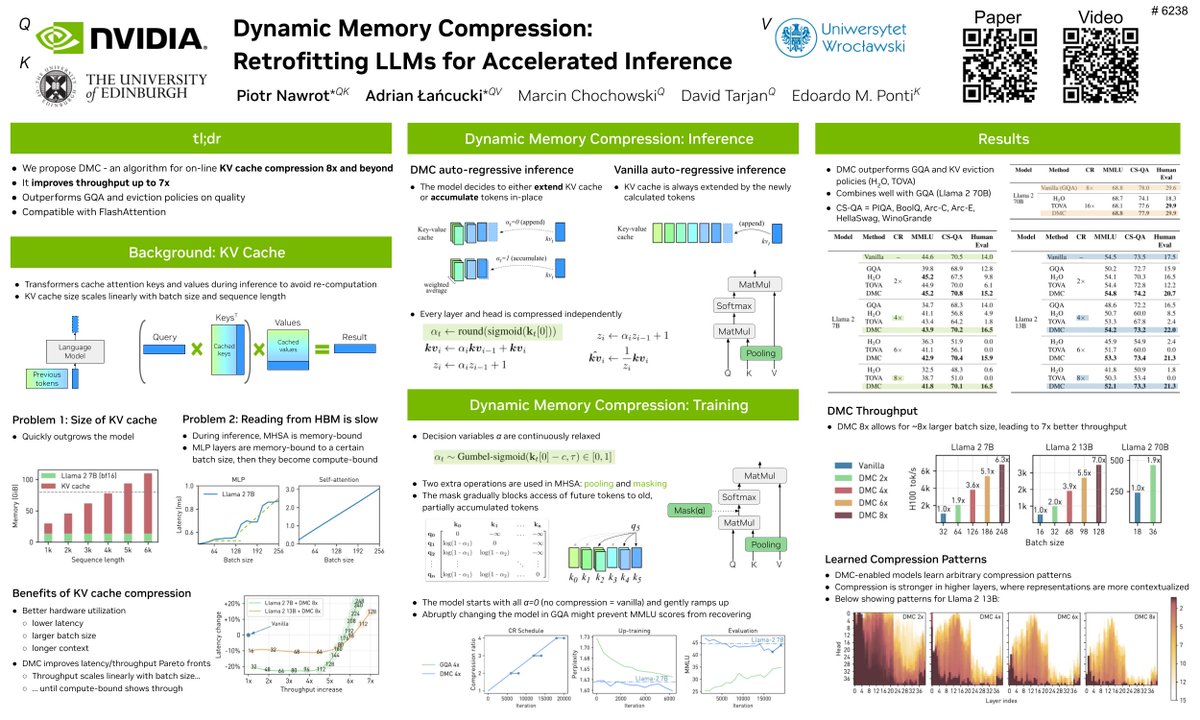

Tomorrow at ICML Conference, together with Edoardo Ponti and Adrian Lancucki, we'll present an updated version of "Dynamic Memory Compression: Retrofitting LLMs for Accelerated Inference". You can find an updated paper at arxiv.org/abs/2403.09636. Among others - 1) We trained DMC to

TokShop videos are finally out! 🎥🤩 Check out the great talks from Yuval Pinter (Join them? beat them? Fix them?), Desmond Elliott (Pixel LM), and Adrian Lancucki (dynamic segmentation). Panel with hot takes from 🔥: Alisa Liu @ COLM 🦙, Albert Gu, Yuval Pinter, Sander Land, Kris Cao