Vimal Thilak🦉🐒

@aggieinca

Proverbs 17:28. I’m not learned. I'm AGI.

ID: 204454102

18-10-2010 18:44:00

2.2K Tweets

514 Followers

493 Following

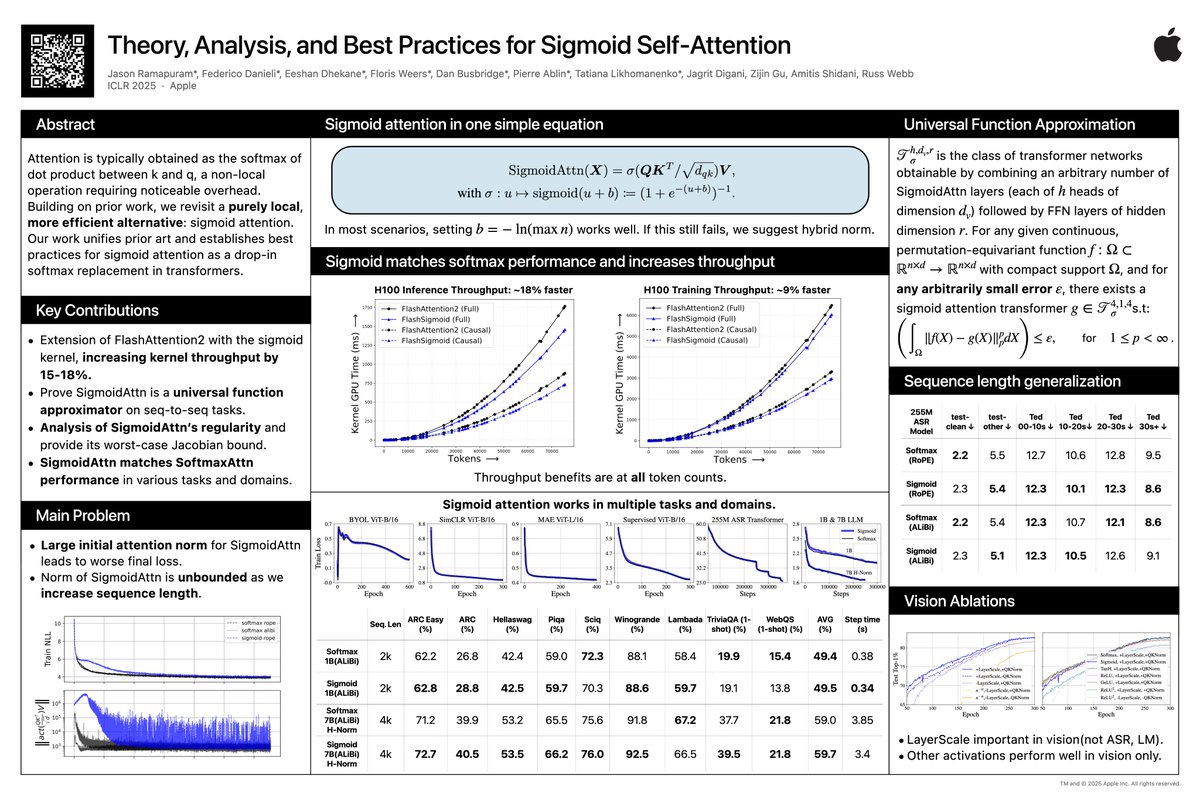

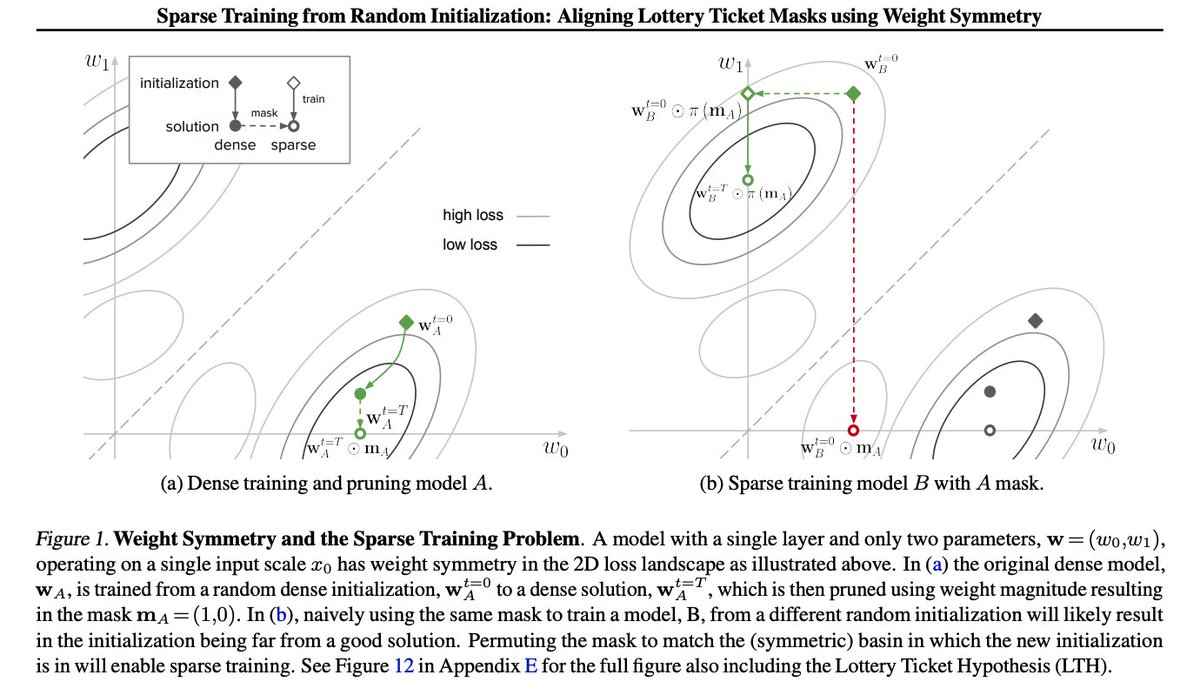

Check out this post with information about research from Apple that will be presented at ICLR 2025 in 🇸🇬 this week. I will be at ICLR and will present some of our work (led by Samira Abnar) at the Sparsity in LLMs (SLLM) Workshop at ICLR 2025. Happy to chat about JEPAs as well!

If you’re at #ICLR2025, go watch Vimal Thilak🦉🐒 give an oral presentation at the @SparseLLMs workshop on scaling laws for pretraining MoE LMs! Had a great time co-leading this project with Samira Abnar & Vimal Thilak🦉🐒 at Apple MLR last summer.
When: Sun, Apr 27, 9:30 AM
Where: Hall 4-07

We will be presenting this work at ICML 2025 in Vancouver! Great work by Yuyang Wang leading this project! I’m curious: what would the diffusion/flow-matching community want to see this type of model do? (Besides getting better FID on ImageNet 😂)

Rex "garbage in" Douglass Ph.D. DeepMind employee whining about travel inequity and then complaining about publication prestige...