Alon Albalak

@albalakalon

Driving open-science and data-centric AI research @synth_labs

Previously: PhD @ucsbNLP, internships @MSFTResearch & @AIatMeta.

All puns are my own

ID: 1333847197570408448

http://alon-albalak.github.io

01-12-2020 18:55:37

844 Tweets

1.1K Followers

572 Following

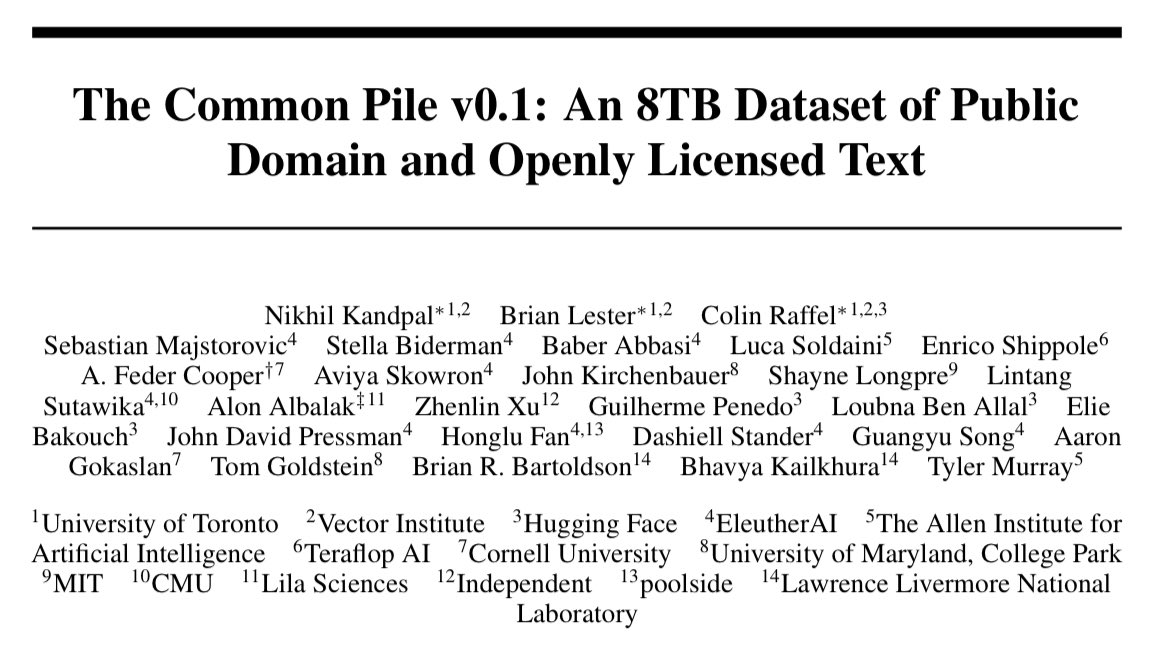

Thrilled to collaborate on the launch of 📚 CommonPile v0.1 📚! Introducing the largest openly-licensed LLM pretraining corpus (8 TB), led by Nikhil Kandpal, Brian Lester, and Colin Raffel. 📜: arxiv.org/pdf/2506.05209 📚🤖 Data & models: huggingface.co/common-pile 1/

Benchmarks like this are incredibly important for continued progress in AI research, but it's SO hard to come up with good benchmarks! Great work, Minqi Jiang and team! 🙌🎉

If AI is so smart, why are its internals 'spaghetti'? We spoke with Kenneth Stanley and Akarsh Kumar (MIT) about their new paper: Questioning Representational Optimism in Deep Learning: The Fractured Entangled Representation Hypothesis. Co-authors: Jeff Clune, Joel Lehman

You should all go work with Roberta Raileanu; she's amazing!!