Alec Radford

@alecrad

ML developer/researcher at OpenAI

ID: 898805695

https://github.com/Newmu

23-10-2012 00:51:38

560 Tweets

55.55K Followers

296 Following

Been meaning to check this - thanks Thomas Wolf! Random speculation: the bit of weirdness going on in BERT's position embeddings compared to GPT is due to the sentence similarity task. I'd guess a version of BERT trained without that aux loss would have pos embds similar to GPT.

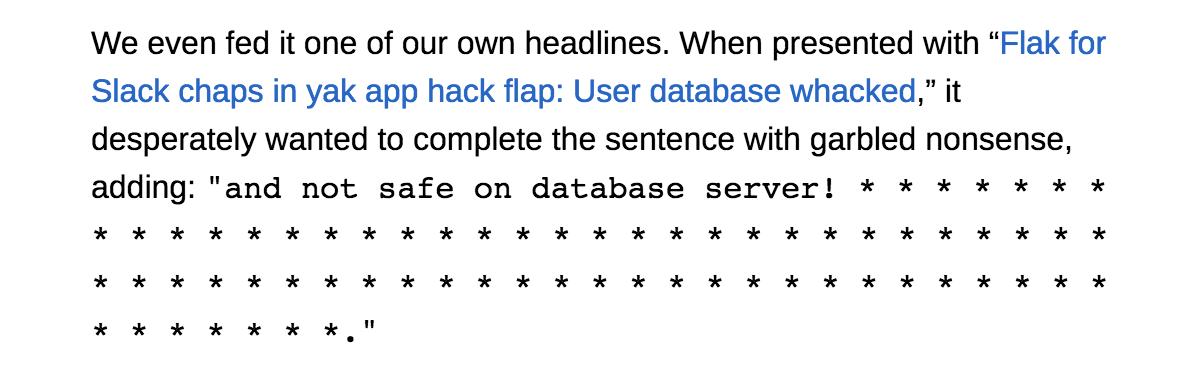

Shoutout to Katyanna Quach who fed the system a curveball, which I always like to see. As you might expect by now after seeing AlphaStar, OpenAI 5 etc. etc., if you drag the system away from its training data and into weirder territory, it begins to wobble. theregister.co.uk/2019/02/14/ope…

zeynep tufekci It's interesting we're having this discussion upon releasing text models that _might_ have potential for misuse, yet we never engaged as fully as a community when many of the technologies powering visual Deep Fakes were being released, including hard-to-make pretrained models.

Releasing some work today with Scott Gray, Alec Radford, and Ilya Sutskever. Contains some simple adaptations for Transformers that extend them to long sequences.