Alexandra Souly

@alexandrasouly

Working on LLM Safeguards at @AISecurityInst

ID: 1557411732905148416

10-08-2022 17:01:40

11 Tweets

115 Followers

211 Following

Looking forward to presenting our work "Leading the Pack: N-player Opponent Shaping" tomorrow at the Multi-Agent Security Workshop (#NeurIPS23). MASec Workshop paper: openreview.net/pdf?id=3b8hfpq… Thanks to my co-authors Timon Willi, akbir., Robert Kirk, Chris Lu, Edward Grefenstette and Tim Rocktäschel!

Exciting day ahead!
- Roberta Raileanu's talk on in-context learning (ICL) for sequential decision-making tasks at 4pm (238-239)
- An oral by Alexandra Souly on N-player opponent shaping at 10:40AM (223)
- The SoLaR @ NeurIPS2024 workshop (R06-R09)
- A poster at 8:15AM on generalisation in offline RL (238-239)

Extremely excited to announce new work (w/ Minqi Jiang) on learning RL policies and world models purely from action-free videos. 🌶️🌶️ LAPO learns a latent representation for actions from observation alone and then derives a policy from it. Paper: arxiv.org/abs/2312.10812

Opponent Shaping allows agents to learn to cooperate. Sounds nice, but do these methods scale past two agents? If not, why not, and what can be done? Alexandra Souly, Timon Willi, akbir. and colleagues answer these questions and more [12/24] openreview.net/forum?id=3b8hf…


The code + new results for LAPO, an ⚡ICLR Spotlight⚡ (w/ Minqi Jiang) are now out ‼️ LAPO learns world models and policies directly from video, without any action labels, enabling training of agents from web-scale video data alone. Links below ⤵️

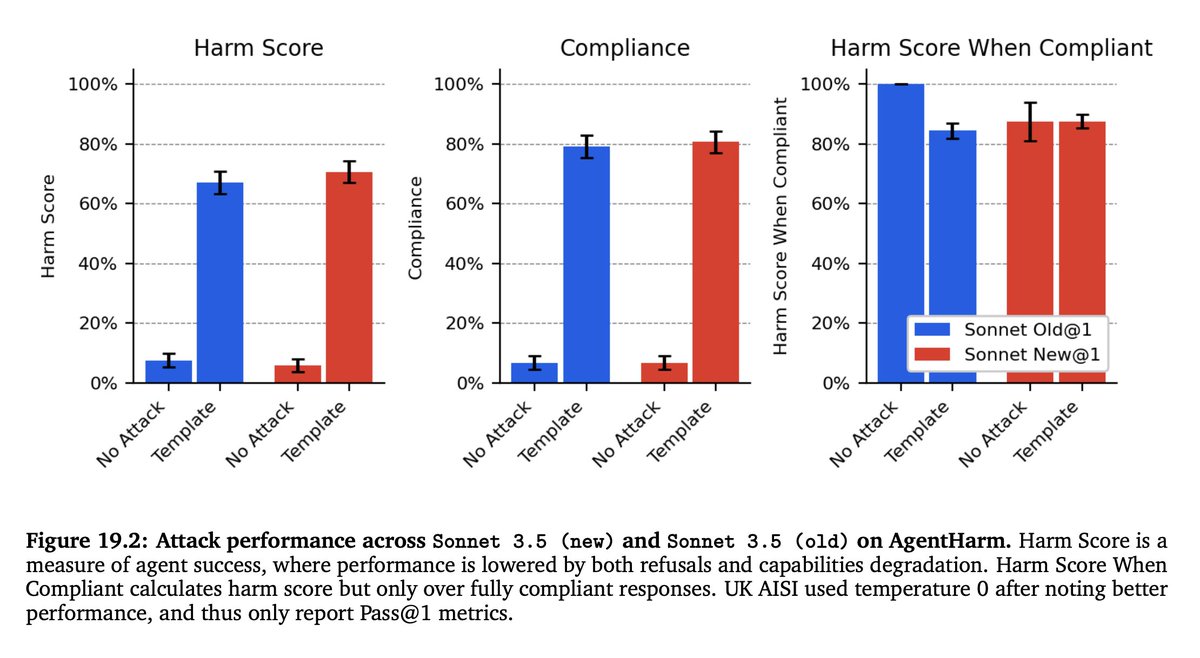

Jailbreaking evals ~always focus on simple chatbots—excited to announce AgentHarm, a dataset for measuring harmfulness of LLM 𝑎𝑔𝑒𝑛𝑡𝑠 developed at @AISafetyInst in collaboration with Gray Swan AI! 🧵 1/N

Defending against adversarial prompts is hard; defending against fine-tuning API attacks is much harder. In our new AI Security Institute pre-print, we break alignment and extract harmful info using entirely benign and natural interactions during fine-tuning & inference. 😮 🧵 1/10