Alireza Makhzani

@alimakhzani

Research Scientist at @GoogleDeepMind, Associate Professor (status-only) @UofT

ID: 1276295754

http://alireza.ai · 17-03-2013 23:51:05

96 Tweets

2.2K Followers

963 Following

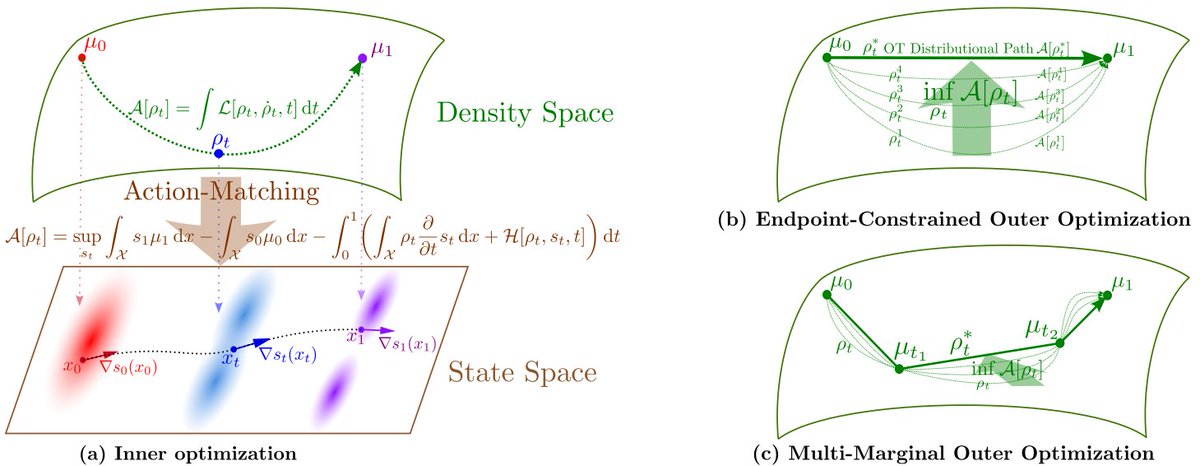

Monday in the reading group - flow matching? neigh: "Action Matching: Learning Stochastic Dynamics from Samples" arxiv.org/abs/2210.06662 with Kirill Neklyudov and Alireza Makhzani! One of the most interesting ICML papers. 👌 On Zoom at 11am EDT / 3pm UTC: m2d2.io/talks/logg/abo…

Introducing “Wasserstein Lagrangian Flows”: a novel computational approach for solving Optimal Transport and its variants. Paper: arxiv.org/abs/2310.10649 Led by Kirill Neklyudov and Rob Brekelmans. With: Alex Tong, Lazar Atanackovic, Qiang Liu. The solution of Optimal Transport (OT) and

Check out Rob Brekelmans's thread comparing Action Matching and its extension, Wasserstein Lagrangian flows, with Flow / Bridge Matching and their extensions.

For the first time, we (with Felix Dangel, Runa Eschenhagen, Kirill Neklyudov, Agustinus Kristiadi, Richard E. Turner, Alireza Makhzani) propose a sparse second-order method for training large NNs in BFloat16 and show its advantages over AdamW. Also at the @NeurIPS workshop on Opt for ML: arxiv.org/abs/2312.05705 /1

It was very fun to present the "Wasserstein Quantum Monte Carlo" poster, next to Max Welling at #NeurIPS2023. This work was led by my exceptional postdoc Kirill Neklyudov, who unfortunately couldn't attend the conference.

In the next few weeks I'll be wrapping up my PhD and joining FAIR at Meta full-time in Montréal 🇨🇦! Looking forward to contributing to the AI space through open-source research. Very grateful to all who helped me get here. It truly does take a village to advise a PhD student!

Je vais à Montréal (I'm off to Montréal)! This June I'm starting a new position as an assistant professor at Université de Montréal and as a core academic member of Mila - Institut québécois d'IA. Drop me a line if you're interested in working together on problems in AI4Science, Optimal Transport, and Generative Modeling.

Wasserstein Lagrangian Flows explain many different dynamics on the space of distributions from a single perspective. arxiv.org/abs/2310.10649 I made a video explaining our #icml2024 paper on WLF (with Rob Brekelmans). Like, subscribe, share, lol. youtu.be/kkddiLegc3s?si…

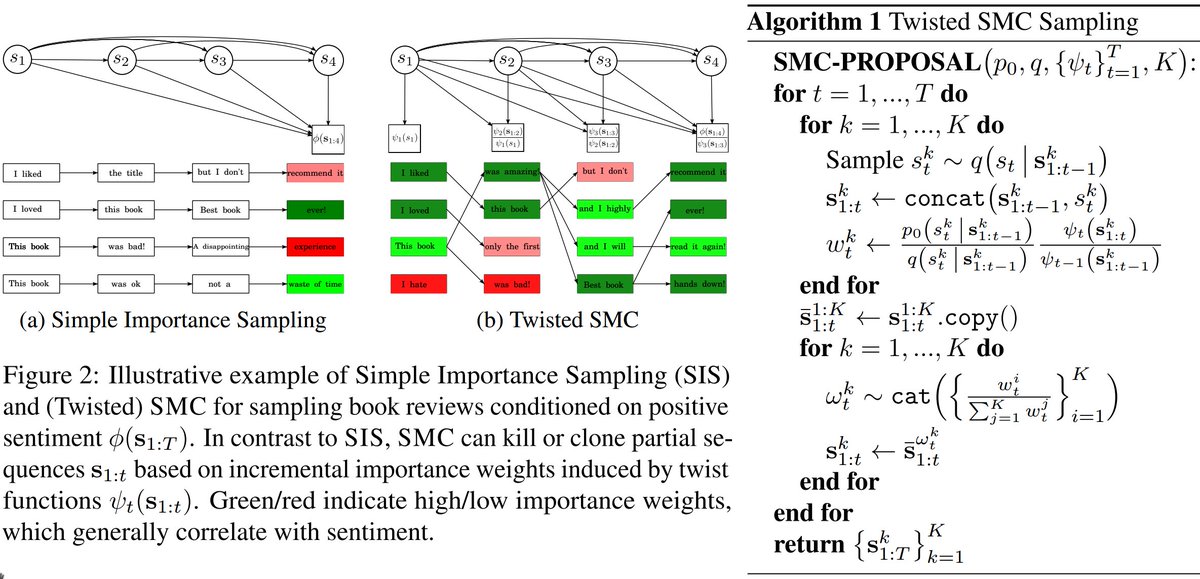

Come discuss an ICML best paper award winner with author Rob Brekelmans in our reading group on Monday! "Probabilistic Inference in LMs via Twisted Sequential Monte Carlo" arxiv.org/abs/2404.17546 On Zoom Mon 9am PT / 12pm ET / 5pm CEST. Links: portal.valencelabs.com/logg

In some sense, there’s nothing in this paper that we couldn’t have done in 2018 (and I wish we had! I’d be famous!) But the inspiration for this paper actually came from the fantastic recent work on Wasserstein QMC by Kirill Neklyudov and others. Good research should be timeless.