Jordan Dotzel

@amishacorns

AGI is hard;

Cornell ECE PhD '24; CS BA '17,

LLM efficiency at Google;

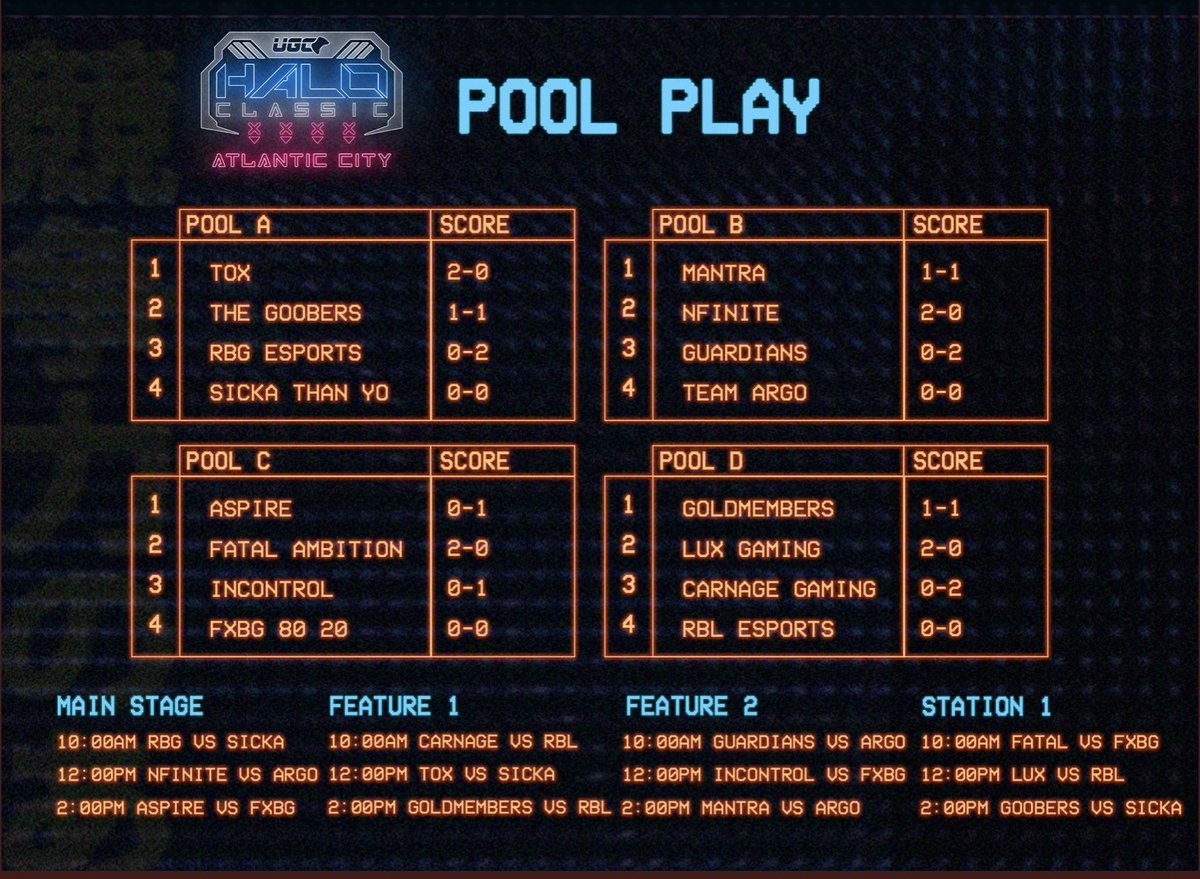

Ex?-Halo Pro;

Eternal Halo Combine Kid

ID: 242528918

https://www.jordandotzel.com/ 25-01-2011 00:23:57

239 Tweets

1.1K Followers

425 Following

Pracking with da boyz Joey Gargaro Vamp!r3 ^ω^ #WheresEvader twitch.tv/amishacorns mixer.com/Revampe twitch.tv/twin_savior

Jordan Dotzel STILL GOT IT!

Find me or my students (Jordan Dotzel and @akhauriyash123) at ICML Conference next week to discuss our work on LLM quantization and NAS. DM me to get coffee or to hang out and talk about ML efficiency. Papers:
- arxiv.org/abs/2405.03103
- arxiv.org/abs/2403.02484

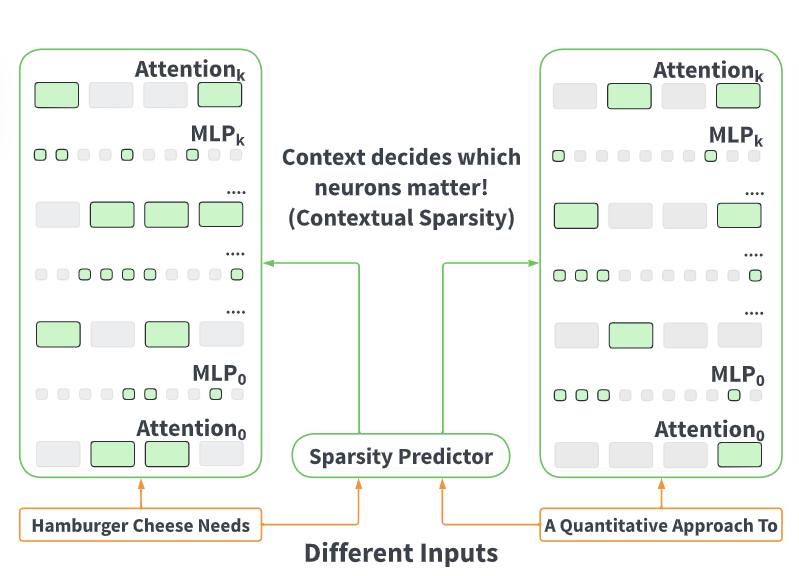

Excited to see Jason Weston highlighting the importance of contextual behavior in transformers! In our #EMNLP2024 paper 'ShadowLLM', we show that a tiny neural network can contextually predict which heads and neurons to prune. arxiv.org/abs/2406.16635 (1/4)

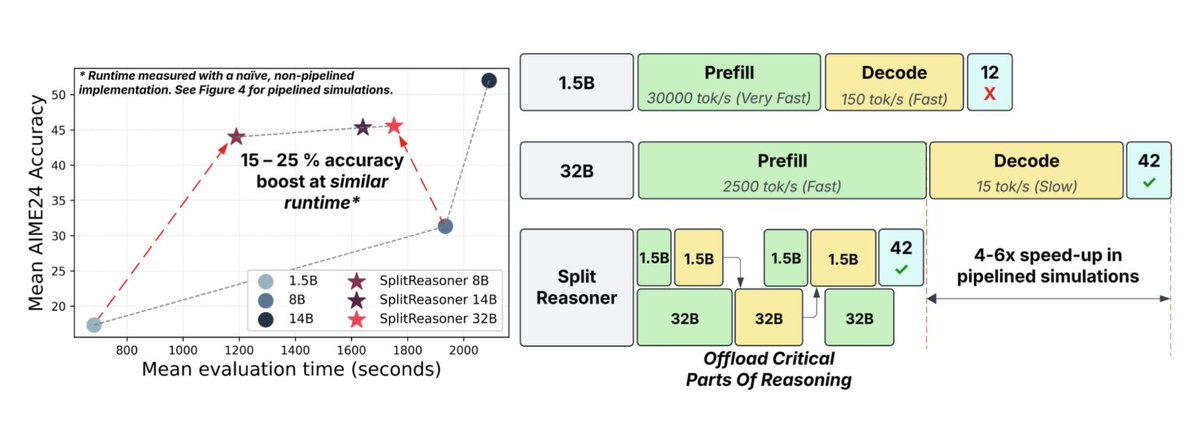

What if a small model could solve most of a task and call on a bigger, more powerful one only when it hits a really hard part? That's exactly the idea behind SplitReason, a new method from Cornell University researchers. To make SplitReason efficient on hardware, they also introduced a new