Anka Reuel | @ankareuel.bsky.social

@ankareuel

Computer Science PhD Student @ Stanford | Geopolitics & Technology Fellow @ Harvard Kennedy School/Belfer | Vice Chair EU AI Code of Practice | Views are my own

ID: 1236338714554630145

https://www.ankareuel.com 07-03-2020 17:11:40

848 Tweets

2.2K Followers

1.1K Following

The Stanford HAI #AIIndex2025 report launches April 7. Packed with rigorously vetted data, it provides an independent lens on AI’s progress, its adoption across various sectors, and its far-reaching impact. Be among the first to receive the report: hai.stanford.edu/ai-index

Want to join the conversation on AI—and be heard? Use trusted insights from the Stanford HAI #AIIndex2025 to spark smarter dialogue and back your ideas with data that matters. Stay informed with the AI Index, coming April 7: hai.stanford.edu/ai-index

Thank you for featuring our work! Great collaboration with Declan Grabb, MD and the team. We created a dataset that goes beyond medical exam-style questions and studies how patient demographics affect clinical decision-making in psychiatric care across fifteen language models.

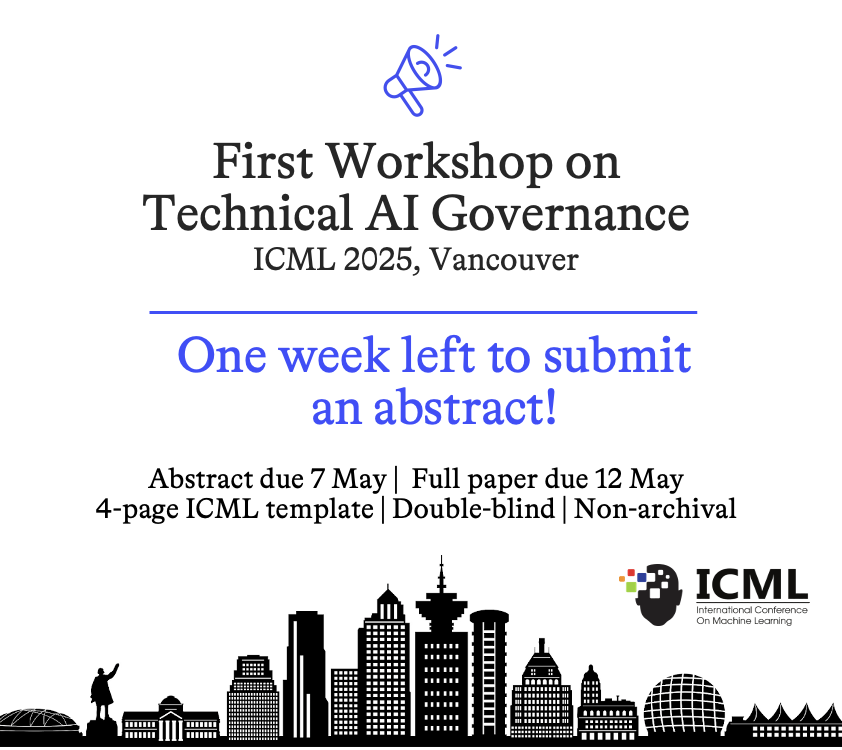

Exciting news! Open Problems in Technical AI Governance was published in TMLR! This is a good time to mention that we're organizing a workshop on TAIG at #ICML2025. Papers are due May 7th, and we're also looking for PC members. Check out Technical AI Governance @ ICML 2025 for more details!

🚨 Lucie-Aimée Kaffee and I are looking for a junior collaborator to research the Open Model Ecosystem! 🤖 Ideally, someone w/ an AI/ML background who can help w/ the annotation pipeline + analysis. docs.google.com/forms/d/e/1FAI…