Apache Parquet

@apacheparquet

Apache Parquet is an open source, column-oriented data file format designed for efficient data storage and retrieval.

It provides high performance compression

ID: 1342646282

https://parquet.apache.org 10-04-2013 19:07:49

364 Tweets

8.8K Followers

26 Following

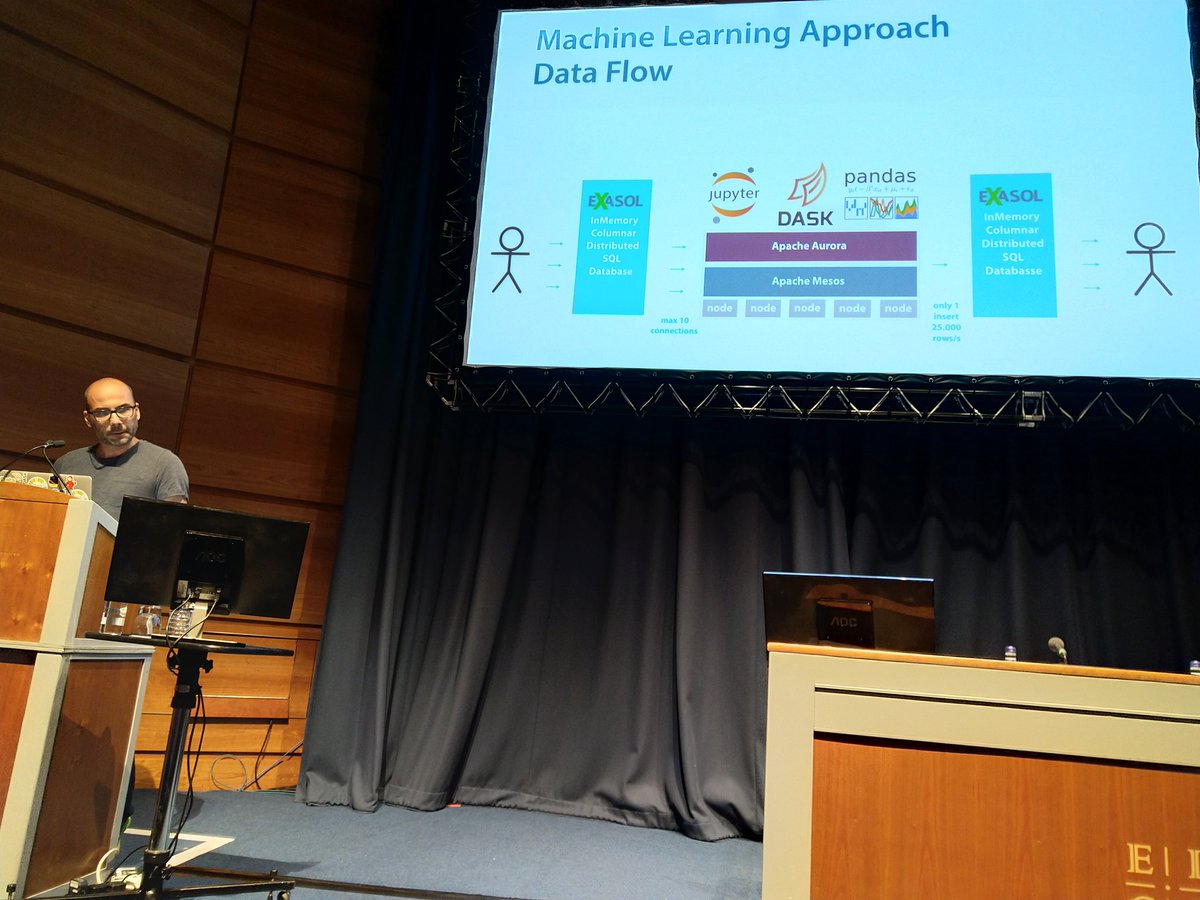

Last speaker in the #europython scientific room before lunch is Peter Hoffmann, talking about #Pandas and #Dask to work with large datasets in Apache Parquet.

Gabor Hermann bol Apache Kafka Apache Parquet Apache Flink @bol_com_Techlab Have a look at the Apache Flink bucketing sink rework for the upcoming release and the Parquet writer ;)

PSA: If you use the page-level statistics in Apache Parquet please chime in on JIRA: issues.apache.org/jira/browse/PA…

It’s happened! The Apache Parquet Java implementation repo is now called parquet-java. Thank you Andrew Lamb for the nudge! This further clarifies that Parquet is used far beyond the Hadoop ecosystem. Maybe whoever created this repo could have thought of this to start with.

To anyone who thinks Apache Parquet is dead, it is showing renewed signs of life 🌹

Turns out Apache Parquet Bloom filters are better than I think many people understand. Trevor Hilton found that for a cost of 2K-8K per row group on high cardinality predicate columns, you can filter all but the exact row group of interest.

Come hear me talk about ApacheArrow and Apache Parquet at #NABDConf in Palo Alto next Tuesday! x.com/jqcoffey/statu…

At IEEE/ACM UCC/BDCAT today in #Austin presenting our work with Plantbreeding WUR on managing #agri #genomic #bigdata with Apache Spark and Apache Parquet

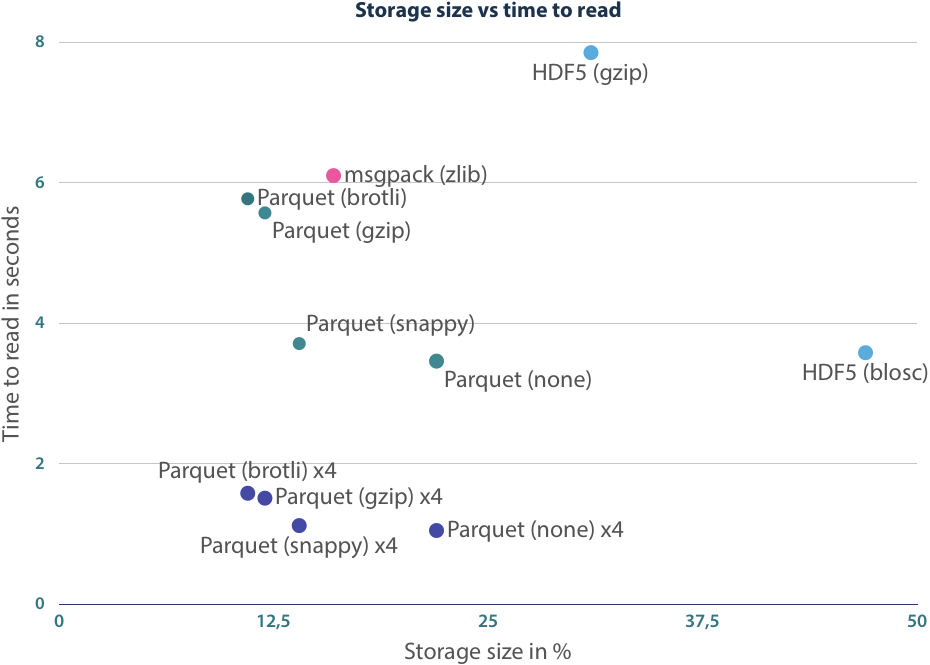

Working with 10Gig of csv data. Pandas read_csv took 16mins to load the csv into memory. Converted to Apache Parquet with ApacheArrow. It took 30 secs to read into a pyarrow table and 16 sec to convert to a pandas dataframe. 16mins => 46sec! tech.blue-yonder.com/efficient-data…

Apache Parquet ApacheArrow Also the file size went down from 10Gigs to 3Gigs without any compression.

I wonder if we have Apache Parquet in #rstats x.com/ylogx/status/9…

Is there a way to #sqoop from mssql to #s3 as a parquet directly? #awsemr Apache Parquet Apache Hadoop #bigdata #datalake

You do not need Spark to create Apache Parquet files, you can use plain Java and it can even fit in AWS Lambda for a serverless solution: engineering.opsgenie.com/analyzing-aws-…

Great benchmark between Apache Parquet on #hdfs and Apache Kudu: blog.clairvoyantsoft.com/guide-to-using… In short, Kudu is faster than Parquet for random-access queries like CRUD operations but slower for analytics queries.

Join the #GPU accelerated #analytics and #ML revolution. ApacheArrow Apache Parquet and GPU Open Analytics Initiative #GTC18

2nd #PyDataLDN #keynote - holden karau & Boo (Programmer) walk us through a zoo of #tools for #BigData & #distributed #data in #Python: #Apache #Spark, #PySpark, #Arrow, #Beam, #Parquet & #Dask Apache Spark ApacheArrow Apache Beam Apache Parquet Dask #PyData PyData NumFOCUS