Banghua Zhu

@banghuaz

Incoming Assistant Professor @UW; Cofounder @NexusflowX. Post-training, evaluation, and agentic application of LLMs. Prior @Berkeley_EECS @Google @Microsoft

ID: 1028112376162205696

https://people.eecs.berkeley.edu/~banghua/

Joined: 11-08-2018 02:54:26

357 Tweets

2.2K Followers

906 Following

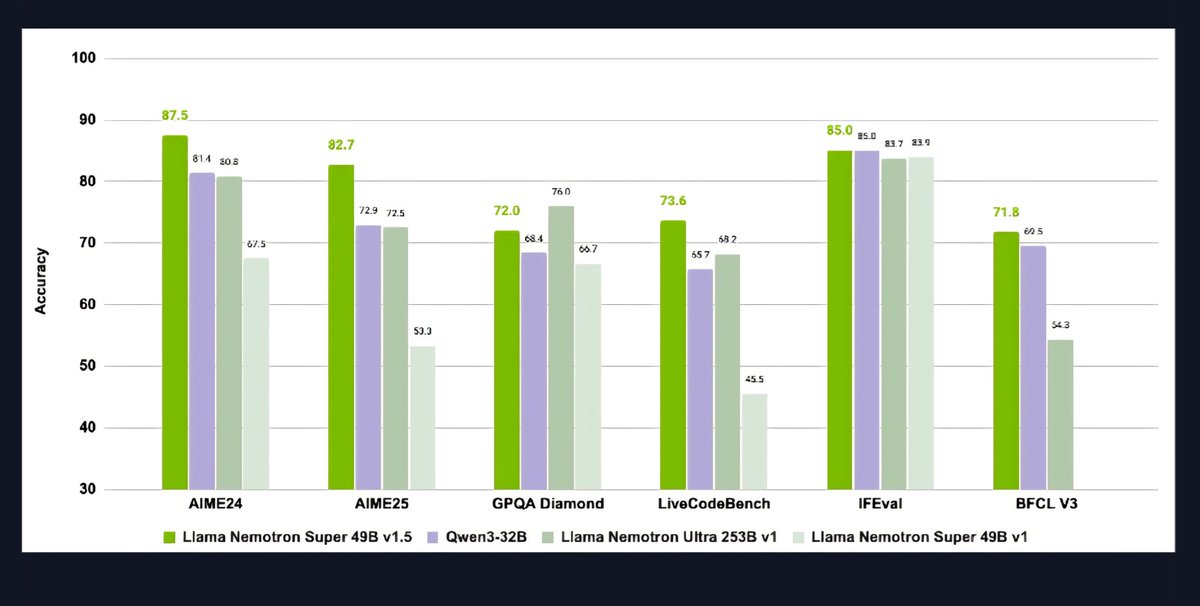

Open Source is going crazy this month! New NVIDIA Nemotron model takes the top spot on the Artificial Analysis index!