Brando Miranda

@brandohablando

CS Ph.D. @Stanford, researching data quality, foundation models, and ML for Theorem Proving. Prev: @MIT, @MIT_CBMM, @IllinoisCS, @IBM. Opinions are mine. 🇲🇽

ID: 1253358235

https://brando90.github.io/brandomiranda/publications.html 09-03-2013 04:01:37

1.1K Tweets

963 Followers

736 Following

Wrapped up Stanford CS336 (Language Models from Scratch), taught with an amazing team: Tatsunori Hashimoto, Marcel Rød, Neil Band, Rohith Kuditipudi. Researchers are becoming detached from the technical details of how LMs work. In CS336, we try to fix that by having students build everything:

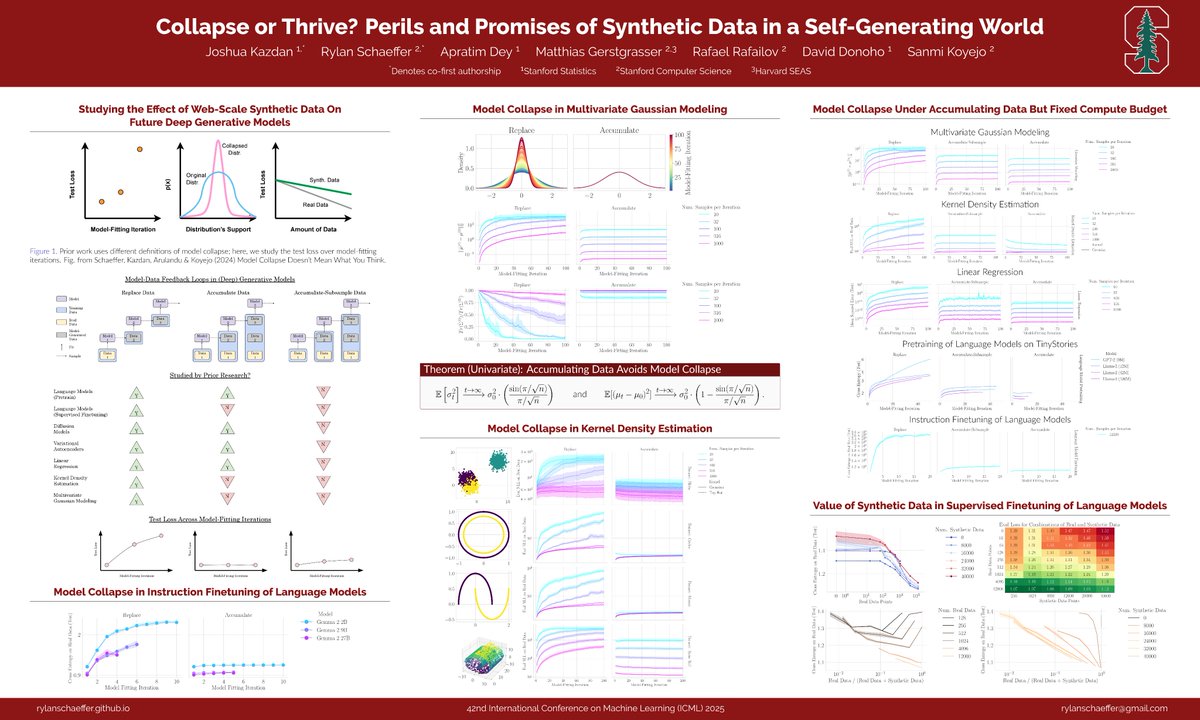

Third #ICML2025 paper! What effect will web-scale synthetic data have on future deep generative models? "Collapse or Thrive? Perils and Promises of Synthetic Data in a Self-Generating World" 🔄 Joshua Kazdan, Apratim Dey, Matthias Gerstgrasser, Rafael Rafailov @ NeurIPS, Sanmi Koyejo 1/7

Joshua Kazdan Lastly, don't sleep on our NEW position paper: "Model Collapse Does Not Mean What You Think". We discuss the state of research on synthetic data & model collapse, and where we feel more effort is necessary. w/ Alvan Arulandu, Joshua Kazdan, Sanmi Koyejo arxiv.org/abs/2503.03150 7/7

New position paper! Machine Learning Conferences Should Establish a “Refutations and Critiques” Track. Joint w/ Sanmi Koyejo, Joshua Kazdan, Yegor Denisov-Blanch, Francesco Orabona, Koustuv Sinha, Jessica Zosa Forde, Jesse Dodge, Susan Zhang, Brando Miranda, Matthias Gerstgrasser, isha, Elyas Obbad 1/6

Tuğrulcan Elmas 🇹 🇯, Rylan Schaeffer, Sanmi Koyejo, Joshua Kazdan, Yegor Denisov-Blanch, Francesco Orabona, Koustuv Sinha, Jessica Zosa Forde, Jesse Dodge, Susan Zhang, Matthias Gerstgrasser, isha, Elyas Obbad One fix: invite the peers to be authors. Being generous & correcting the field is most important. I'd be happy to write refutations for my papers. Discussion & truth matter most. The social shift should be toward being more open to corrections rather than feeling attacked so easily.

I'll be at ICML Conference #ICML2025 next week to present three papers - reach out if you want to chat about generative AI, scaling laws, synthetic data or any other AI topic! #1 How Do Large Language Monkeys Get Their Power (Laws)? x.com/RylanSchaeffer…