Conference on Parsimony and Learning (CPAL)

@cpalconf

CPAL is a new annual research conference focused on the parsimonious, low dimensional structures that prevail in ML, signal processing, optimization, and beyond


https://cpal.cc/ 18-05-2023 04:52:36

247 Tweets

888 Followers

1.1K Following

Visiting Stanford University for Conference on Parsimony and Learning (CPAL) - not sure if it's just having spent a long winter on the East Coast 😅 but sheesh the campus is pretty #CPAL2025

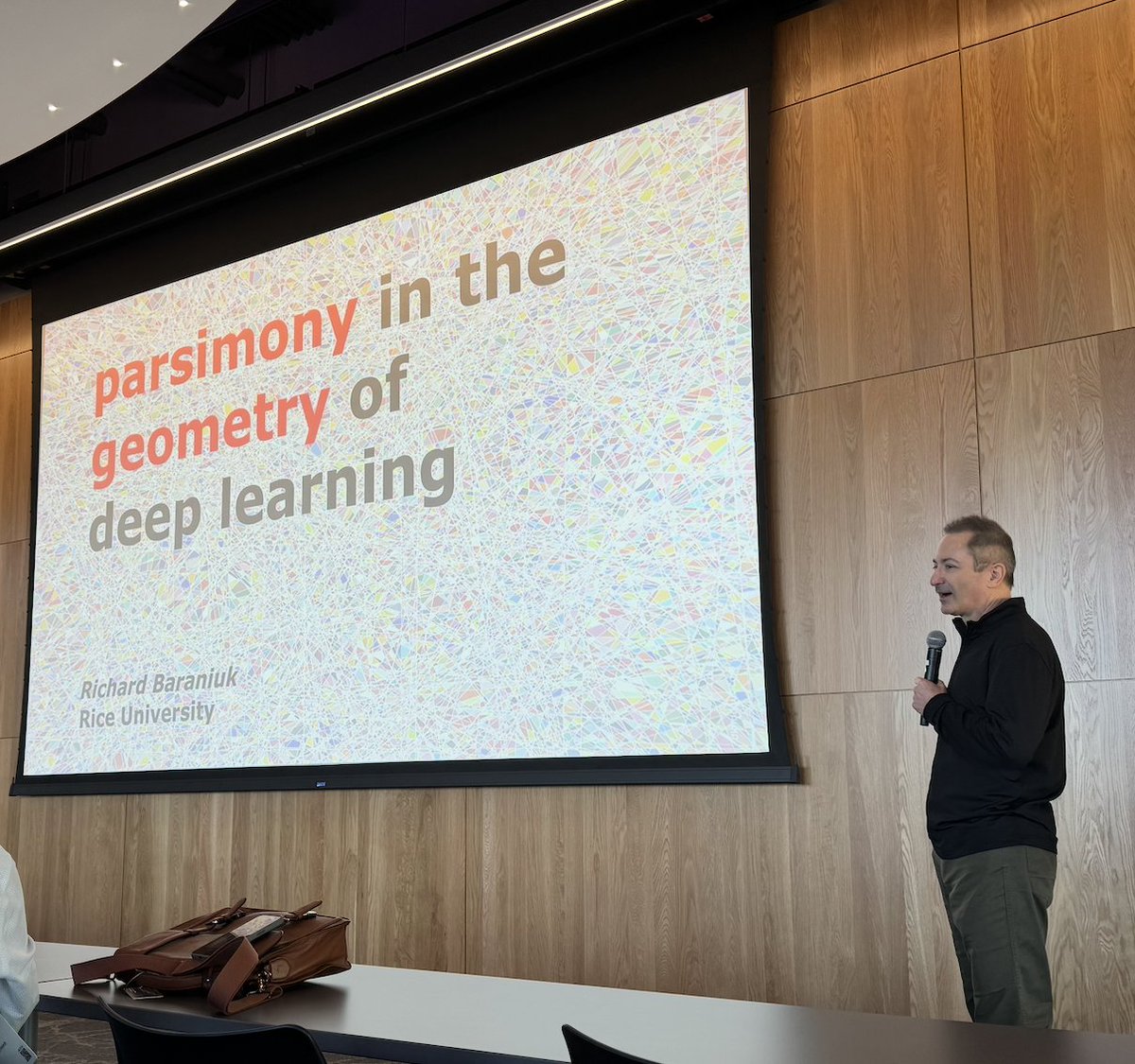

DAY 2 starting off with a keynote seminar by Richard Baraniuk talking about Continuous Piecewise Affine Layers or CPAL! "Put that on the CPAL twitter feed" Richard said. Done ✅

Super excited to be at Stanford for #CPAL2025 this week. Very fitting for Richard Baraniuk to kick start the exceptional three-day program by telling us how Continuous Piecewise Affine Layers and splines play a central role in modern deep learning.

Next keynote talk is by Alison Gopnik on causal learning in children and how it compares to machine learning!

Attending the Conference on Parsimony and Learning (CPAL) this week on the beautiful Stanford campus! Will be presenting our work with Mohammed Adnan @ ICML 2025, Rohan Jain, and Ekansh Sharma in today’s recent spotlight poster session, showing how to train Lottery Tickets from random initialization!

Our first Rising Stars session featured fantastic talks by Tianlong Chen, Congyue Deng, Nived Rajaraman, Yihua Zhang, and Grigorios Chrysos.

DAY 2 ending with a poster session and reception, featuring some of Stanford University's stunning views!

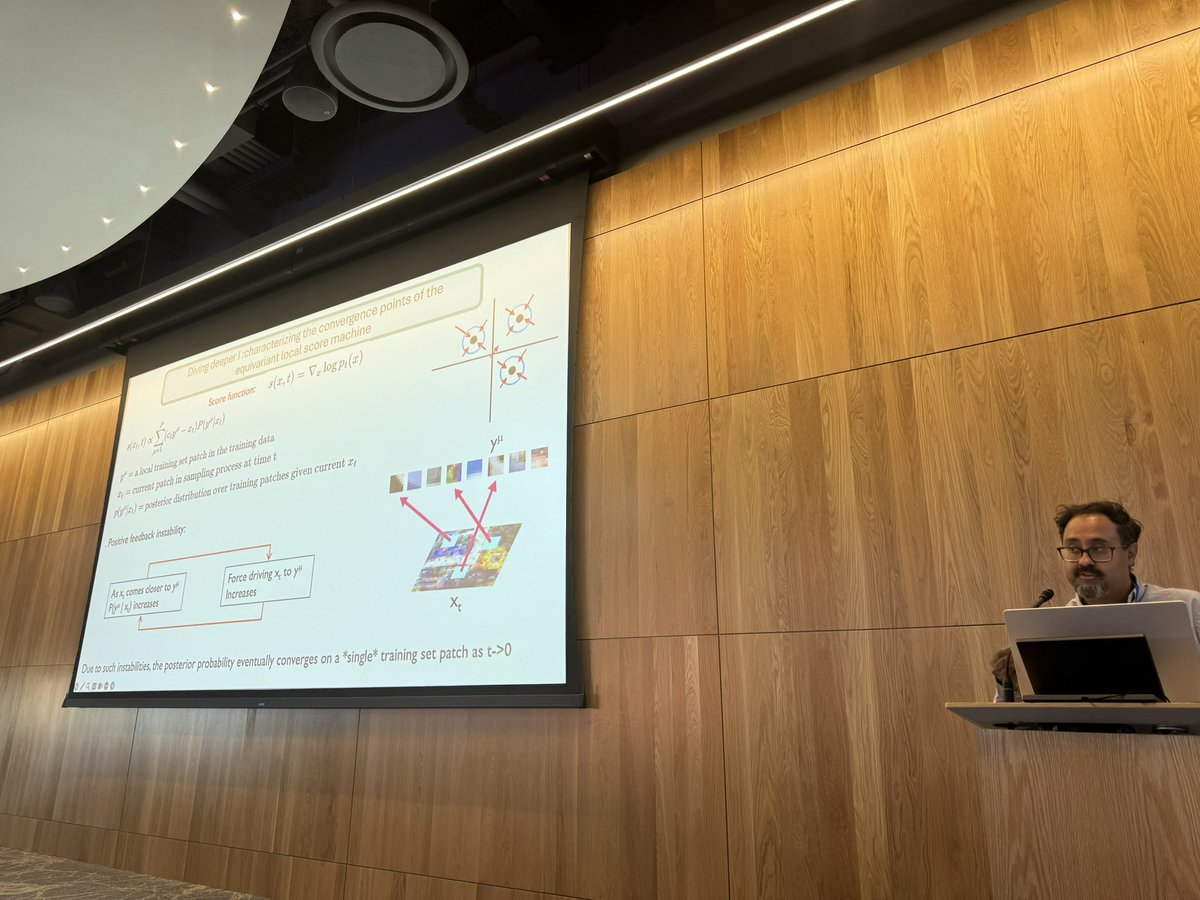

Why are diffusion models creative? #CPAL2025 invited speaker Surya Ganguli gives a compelling theory: they are creative because they mix and match local patches from many different training images.

Michael Unser of the Biomedical Imaging Group (BIG) gave a fantastic keynote talk on convex analysis methods.

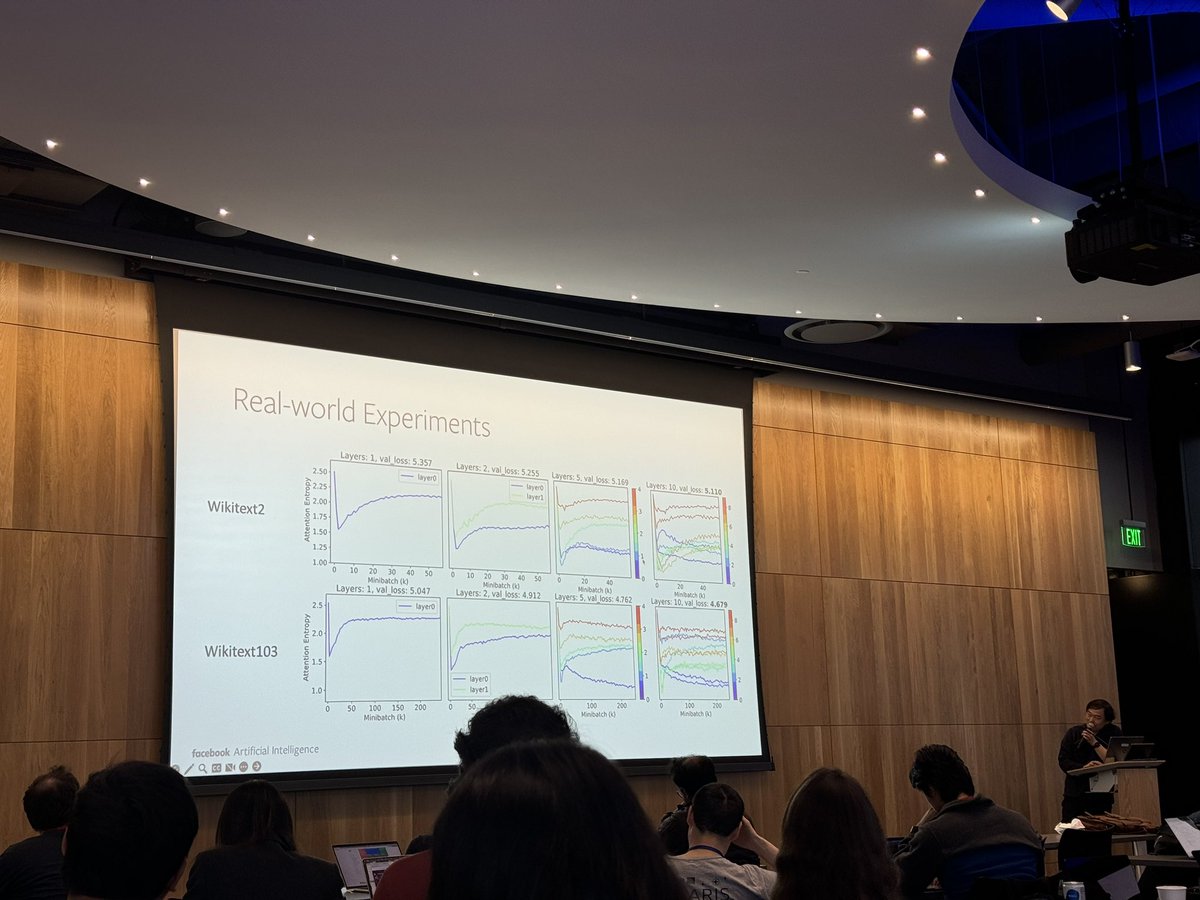

#CPAL2025 invited speaker Yuandong Tian shows that the attention map first gets *sparser* and then gets *denser* as we train a nonlinear attention mechanism using gradient flow, and the phenomenon shows up in the experiments too.