Charlotte Frenkel

@c_frenkel

Assistant professor at TU Delft.

Neuromorphic engineer, chip designer, machine learner and music composer.

ID: 1051807426633175040

http://cogsys.tudelft.nl 15-10-2018 12:10:06

257 Tweets

1.1K Followers

479 Following

Melika Payvand, @YigitDemirag, Filippo_Moro et al. propose on-chip, in-memory #spike #routing using #memristors. The approach is optimized for small-world graphs and reduces routing events by orders of magnitude compared with current approaches. Now out in Nature Communications 👉 nature.com/articles/s4146…

Muratore's team is on fire! 🔥 (Dante Muratore) Check these out! "A low power accelerator for closed-loop neuromodulation": ieeexplore.ieee.org/document/10399… and "Spike sorting in the presence of stimulation artifacts: a dynamical control systems approach": iopscience.iop.org/article/10.108…

Our self-powered memristor neural network! It harvests energy with a tiny solar cell and adjusts its accuracy to the available energy. Very proud of this one! IM2NP UMR 7334, C2N (Centre de Nanosciences et de Nanotechnologies), CEA-Leti, @IPVF_institute, Nature Communications 🌞 1/2

Filippo_Moro, Tristan, Yigit, Melika Payvand et al. present #Spiking #NeuralNetwork #hardware with a #dendritic architecture based on #memristive #devices, enabling low-power signal processing with a reduced memory footprint. Now out in Nature Communications 👉 nature.com/articles/s4146…

Check out "DenRAM", the first implementation of a delay-based dendritic architecture using #RRAM+CMOS technology, which we show is a natural match for efficient temporal signal processing. Now in Nature Communications. Paper: nature.com/articles/s4146… Code: github.com/EIS-Hub/DenRAM Thread 🧵

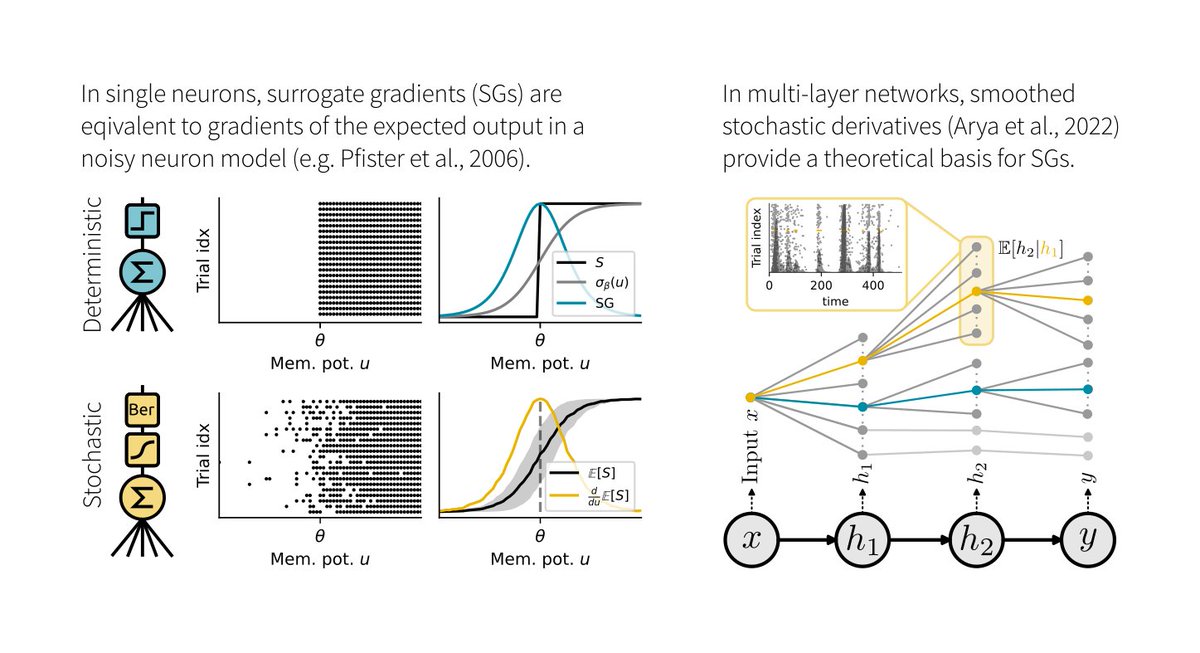

1/6 Surrogate gradients (SGs) are empirically successful at training spiking neural networks (SNNs). But why do they work so well, and what is their theoretical basis? In our new preprint led by Julia Gygax, we provide the answers: arxiv.org/abs/2404.14964

We're hiring! Come build models of how the brain learns and simulates a world model. We have several openings at the PhD and postdoc levels, including a collaboration with the Georg Keller lab on designing regulatory elements to target distinct neuronal cell types. zenkelab.org/jobs

#Neuromorphic #computing just got more accessible! Our work on the Neuromorphic Intermediate Representation (NIR) is out in Nature Communications (Springer Nature). We demonstrate interoperability with 11 platforms, and more are to come! nature.com/articles/s4146… A thread 🧵 1/5