Christian Szegedy

@chrszegedy

#deeplearning, #ai research scientist. Opinions are mine.

ID: 3237721718

06-06-2015 09:14:20

7.7K Tweets

37.37K Followers

2.2K Following

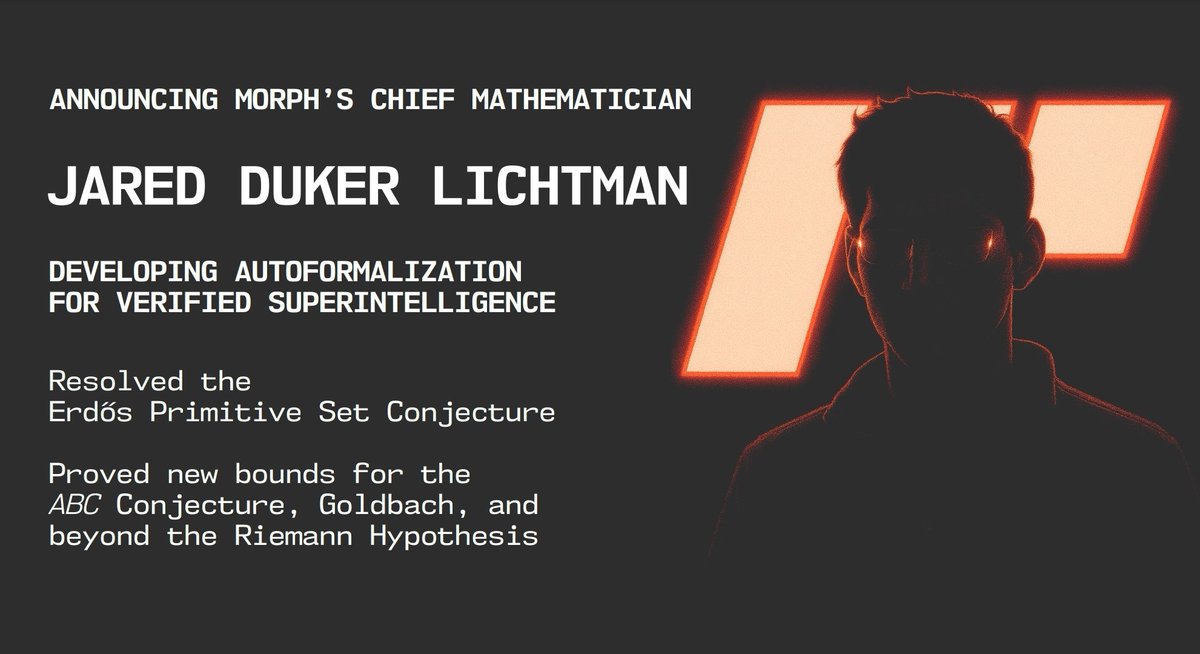

We're excited to welcome Jared Duker Lichtman to Morph as our chief mathematician, where he will shorten the way to verified superintelligence.

Self-supervised representation learning looks a bit like RL. What if we literally use RL as an SSL method for visual representations? Turns out that it works quite well. In new work by Dibya Ghosh, we show how this can be done: dibyaghosh.com/annotation_boo…

Tried Grok 4 on a dozen non-trivial (under)grad-level math problems. So far, it has failed to fail me even once. Congrats to Yuhuai (Tony) Wu, Eric Zelikman and the whole xAI reasoning team. Their progress has exceeded all my expectations!