Michael Churchill

@churchillmic

Fusion energy computational research engineer/physicist, head of digital engineering @PPPLab #fusion #AI

ID: 1299163639

25-03-2013 12:04:12

5.5K Tweets

636 Followers

1.1K Following

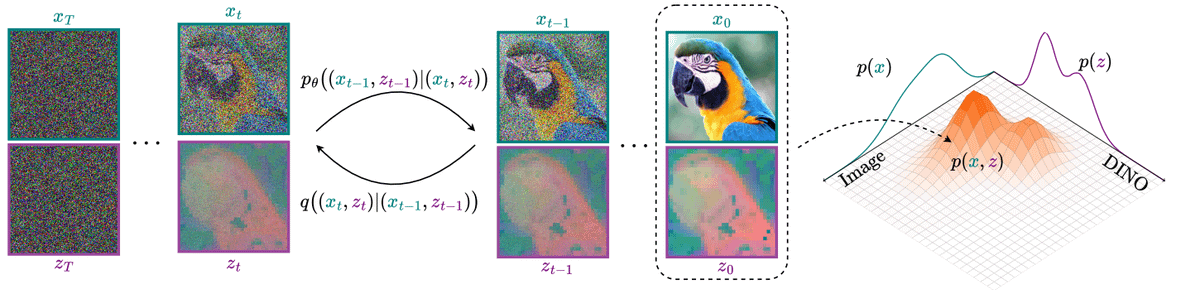

📢 EquiVDM: Equivariant Video Diffusion Models with Temporally Consistent Noise. What's the role of equivariance in video diffusion models? And how can warped noise help with it? Is sampling from equivariant video models any easier? Project: research.nvidia.com/labs/genair/eq… w/ Chao Liu.

Paper: arxiv.org/pdf/2504.13074
Project page: github.com/SkyworkAI/SkyR…
Try SkyReels - All-in-one AI Video Creation Platform now: 👇 skyreels.ai/home?utm_sourc…

Reward-driven algorithms for training dynamical generative models lag significantly behind their data-driven counterparts in scalability. We aim to rectify this. Adjoint Matching poster (Carles Domingo-Enrich): Sat 3pm & Adjoint Sampling oral (Aaron Havens): Mon 10am FPI