Ndix Makondo🇿🇦🇯🇵

@collinsoptix

AI and robotics, seeking to uplift Africa through technology.

ID: 84659526

23-10-2009 18:46:54

349 Tweets

109 Followers

381 Following

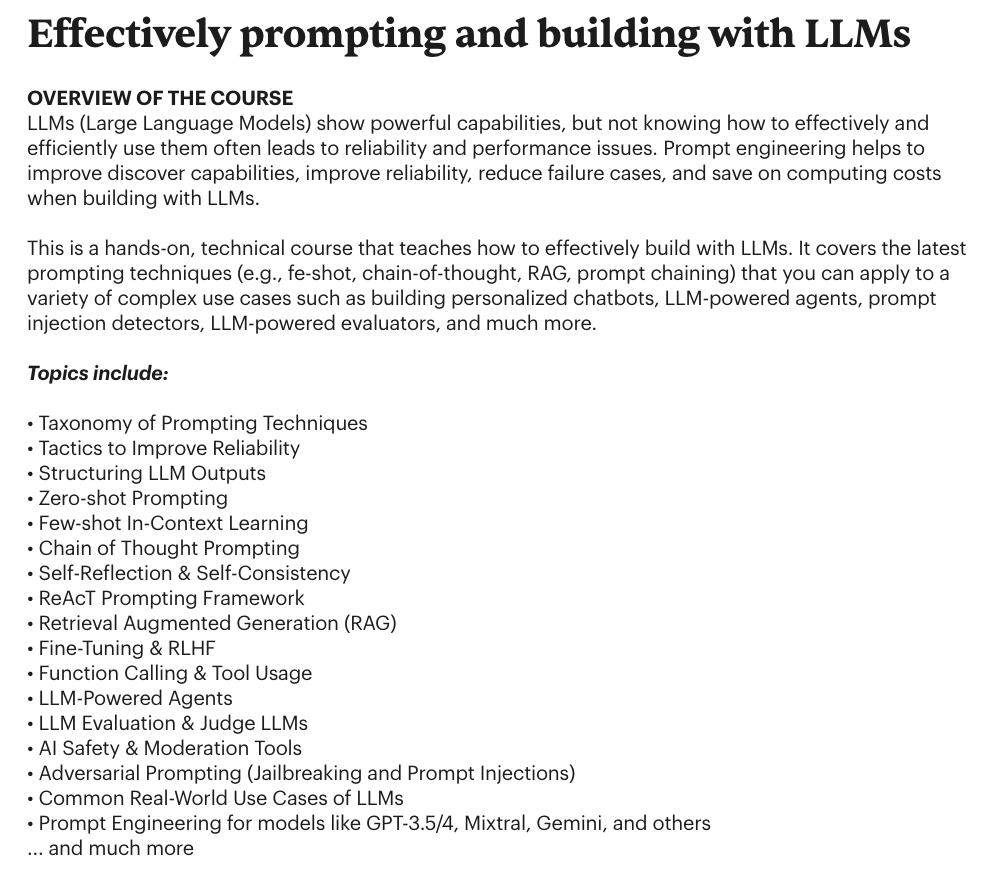

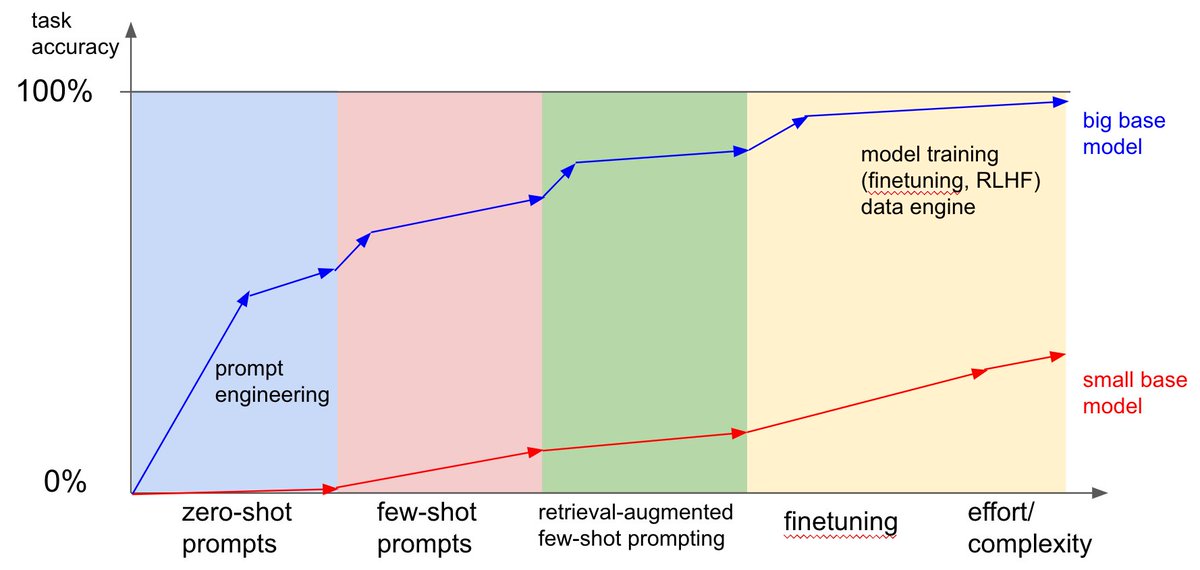

Aparna Dhinakaran Gloria Felicia It's a great question. I roughly think of finetuning as analogous to expertise in people:

- Describe a task in words ~= zero-shot prompting
- Give examples of solving task ~= few-shot prompting
- Allow person to practice task ~= finetuning

With this analogy in mind, it's

📣 if you’re attending Deep Learning Indaba and you work on #TrustML, submit an extended abstract at our workshop (co-organized w/ Aisha Alaagib, Mbangula Lameck Amugongo #BlackLivesMatter, Siobhan Mackenzie Hall, Tejumade & Nathi) 🤩 Are you working on audit techniques ⚖️? Robust ML 🤖? Privacy 🔐? ➡️ trustaideepindaba.github.io/2023/06/07/cfp/

We are going to start "NSA: Neuro-Symbolic Agents Workshop" at Almaty 6006 #IJCAI2023 Ndix Makondo🇿🇦🇯🇵 nsa-wksp.github.io