Da-Cheng Juan

@dachengjuan1

Research, Technology, Products.

Tech Lead Manager @GoogleAI & Adjunct Faculty @NTsingHuaU

All opinions are my own.

ID: 1253016284767203328

22-04-2020 17:42:22

14 Tweets

65 Followers

42 Following

Our paper "Sparse Sinkhorn Attention" has been accepted to #ICML2020! 😃 In this paper, we propose efficient self-attention via differentiable sorting. Joint work with Dara Bahri, @liuyangumass, Don Metzler, and Da-Cheng Juan. Preprint: arxiv.org/abs/2002.11296

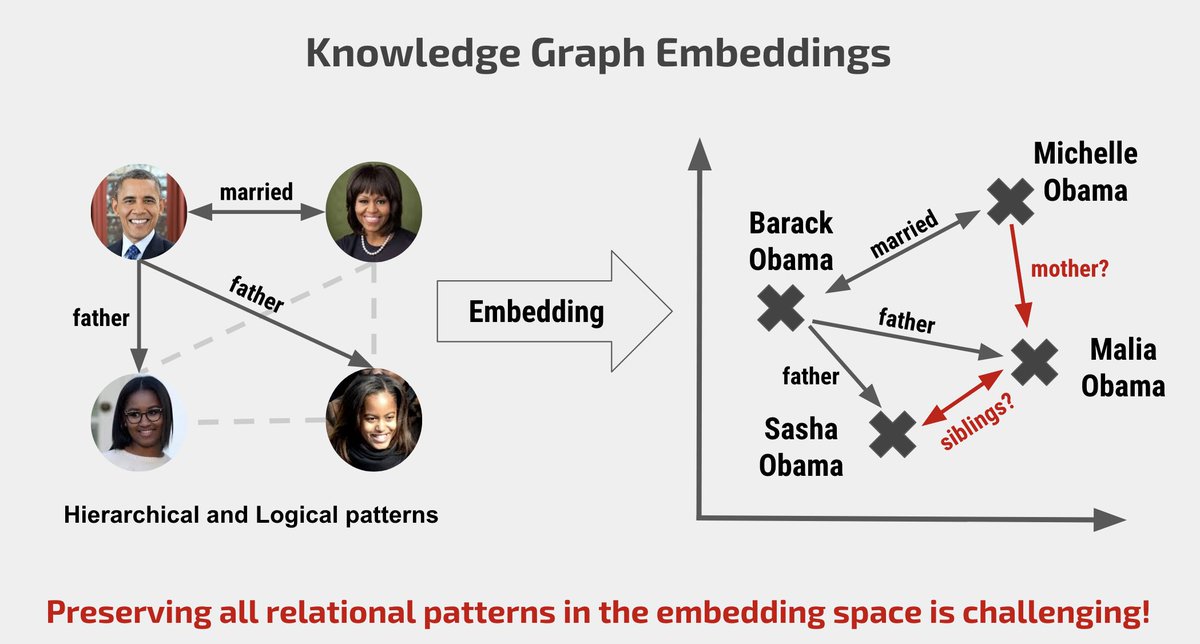
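The "differentiable sorting" in the tweet refers to Sinkhorn balancing, which repeatedly normalizes the rows and columns of a positive matrix so that it approaches a doubly stochastic "soft permutation". Below is a minimal pure-Python sketch of log-space Sinkhorn iterations, assuming a small square score matrix and a fixed iteration count. The names `sinkhorn`, `n_iters`, and `temperature` are hypothetical and not taken from the paper's code.

```python
import math


def logsumexp(values):
    """Numerically stable log(sum(exp(v)))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def sinkhorn(scores, n_iters=50, temperature=1.0):
    """Turn a square score matrix into an approximately doubly stochastic one.

    Hypothetical helper: alternates row and column normalization in log space,
    which is the classic Sinkhorn balancing procedure.
    """
    n = len(scores)
    # Lower temperature sharpens the result toward a hard permutation.
    log_p = [[s / temperature for s in row] for row in scores]
    for _ in range(n_iters):
        # Row normalization: every row's probabilities sum to 1.
        row_lse = [logsumexp(row) for row in log_p]
        log_p = [[v - row_lse[i] for v in row] for i, row in enumerate(log_p)]
        # Column normalization: every column's probabilities sum to 1.
        col_lse = [logsumexp([log_p[i][j] for i in range(n)]) for j in range(n)]
        log_p = [[log_p[i][j] - col_lse[j] for j in range(n)] for i in range(n)]
    return [[math.exp(v) for v in row] for row in log_p]


# Usage: a score matrix that strongly prefers the permutation (1, 0, 2).
soft_perm = sinkhorn([[0.0, 5.0, 0.0], [5.0, 0.0, 0.0], [0.0, 0.0, 5.0]],
                     temperature=0.5)
```

Because every step is a smooth function of the scores, gradients can flow through the resulting soft permutation. In the paper, sorting operates over blocks of the input sequence; this sketch shows only the normalization step.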

Excited to share a video describing our approach to learning hyperbolic knowledge graph embeddings! youtube.com/watch?v=Yf03-C… Thanks to my amazing collaborators: Adva Wolf, Da-Cheng Juan, Fred Sala, Sujith Ravi, and hazyresearch!
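Hyperbolic embeddings replace Euclidean distance with a hyperbolic metric. As a minimal sketch, assume the standard Poincaré ball with fixed curvature -1, where the geodesic distance is arcosh(1 + 2‖x − y‖² / ((1 − ‖x‖²)(1 − ‖y‖²))). The approach in the video may use a different model or a learnable curvature, and the function name `poincare_distance` is hypothetical.

```python
import math


def poincare_distance(x, y):
    """Geodesic distance between two points in the Poincaré ball (curvature -1).

    Both points must lie strictly inside the unit ball (Euclidean norm < 1).
    """
    sq_norm_x = sum(v * v for v in x)
    sq_norm_y = sum(v * v for v in y)
    if sq_norm_x >= 1.0 or sq_norm_y >= 1.0:
        raise ValueError("points must lie strictly inside the unit ball")
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    arg = 1.0 + 2.0 * sq_dist / ((1.0 - sq_norm_x) * (1.0 - sq_norm_y))
    return math.acosh(arg)


# Usage: distances from the origin grow rapidly near the boundary.
near = poincare_distance([0.0, 0.0], [0.5, 0.0])  # equals ln 3
far = poincare_distance([0.0, 0.0], [0.9, 0.0])   # equals ln 19
```

From the origin the formula reduces to 2·artanh(‖x‖), so a point at radius 0.5 is ln 3 ≈ 1.10 away while one at radius 0.9 is ln 19 ≈ 2.94 away. This rapid growth near the boundary gives hyperbolic space room to embed tree-like hierarchies in few dimensions.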

Join us at KDD on Tuesday, 9 AM–12 PM Pacific time. We’re organizing a hands-on tutorial on Neural Structured Learning. The tutorial outline is at github.com/tensorflow/neu… Find us on Whova or vFairs. #KDD2020 Da-Cheng Juan SIGKDD 2025

Inspired by the dizzying number of efficient Transformer models ("x-formers") coming out lately, we wrote a survey paper to organize all this information. Check it out at arxiv.org/abs/2009.06732. Joint work with Mostafa Dehghani, Dara Bahri, and Don Metzler. Google AI 😀😃

📊 Neural Structured Learning in TFX. In Arjun Gopalan's new blog post, you'll learn how graph regularization can be implemented using custom TFX components and how adversarial learning can be deployed seamlessly with TFX. Read more ↓ blog.tensorflow.org/2020/10/neural…
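Graph regularization, as used in Neural Structured Learning, adds a term to the usual supervised loss that keeps a sample's learned representation close to the representations of its graph neighbors. Below is a minimal framework-free sketch of that combined objective, assuming squared L2 as the distance and a single scalar multiplier. The helper names are hypothetical, and this is not the NSL or TFX API.

```python
def squared_l2(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def graph_regularized_loss(supervised_loss, embedding, neighbors, multiplier=0.1):
    """Add a neighbor-agreement penalty to one sample's supervised loss.

    Hypothetical helper: `neighbors` is a list of (neighbor_embedding, edge_weight)
    pairs, and the penalty is the edge-weighted squared L2 distance to each one.
    """
    penalty = sum(weight * squared_l2(embedding, nbr) for nbr, weight in neighbors)
    return supervised_loss + multiplier * penalty


# Usage: one neighbor agrees exactly, the other is distance 1 away with weight 0.5.
loss = graph_regularized_loss(
    0.5,
    [1.0, 0.0],
    [([1.0, 0.0], 1.0), ([0.0, 0.0], 0.5)],
    multiplier=0.2,
)  # 0.5 + 0.2 * (1.0 * 0.0 + 0.5 * 1.0) = 0.6
```

Real NSL pipelines attach neighbor features to each training example and let you choose the distance metric. This sketch only shows the shape of the combined objective.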

[#NeurIPS2020 Talk] Mitigating Forgetting in Online Continual Learning via Instance-Aware Parameterization, by researchers from NTHU and Google AI (An-Chieh Cheng, Da-Cheng Juan). #paperwithvideo #machinelearning #neuralnetwork #deeplearning #convolution bit.ly/3nE3veY