Nolan Dey

@deynolan

Research Scientist @ Cerebras Systems

ID: 1508916574132002817

https://ndey96.github.io

29-03-2022 21:18:55

32 Tweets

435 Followers

36 Following

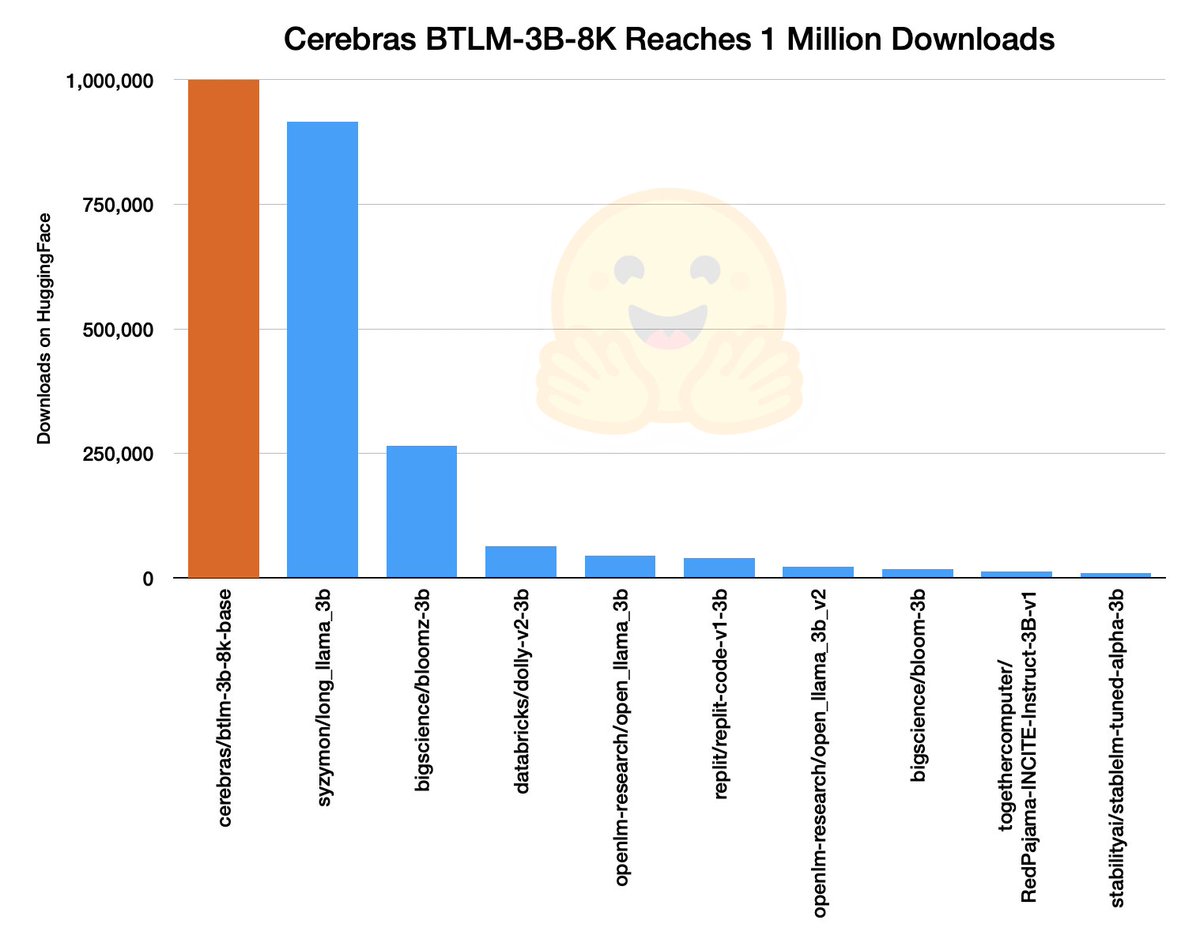

Cerebras BTLM-3B-8K model crosses 1M downloads🤯 It's the #1 ranked 3B language model on Hugging Face! A big thanks to all the devs out there building on top of open source models 🙌