explAInable.NL

@explainablenl

Tweeting about Explainable AI in the Netherlands. Account run by @wzuidema (amsterdam.explainable.nl) and others.

explainable.nl

ID: 1181276757032407042

07-10-2019 18:35:14

259 Tweets

435 Followers

224 Following

This paper analyzing discrete representations in models of spoken language, with @bertrand_higy, Lieke Gelderloos, and Afra Alishahi, will appear at #BlackboxNLP #EMNLP2021 arxiv.org/abs/2105.05582

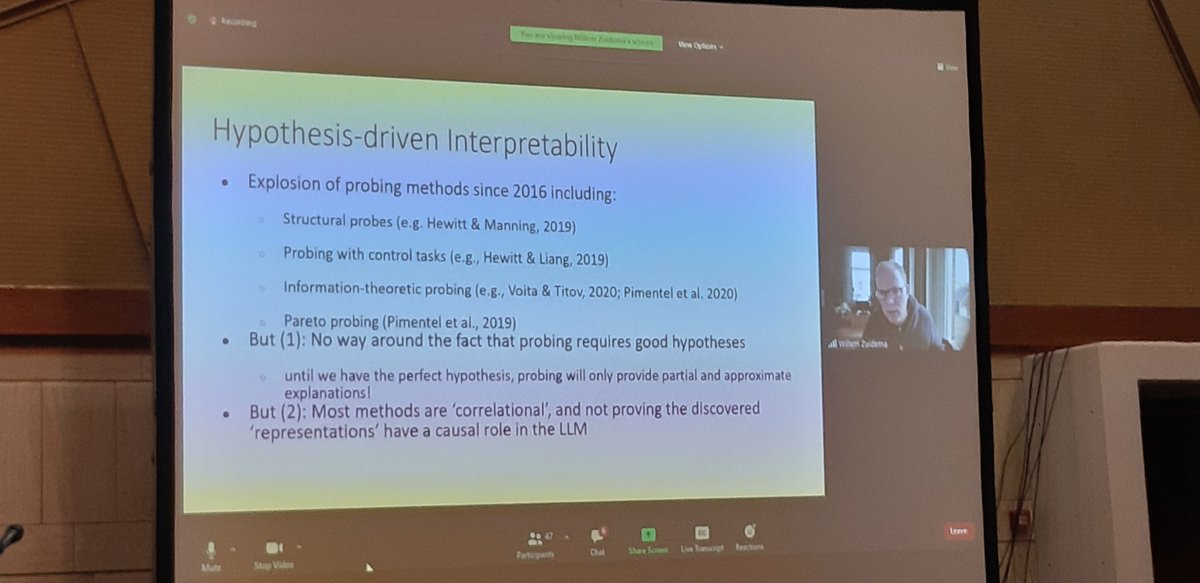

@wzuidema Sara Hooker Ana Marasović Hot take from @wzuidema: progress in probing classifiers will not come from sophisticated probing techniques but from the hard work of forming better hypotheses.

Grzegorz Chrupała 🇪🇺🇺🇦 A paper at ICASSP 2020 proposed probing by "audification" of the hidden representations in an ASR model. They train a speech synthesizer on top of the ASR representations. They have a nice video of their work here: youtu.be/6gtn7H-pWr8

📢#MSCAJobAlert Last days to apply to the PhD student position in #AI within NL4XAI Marie Skłodowska-Curie Actions at CiTIUS, ES. Join us and work on the following topic: From Grey-box Models to Explainable Models. ⌛️Deadline 31/03/2022 Apply👉nl4xai.eu/open_position/… Horizon 2020

Excited to see our work featured in Fortune. Thank you so much, Jeremy Kahn!

And happy that our work "On genetic programming representations and fitness functions for interpretable dimensionality reduction" also made it to GECCO 2022! Preprint: arxiv.org/abs/2203.00528 A short explanation 👇 1/8

This year's Spring Conference focuses on foundation models, accountable AI, and embodied AI. HAI Associate Director and event co-host Christopher Manning explains these key areas and why you should not miss this event: stanford.io/3IxnjdH

I visualized my last #semantle game with a UMAP of the word embeddings. Here's the result: bp.bleb.li/viewer?p=D5d3y Semantle is a word guessing game by David Turner where your guesses, unlike in #wordle, are ranked by their similarity in meaning, not spelling, to the secret word.