Fabian Gloeckle

@fabiangloeckle

PhD student at @AIatMeta and @EcoledesPonts with @syhw and @Amaury_Hayat, co-supervised by @wtgowers. Machine learning for mathematics and programming.

ID: 1694458386635534336

23-08-2023 21:15:42

52 Tweets

519 Followers

220 Following

We're looking for a postdoc at Meta FAIR to work on AI4Math, e.g., neural theorem proving, autoformalization, learning mathematical rules and abstractions, and automated discovery/conjecturing in math. Please apply at metacareers.com/jobs/145969190… and email me. Please help share this

github.com/facebookresear… by Guillaume Lample @ NeurIPS 2024, Timothee Lacroix, Marie-Anne Lachaux, Aurelien Rodriguez, Amaury Hayat, Thibaut Lavril, Gabriel Ebner, Xavier M and others!

Prof. Anima Anandkumar, I really worry that the, shall we say, “questionable” claims in this paper (162 theorems unproven by humans) will get taken seriously and the rest of us working in this field will look really bad for it. There is much better work already in this field.

Meta Lingua: a minimal, fast LLM codebase for training and inference. By researchers, for researchers. Easily hackable, still reproducible. Built-in efficiency, profiling (CPU, GPU and memory) and interpretability (automatic activation and gradient statistics). Joint work w/ Badr Youbi Idrissi

We present an Autoregressive U-Net that incorporates tokenization inside the model, pooling raw bytes into words then word-groups. AU-Net focuses most of its compute on building latent vectors that correspond to larger units of meaning. Joint work with Badr Youbi Idrissi 1/8

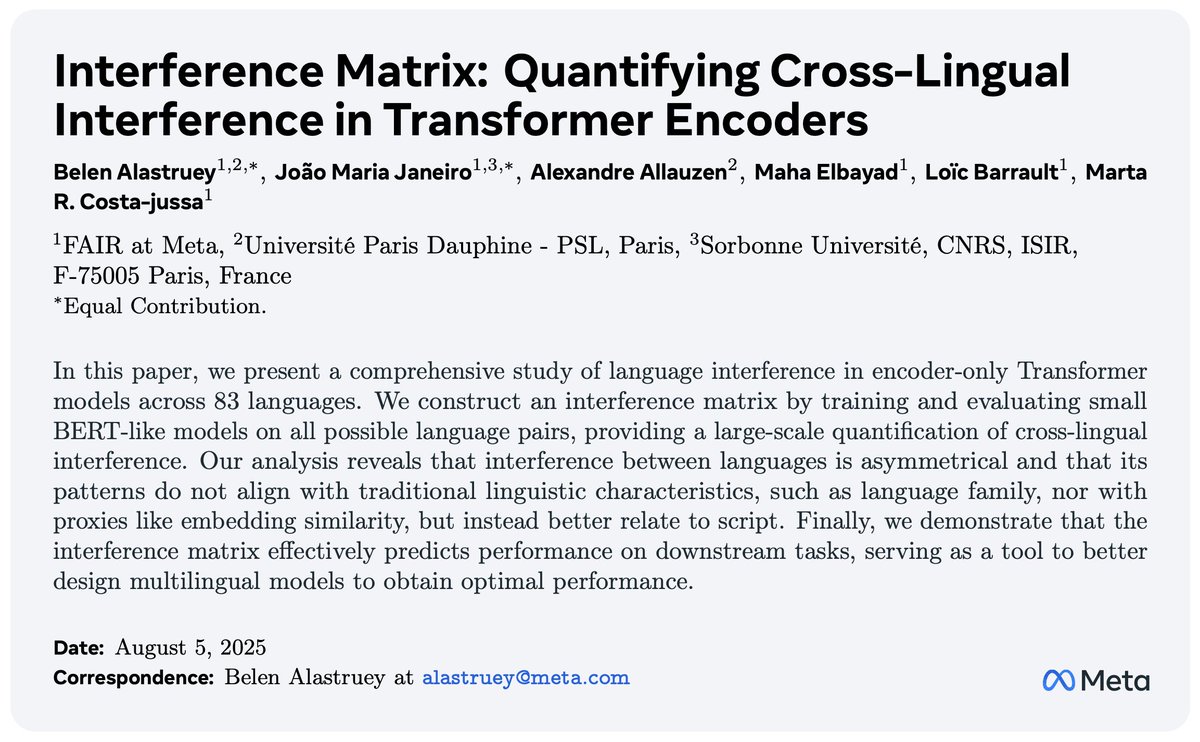

🚀 New paper alert! 🚀 In our work at AI at Meta, we dive into the struggles of mixing languages in largely multilingual Transformer encoders and use the analysis as a tool to design better multilingual models with optimal performance. 📄: arxiv.org/abs/2508.02256 🧵(1/n)