Francesco Faccio @ ICLR2025 🇸🇬

@faccioai

Senior Research Scientist @GoogleDeepMind Discovery Team. Previously PhD with @SchmidhuberAI. Working on Reinforcement Learning.

ID: 1037009714406715392

https://faccio.ai/ 04-09-2018 16:09:17

37 Tweets

901 Followers

307 Following

It was great to be part of this work and to present it at the #NeurIPS23 R0-FoMo workshop. Congrats to Mingchen Zhuge, Dylan R. Ashley, and all the other authors!

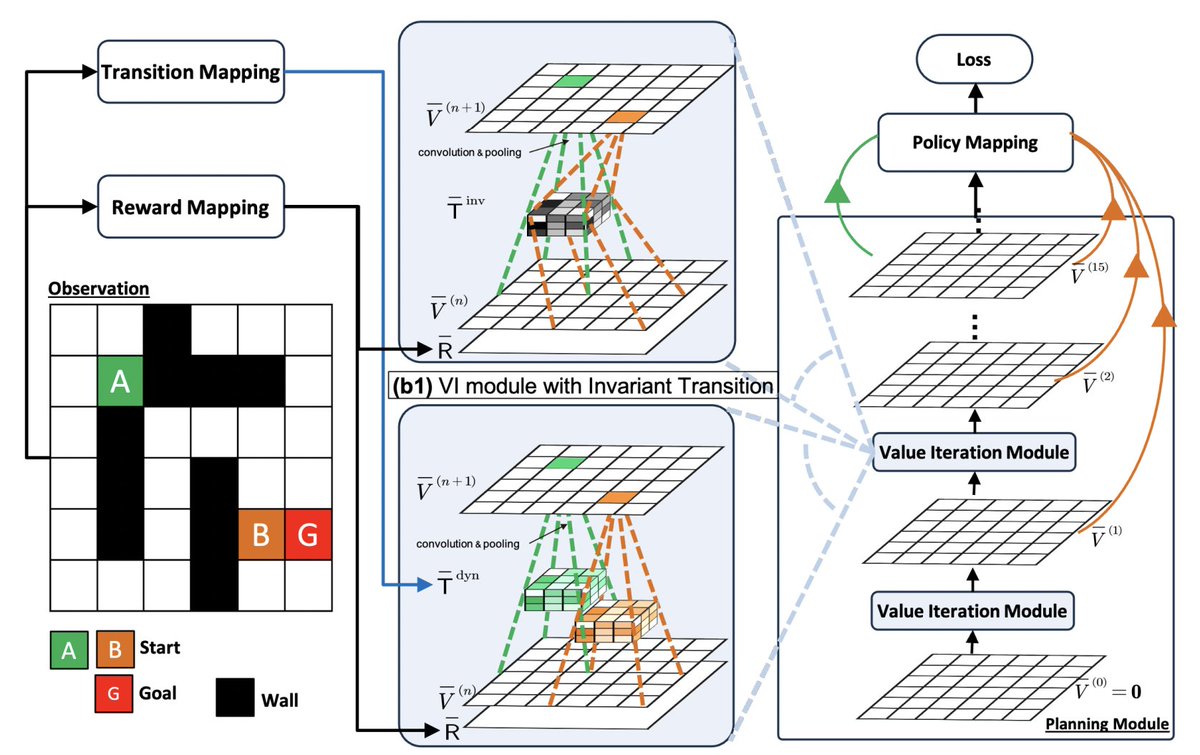

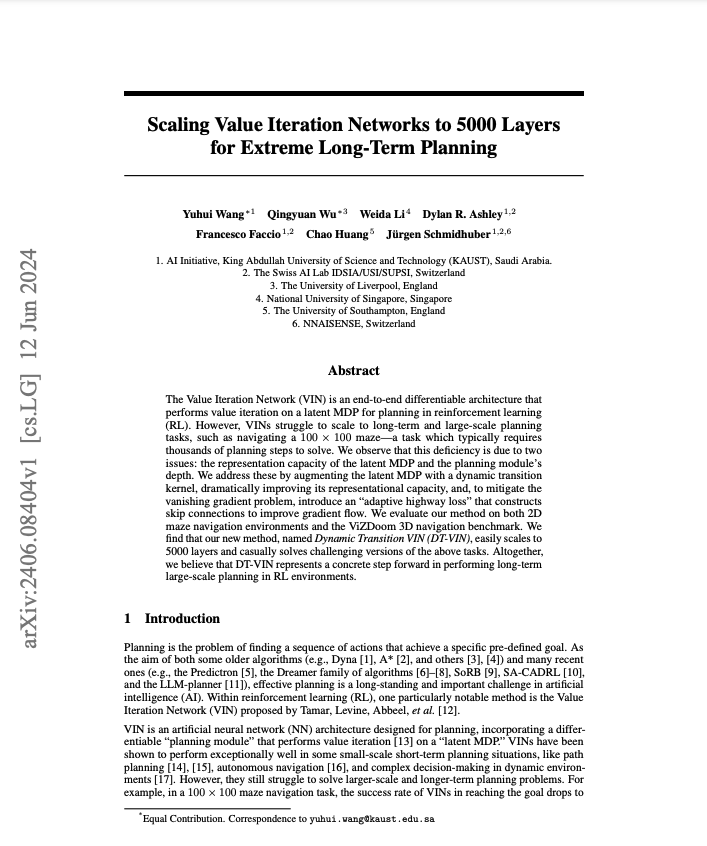

Can neural networks with 5000 layers improve long-term planning? 🤖 Check out our latest research with Jürgen Schmidhuber, Dylan R. Ashley, and team: arxiv.org/abs/2406.08404 #AI #DeepLearning #RL

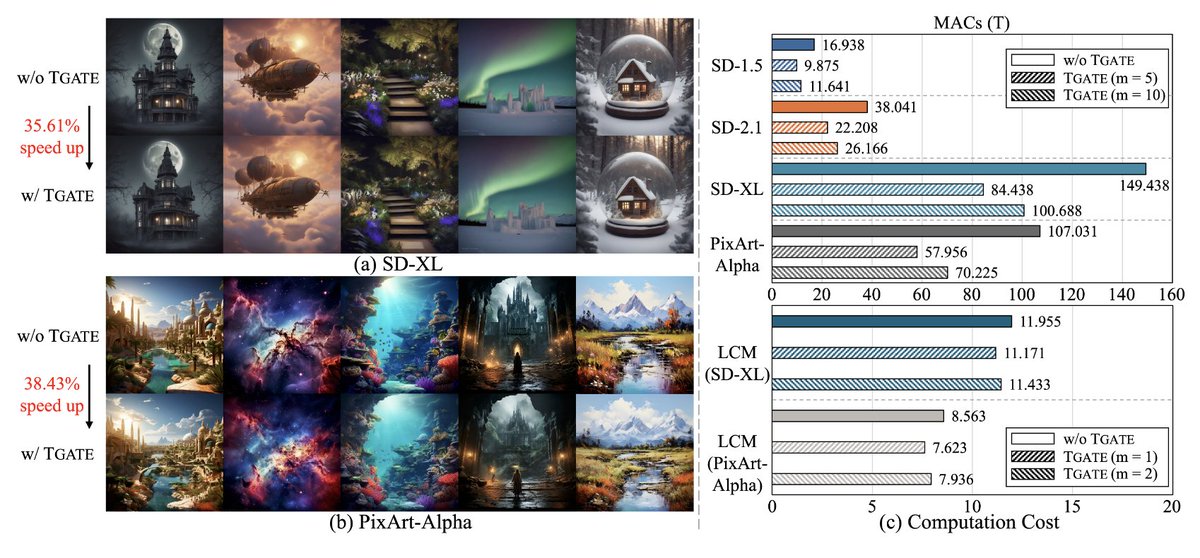

🚀Want to cut inference times by up to 50% and save money when using Transformer/CNN/Consistency-based diffusion models? Check out our latest work on Faster Diffusion Through Temporal Attention Decomposition led by Haozhe Liu, featuring Jürgen Schmidhuber. Paper:

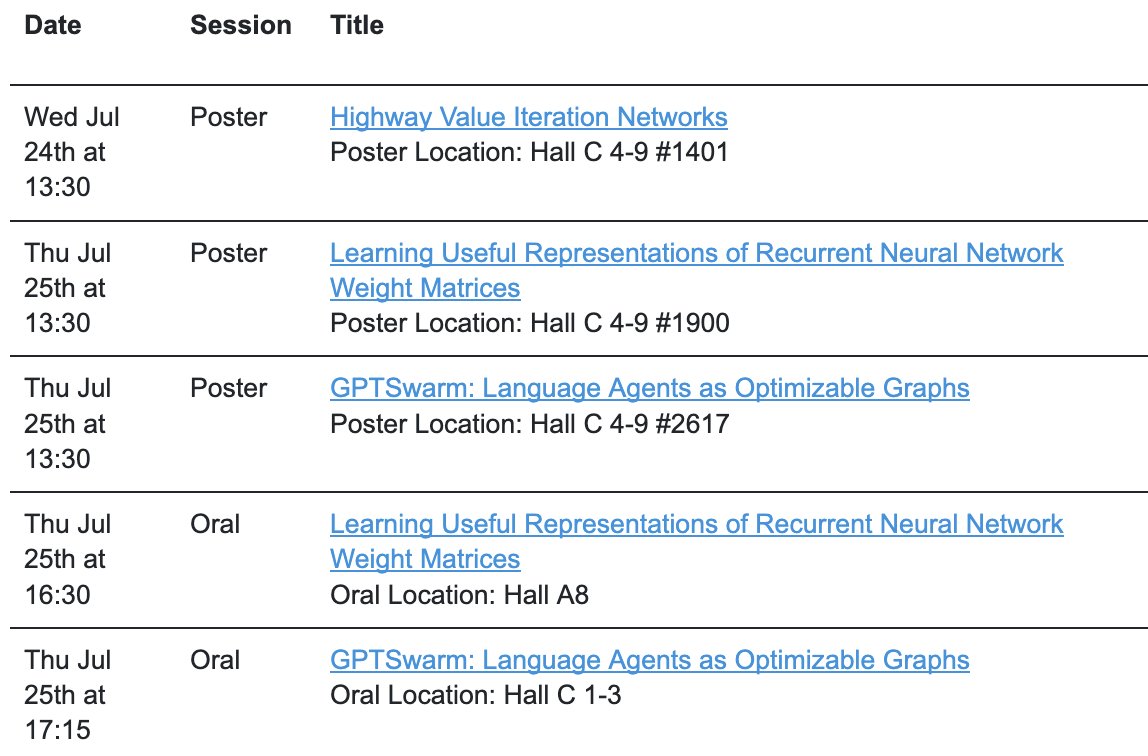

Heading to #ICML2024 for a busy week with 3 posters and 2 oral presentations. If you’re interested in discussing collaborations, visiting, or hiring opportunities at Artificial Intelligence @ KAUST with Jürgen Schmidhuber, feel free to connect!

Had a great time presenting at #ICML2024 alongside Vincent Herrmann & Louis Kirsch. But the true highlight was Jürgen Schmidhuber himself capturing our moments on camera! #AI #DeepLearning

I'm thrilled to announce that I'm joining the Discovery team at Google DeepMind in London as a Senior Research Scientist starting this January! It's incredible what the team has achieved in the past decade, and I am so looking forward to more scientific discoveries with AI.

I'm in Singapore for #ICLR2025! DM me if you’d like to meet and chat about Creativity and Curiosity in AI, AGI, Agents, or exciting opportunities at Google DeepMind. You might even get a free Italian coffee ☕️ :)