Chi Han

@glaciohound

CS PhD student at UIUC, interested in language models and their understanding.

ID: 1438485864393314311

https://glaciohound.github.io/

16-09-2021 12:52:30

54 Tweets

745 Followers

264 Following

We got the #ACL2024 Outstanding Paper Award for "LM-Steer: Word Embeddings Are Steers for Language Models"! A big shoutout and congrats to our amazing leaders Chi Han and Heng Ji, and to our wonderful team: Jialiang Xu, @YiFung10, Chenkai Sun, Nan Jiang, and Tarek!

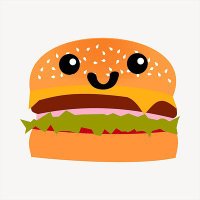

I am at #EMNLP2024! I will present our work "Why Does New Knowledge Create Messy Ripple Effects in LLMs?" on Wed at 10:30am. Thanks to all my collaborators: Heng Ji, Zixuan Zhang, Chi Han, and Manling Li. Looking forward to chatting! Paper link: arxiv.org/pdf/2407.12828

📢 Our 2nd Knowledgeable Foundation Model workshop will be at AAAI 25! Submission deadline: Dec 1st. Thanks to the wonderful organizing team, Zoey Sha Li, Mor Geva, Chi Han, Xiaozhi Wang, Shangbin Feng, and @silingao, and to the advising committee, Heng Ji, Isabelle Augenstein, and Mohit Bansal!

👽 New release: Rather than using arXiv papers, we filter web data for educational value and use it to continually pre-train a specialist Astro LLM. Big thanks to the first author, Eric Modesitt, for leading the project! 💪 Great ideas and strong execution; you're an amazing undergrad!