Wei Yu

@gnosisyu

ID: 970040220904177664

03-03-2018 20:56:25

93 Tweets

31 Followers

979 Following

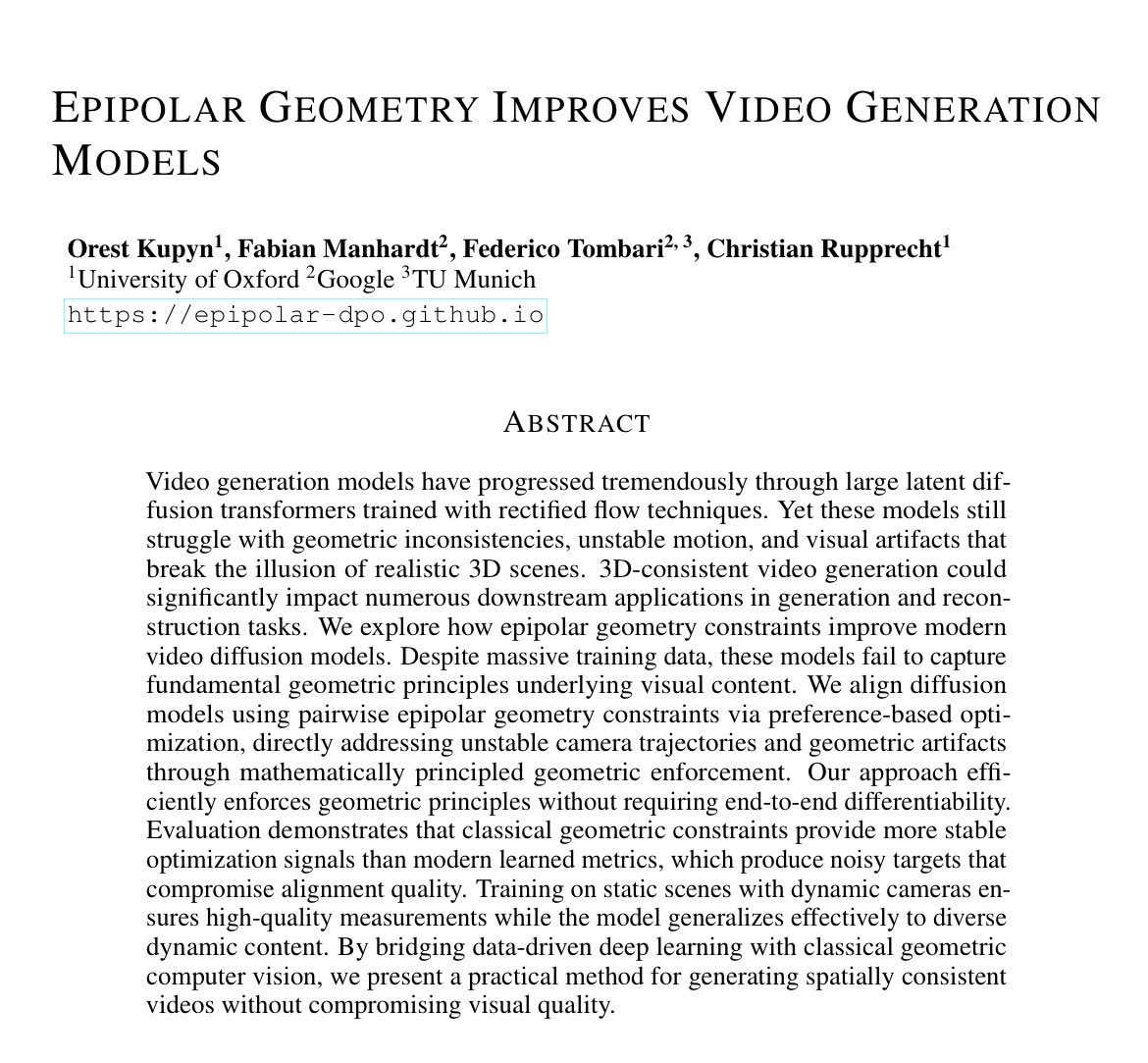

Really exciting multi-agent collaboration environment! Xavier Puig, Tianmin Shu, Shuang Li, Zilin Wang, Josh Tenenbaum, Antonio Torralba #machinelearning

How do we bridge the Sim 2 Real gap? What is necessary and what is sufficient! 🤖🤯 Check out: Dynamics Randomization Revisited: A Case Study for Quadrupedal Locomotion Paper: arxiv.org/abs/2011.02404 Project: pair.toronto.edu/understanding-… Dennis Da, Xie Zhaoming @Mvandepanne Buck Babich

Long term reasoning needs an understanding of continuous changes in the world. "What happens if I open the door?" Action Concept Grounding Networks learn these semantics. arxiv.org/abs/2011.11201 iclr-acgn.github.io/ACGN/ Wei Yu W.Chen No longer on Xitter University of Toronto Robotics Institute Vector Institute