Gregor Bachmann

@gregorbachmann1

I am a PhD student @ETH Zürich working on deep learning. MLP-pilled 💊.

gregorbachmann.github.io

ID: 1527256391806746624

19-05-2022 11:54:49

111 Tweets

336 Followers

345 Following

Ilan Fridman Rojas Sasha Rush Cosma Shalizi This is really nice! But the proof is very general and thus complicated. A simpler proof, together with a proof of what can go wrong when learning these next-token predictors with MLE, is given in this (IMHO underrated) paper arxiv.org/pdf/2403.06963 Gregor Bachmann Vaishnavh Nagarajan

We have an exciting line-up of keynote speakers at our workshop for open-vocabulary 3D scene understanding, OpenSUN3D☀️ at #ECCV2024! 🗓️Sept 29, Sunday 14:00-17:30 ✍️opensun3d.github.io Tim Meinhardt Or Litany Alex Bewley Krishna Murthy

🚀 Excited to share our preprint LoRACLR! TL;DR: LoRACLR merges multiple LoRA models into a unified diffusion model for seamless, high-fidelity multi-concept image synthesis with minimal interference. Thanks to Thomas Hofmann, Federico Tombari, and Pinar Yanardag! 🙌

BPE is a greedy method to find a tokeniser which maximises compression! Why don't we try to find properly optimal tokenisers instead? Well, it seems this is a very difficult—in fact, NP-complete—problem!🤯 New paper + P. Whittington, Gregor Bachmann :) arxiv.org/abs/2412.15210

I will be giving a talk on open-vocabulary 3D scene understanding at the next ZurichCV meetup! 🗓️ Date: Thursday, January 23rd 18:00 📍Location: ETH AI Center, please see zurichai.ch/events/zurichc… for additional details!

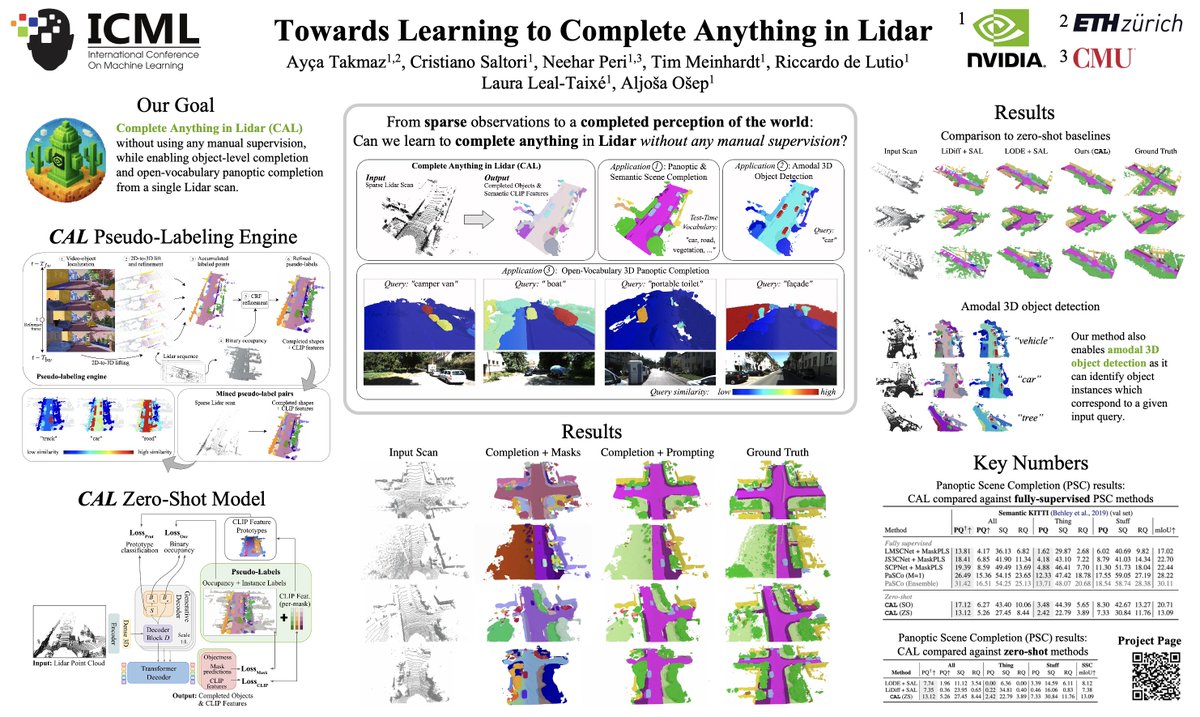

Thanks AK for sharing! During my internship at NVIDIA AI, we explored zero-shot panoptic completion of Lidar scans — together with Cristiano Saltori Neehar Peri Tim Meinhardt Riccardo de Lutio Laura Leal-Taixe Aljosa!

François Fleuret Hey François Fleuret, we had formalized this very intuition here in this late-2023 work you may be interested in :-) arxiv.org/abs/2403.06963

Better LLM training? Gregor Bachmann & Vaishnavh Nagarajan showed next-token prediction causes shortcut learning. A fix? Multi-token prediction training (thanks Fabian Gloeckle). We use register tokens: minimal architecture changes & scalable prediction horizons. x.com/NasosGer/statu…

Can we learn to complete anything in Lidar without any manual supervision? Excited to share our #ICML2025 paper “Towards Learning to Complete Anything in Lidar” from my time at NVIDIA with Cristiano Saltori Neehar Peri Tim Meinhardt Riccardo de Lutio Aljosa Laura Leal-Taixe! Thread🧵👇

Honoured to receive two (!!) Senior Area Chair awards at #ACL2025 😁 (Conveniently placed on the same slide!) With the amazing Philip Whittington, Gregor Bachmann and Ethan Gotlieb Wilcox, Cui Ding, Giovanni Acampa, Alex Warstadt, Tamar Regev