Guillaume Le Strat

@guillaumelst

@zml_ai

Tech, startups, data & music

Paris - South of France

ID: 1539415124

http://www.linkedin.com/in/Guillaume-Le-Strat

22-06-2013 20:02:32

244 Tweets

350 Followers

2.2K Following

Mitchell Hashimoto Tristan Ross 😺❄️ Bun Literally yesterday with Francis Bouvier and Katie Hallett from Lightpanda

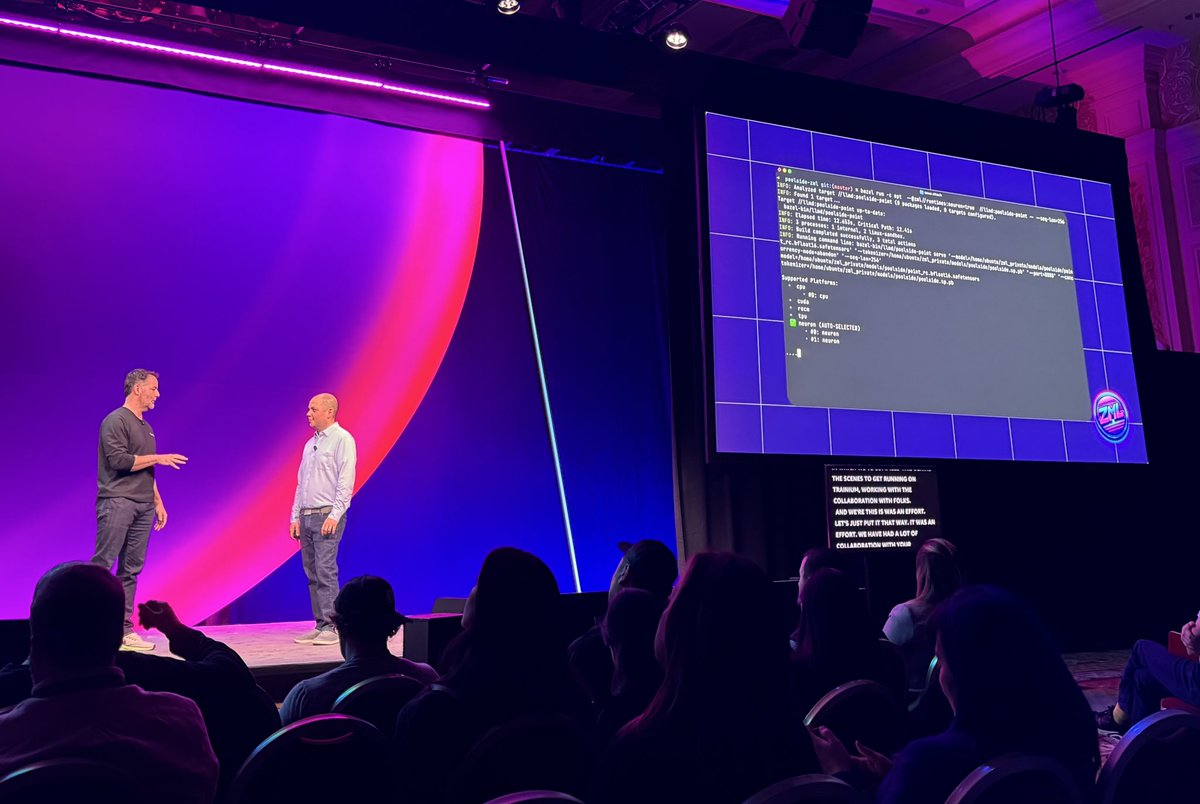

Boy oh boy, am I excited for this. Steeve Morin from ZML demoing at AI Demo Days, LFG! One server, many models, speed af.

Great to see these two together: Steeve Morin & Harry Stebbings 🔥🔥

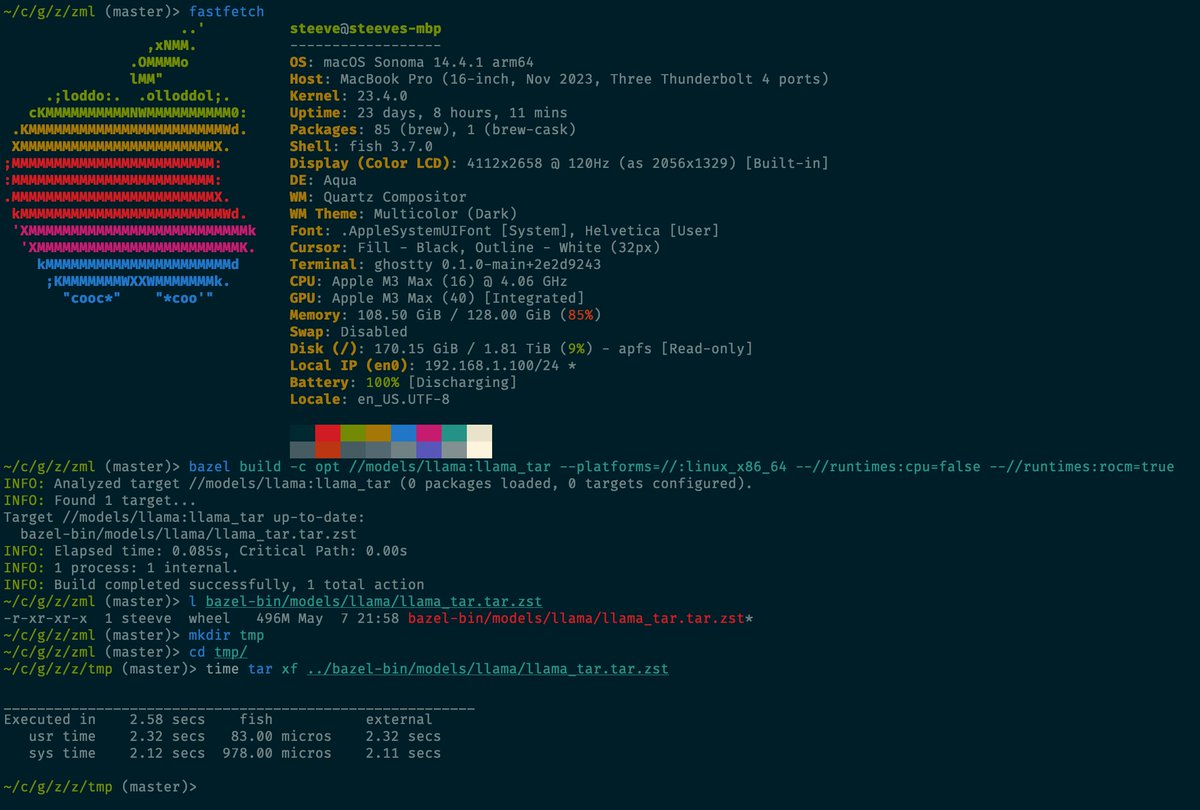

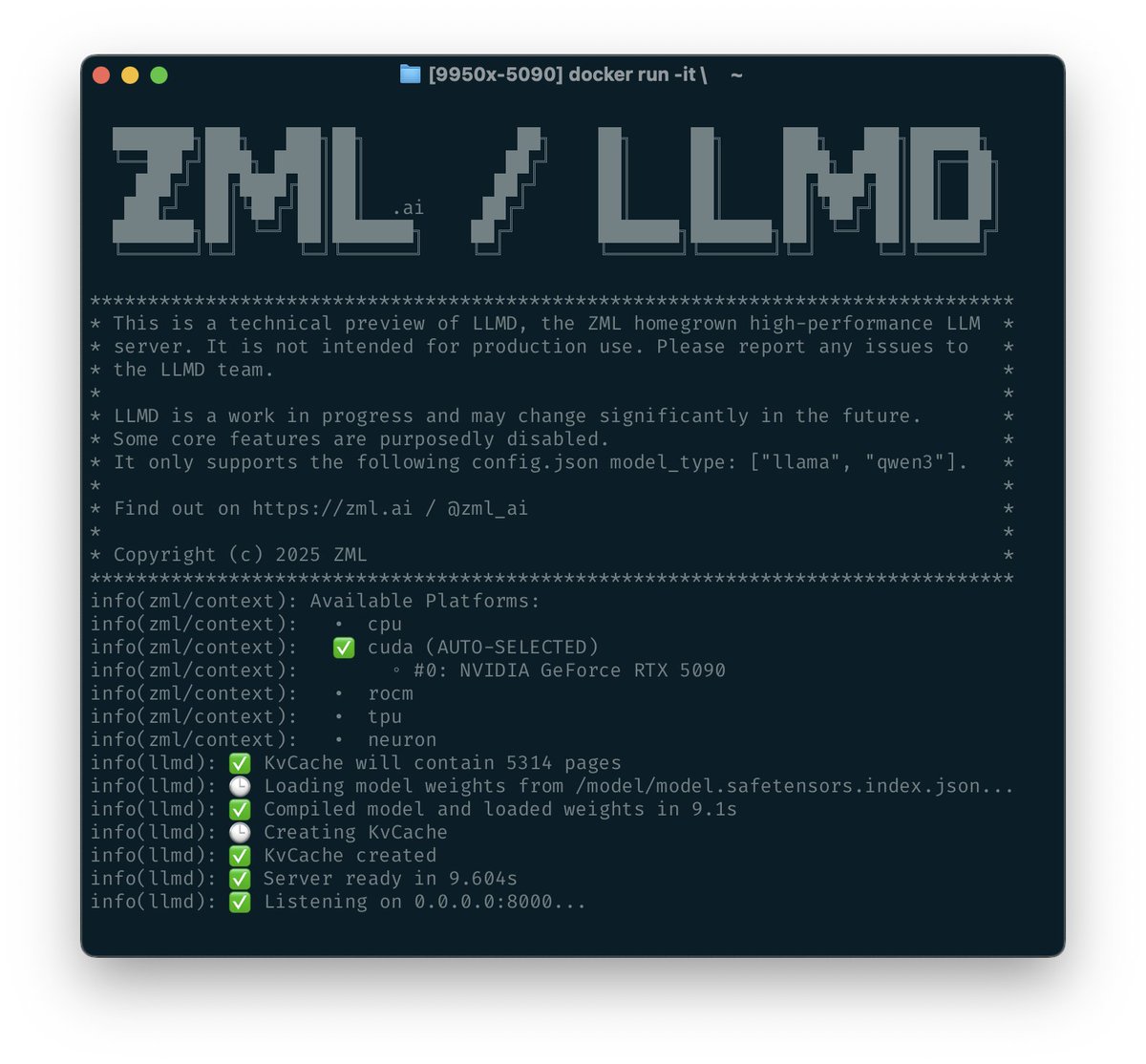

The ZML team and Steeve Morin cooked up a new high-performance LLM inference engine:
- lightweight 2.4GB container
- easy to run cross-platform
- written in Zig

I just tested it and deployed it on Hugging Face Inference Endpoints. You can try it out in 5 min! 👇