Gullal S. Cheema

@gullal7

Research Assistant @l3s_luh

Previously Marie Skłodowska-Curie ESR (PhD) @TIBHannover, Germany

MUWS Workshop: muws-workshop.github.io

Views are personal.

ID: 1042742865992937474

20-09-2018 11:50:47

685 Tweets

74 Followers

170 Following

It's been more than a decade since knowledge distillation (KD) was proposed, and I've been using it all along... but why does it work? There are many speculations but no simple explanation. Sungmin Cha and I decided to see whether we could come up with the simplest working description of KD in this work. We ended

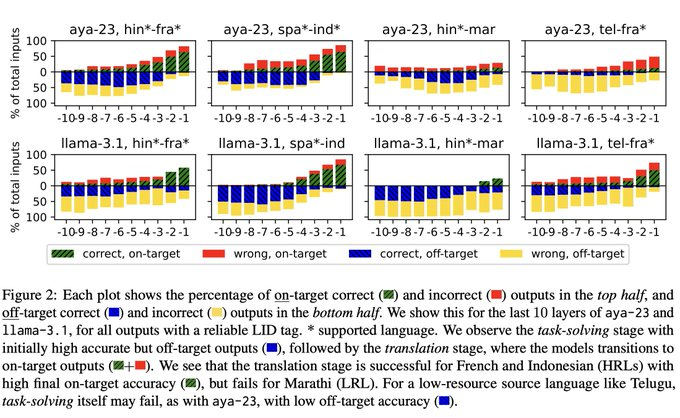

What’s really going on inside LLMs when they handle non-English queries? Niyati Bafna's recent work introduces the **translation barrier hypothesis**, a framework for understanding multilingual model behavior. The hypothesis states that: (1) Multilingual generation, internally,