Haihao Shen

@haihaoshen

Creator of #intel Neural Compressor and AutoRound for LLMs; HF Optimum-Intel Maintainer; OPEA Founding member; Opinions my own

ID: 1438706609400651777

https://github.com/intel/auto-round 17-09-2021 03:29:57

544 Tweets

3.3K Followers

2.2K Following

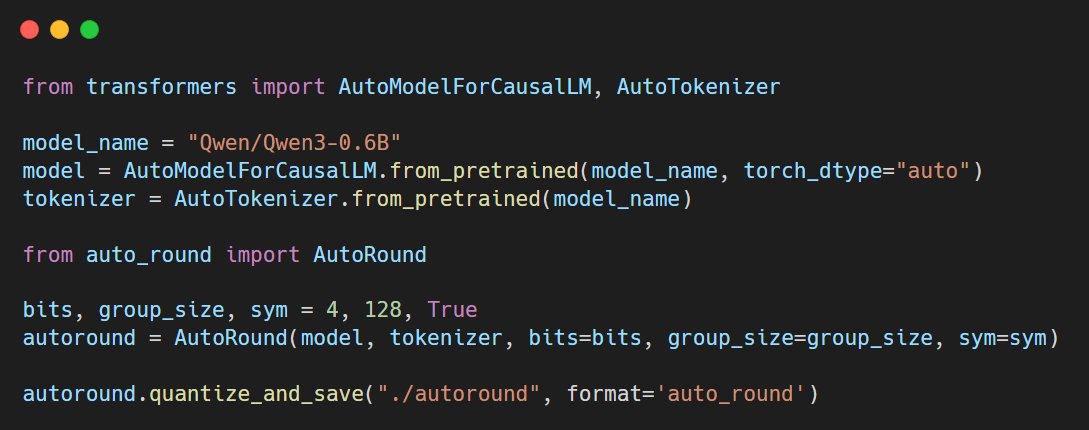

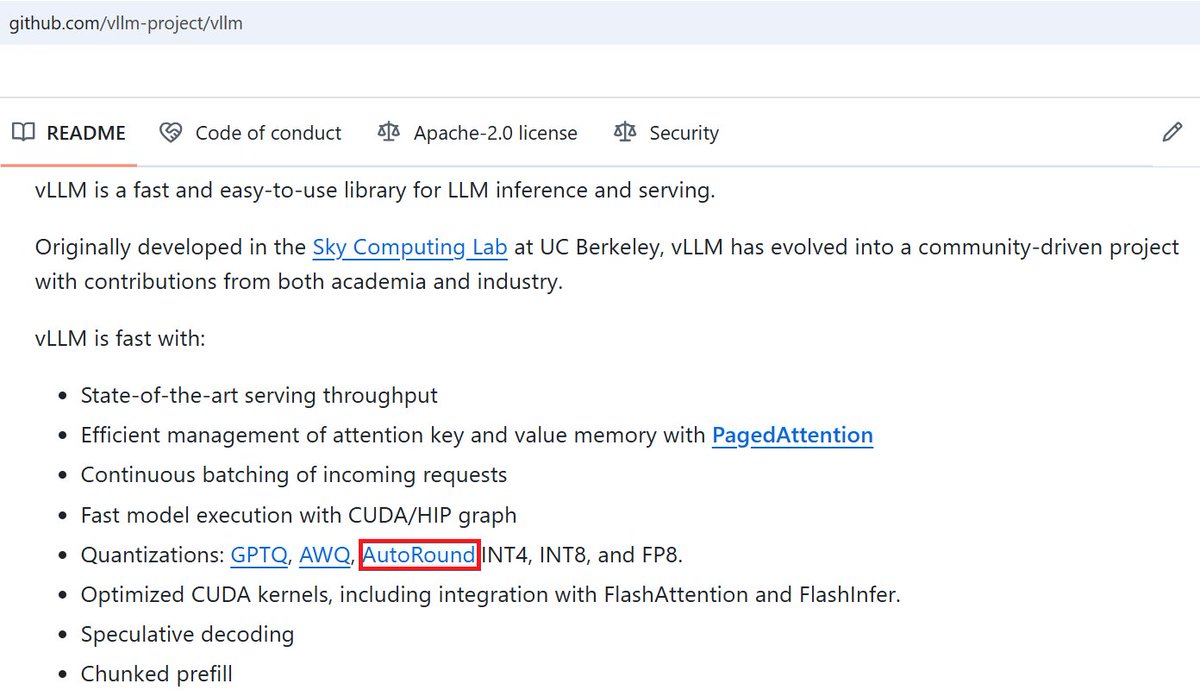

🔥AutoRound, a leading low-precision library for LLMs/LVMs developed by Intel, officially landed on Hugging Face transformers. Congrats to Wenhua, Weiwei, Heng! Thanks to Ilyas, Marc, Mohamed from HF team! github.com/huggingface/tr… #intel clem 🤗 Julien Chaumond

Congrats Junyang Lin and Qwen team! Really excited to have #intel Neural Compressor (github.com/intel/neural-c…) be part of SW partners for day0 launch! Cheers 🍻