Hamed Shirzad

@hamedshirzad13

PhD student @UBC_CS

Interested in Machine Learning on Graphs

ID: 1550221567174488064

https://www.hamedshirzad.com/ 21-07-2022 20:50:08

105 Tweets

182 Followers

247 Following

Can’t attend ICLR 🇸🇬 due to visa issues, but Chandan Reddy will give the oral presentation of *LLM-SR* on Friday 👇🏻 + see our new preprint on benchmarking the capabilities of LLMs for scientific equation discovery, *LLM-SRBench*: arxiv.org/abs/2504.10415

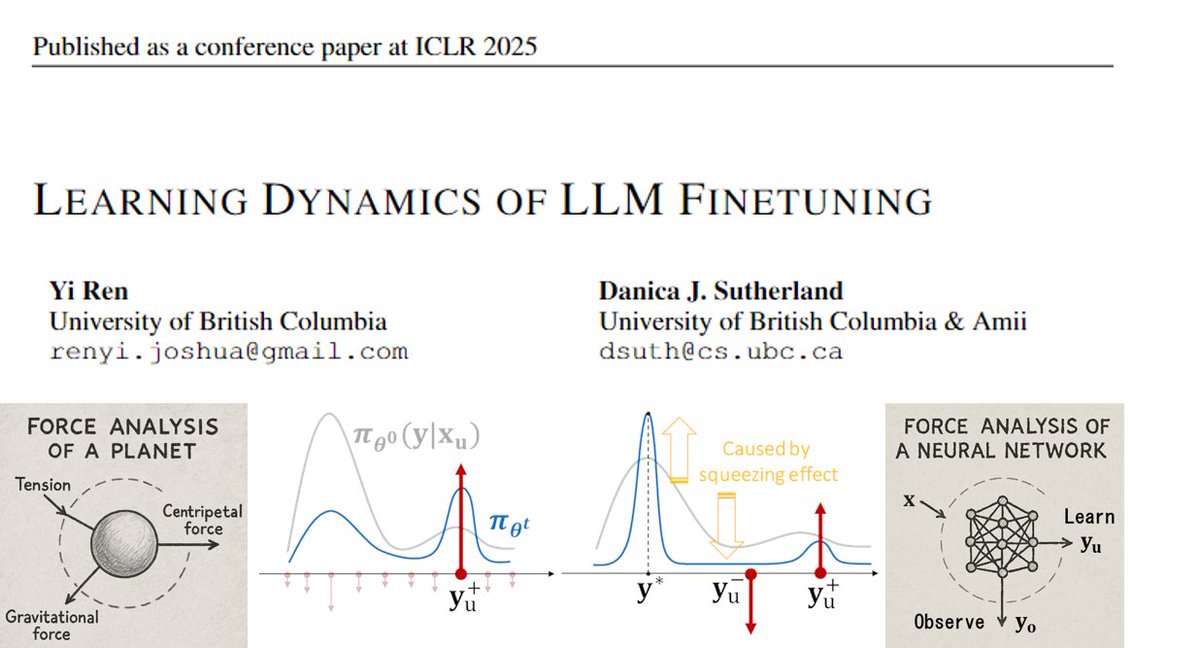

Huge congrats to my labmate Yi (Joshua) Ren and my supervisor Danica Sutherland for receiving an Outstanding Paper Award at ICLR 2025 for their work on Learning Dynamics of LLM Finetuning! So proud to see their amazing research recognized 👏🔥

Thank you Graph Signal Processing Workshop 2025 for the opportunity to give the morning keynote! It was a pleasure to present my work on Exphormer and Spexphormer models to such an insightful audience.

Great attending ICML this year, really enjoyed connecting with the graph learning community! Thanks a lot to Christopher Morris for organizing the lovely dinner :)