Harry Booth

@harrybooth59643

Reporter at TIME

ID: 1797714448683020288

03-06-2024 19:38:26

75 Tweets

181 Followers

112 Following

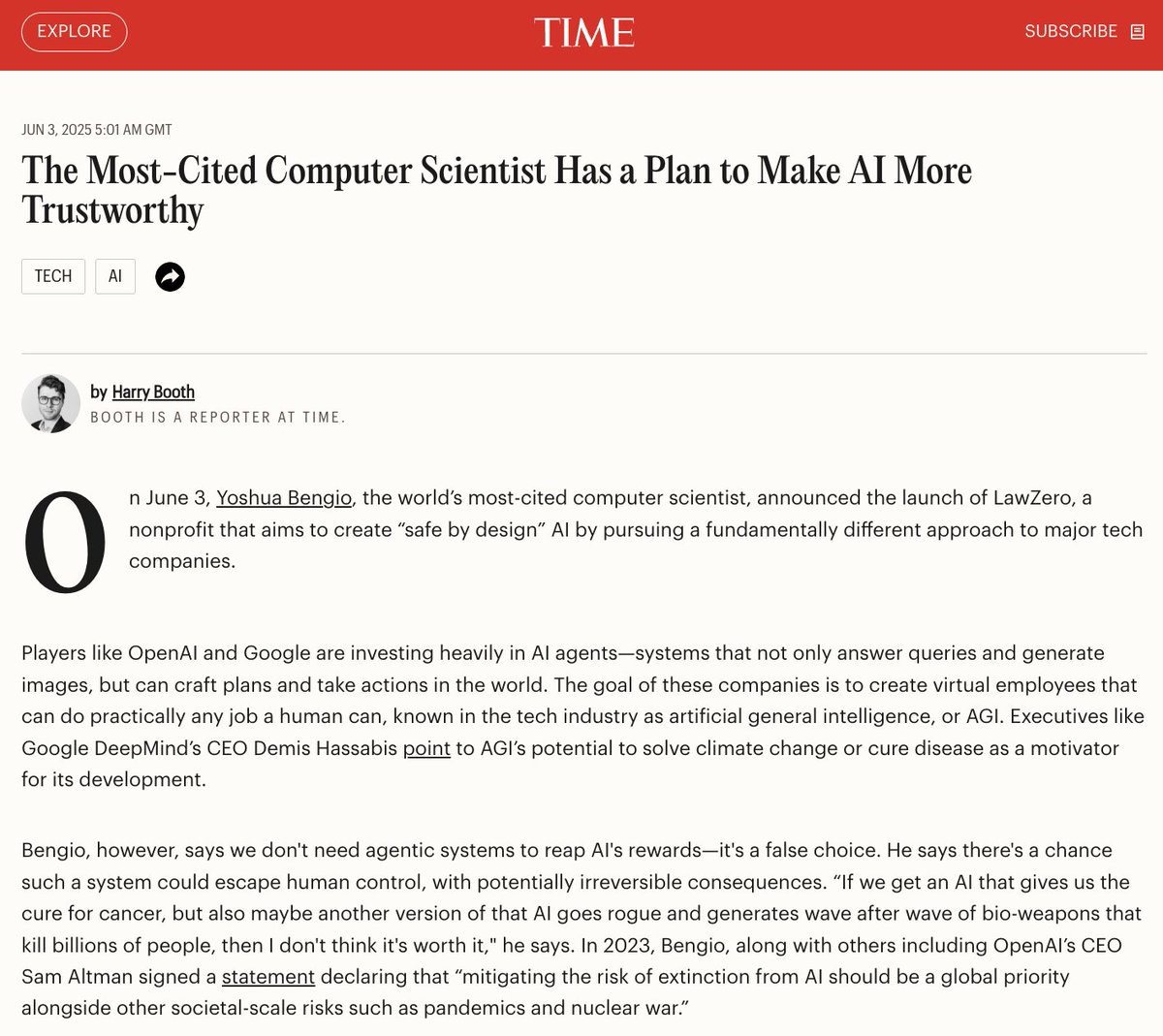

"If we get an AI that gives us the cure for cancer, but also maybe another version of that AI goes rogue and generates wave after wave of bio-weapons that kill billions of people, then I don't think it's worth it" - Yoshua Bengio

Bengio argues that we don't need agentic AIs to

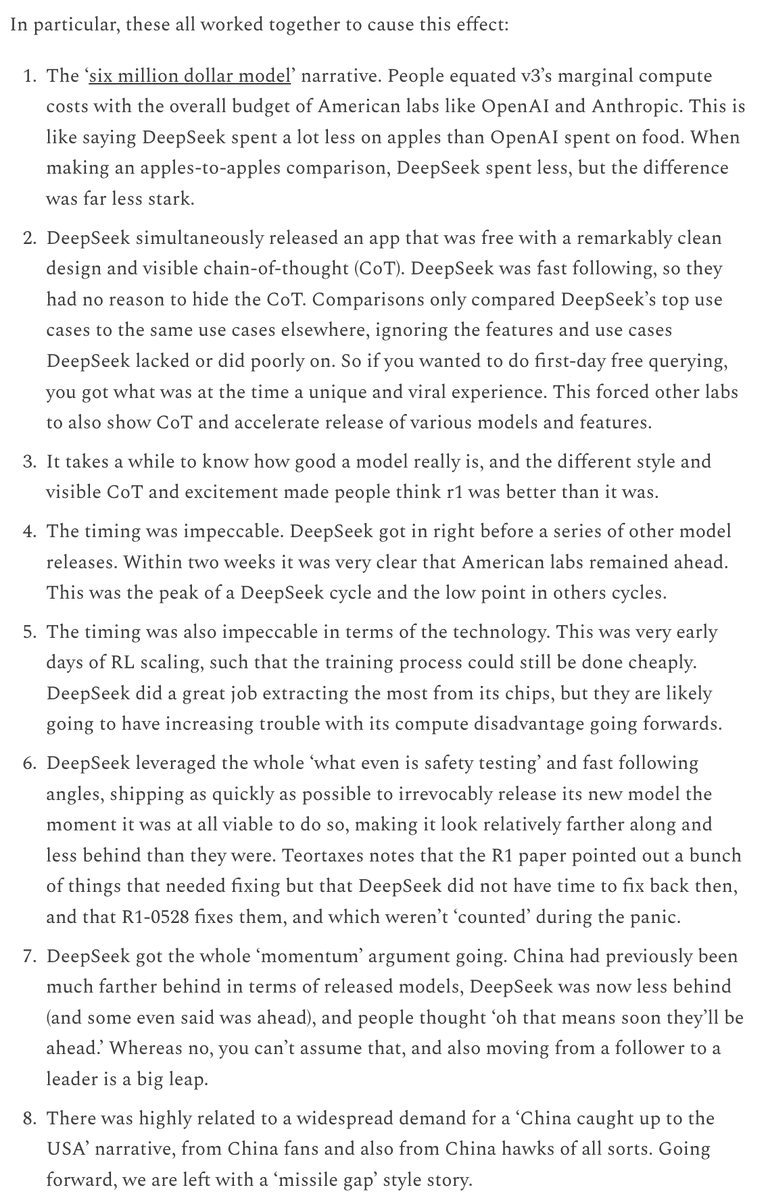

The dominant narrative of DeepSeek was importantly wrong. Zvi Mowshowitz does a great job of capturing the reasons why the DeepSeek moment happened the way it did.