Hossein Kashiani

@hossein_serein

PhD student. Interested in Computer Vision.

ID: 1268271506733309958

03-06-2020 20:01:46

164 Tweets

128 Followers

2.2K Following

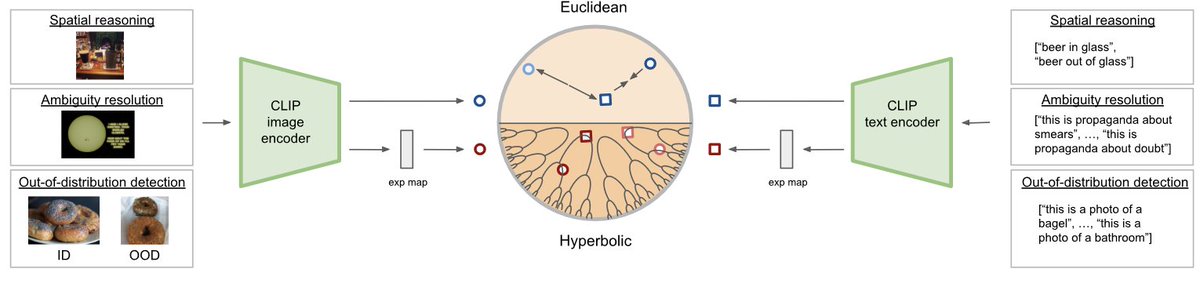

Vision-language models benefit from hyperbolic embeddings on standard tasks, but did you know that hyperbolic vision-language models also have surprising properties? Our new #TMLR paper shows three intriguing properties. w/ Sarah Ibrahimi, Mina Ghadimi, @nannevn, and Marcel Worring

Happy New Year! To kick off the year, I've finally been able to format and upload the draft of my AI Research Highlights of 2024 article. It covers a variety of topics, from mixture-of-experts models to new LLM scaling laws for precision: magazine.sebastianraschka.com/p/ai-research-…

Is DPO better than PPO? What are the key ingredients for properly training LLMs with RLHF? How can they be trained efficiently? Yi Wu from Tsinghua University gave an excellent talk on Effective RL training for LLMs at CMU LTI before #NeurIPS2024. youtube.com/watch?v=T1SeqB… Language Technologies Institute | @CarnegieMellon

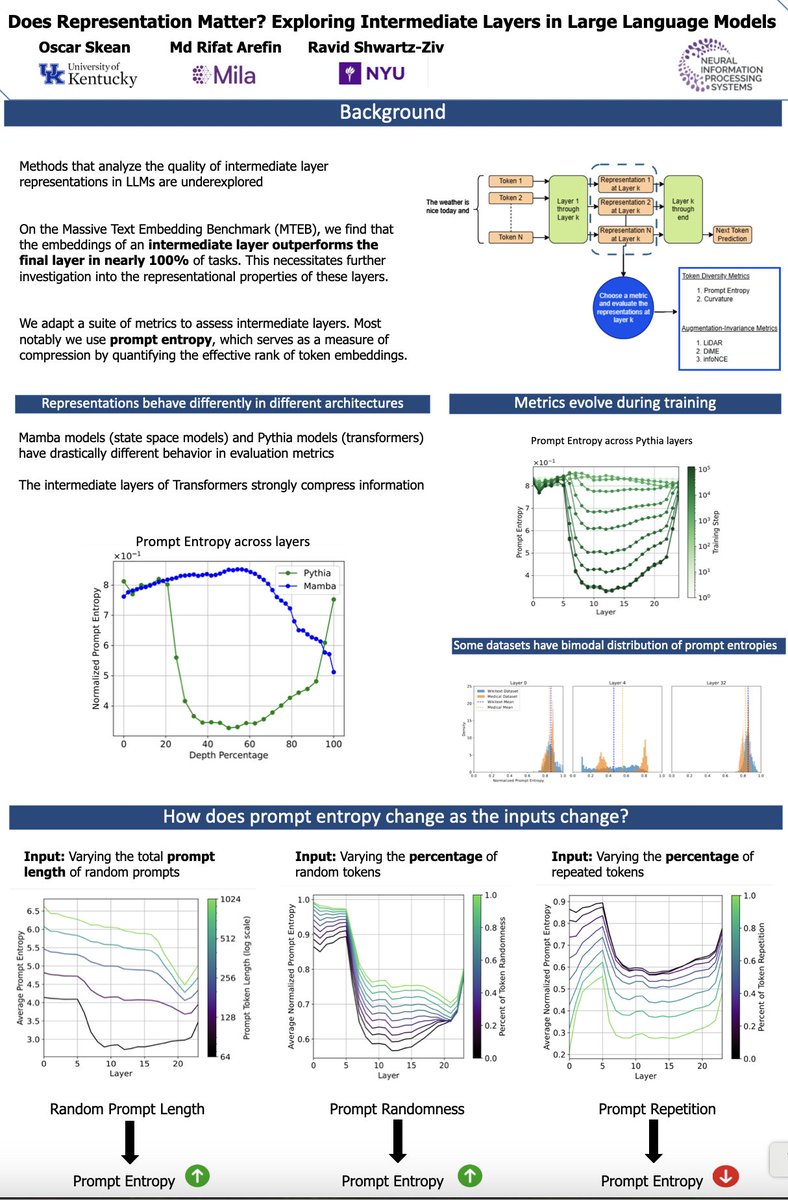

Recent work by Md Rifat Arefin, Oscar Skean, and CDS' Ravid Shwartz Ziv and Yann LeCun shows that intermediate layers in LLMs often outperform the final layer on downstream tasks. Using information theory and geometric analysis, they explain why this happens and how it affects models. nyudatascience.medium.com/middle-layers-…