Hy Dang

@hydang99

PhD Student @ Uni. of Notre Dame

ID: 1568508746590031877

10-09-2022 07:57:07

57 Tweets

24 Followers

68 Following

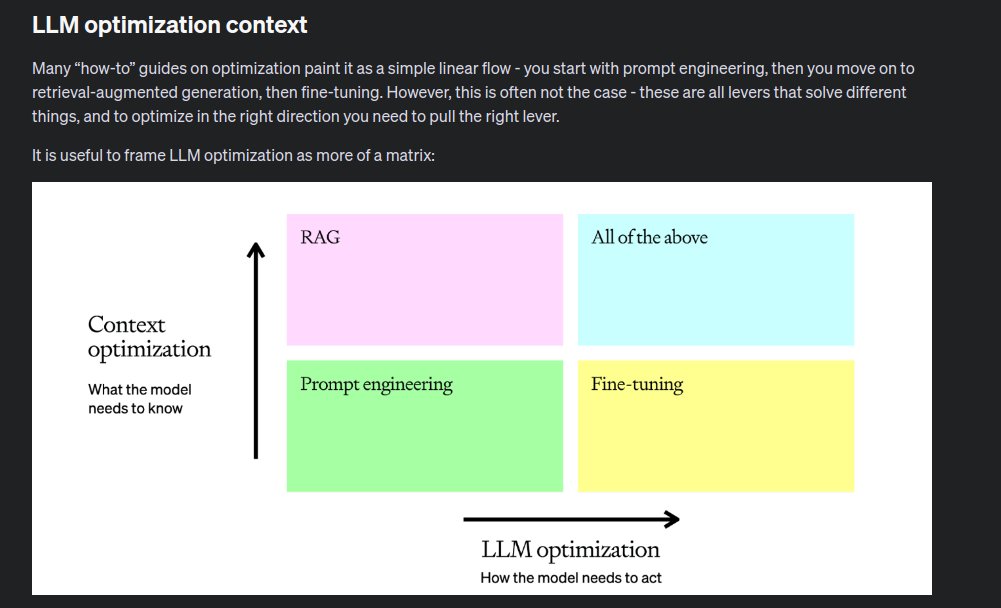

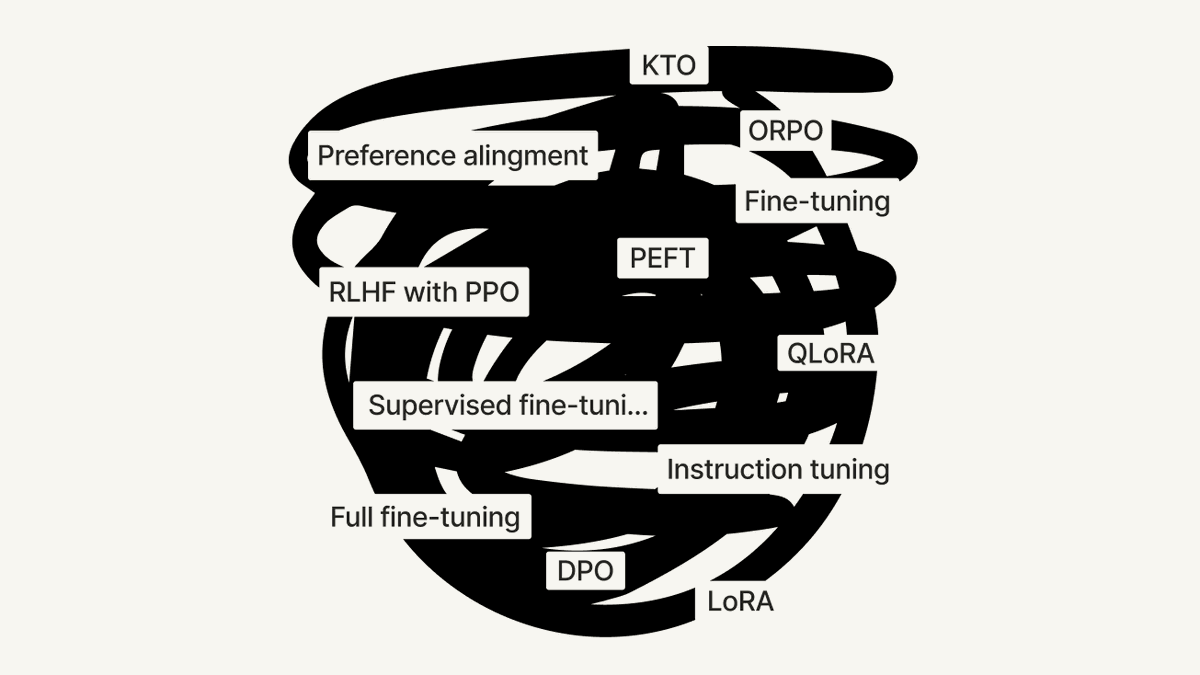

Over the recent weeks, an epic collaboration among some of the best practitioners in this space has brought us a three-part series of "What We Learned from a Year of Building with LLMs" on O'Reilly Media! In this three-part series, Eugene Yan, Bryan Bischof fka Dr. Donut, Charles 🎉 Frye,

🚨 Happy to say that I'll be presenting our work (w/ Sean MacAvaney & Debasis Ganguly) "Top-Down Partitioning for Efficient List-Wise Ranking" at the ReNeuIR Workshop @ SIGIR 2024 in Washington! Here's a pre-print, with updates coming soon: arxiv.org/abs/2405.14589 #SIGIR2024

![Kalyan KS (@kalyan_kpl) on Twitter photo Top RAG Papers of the Week

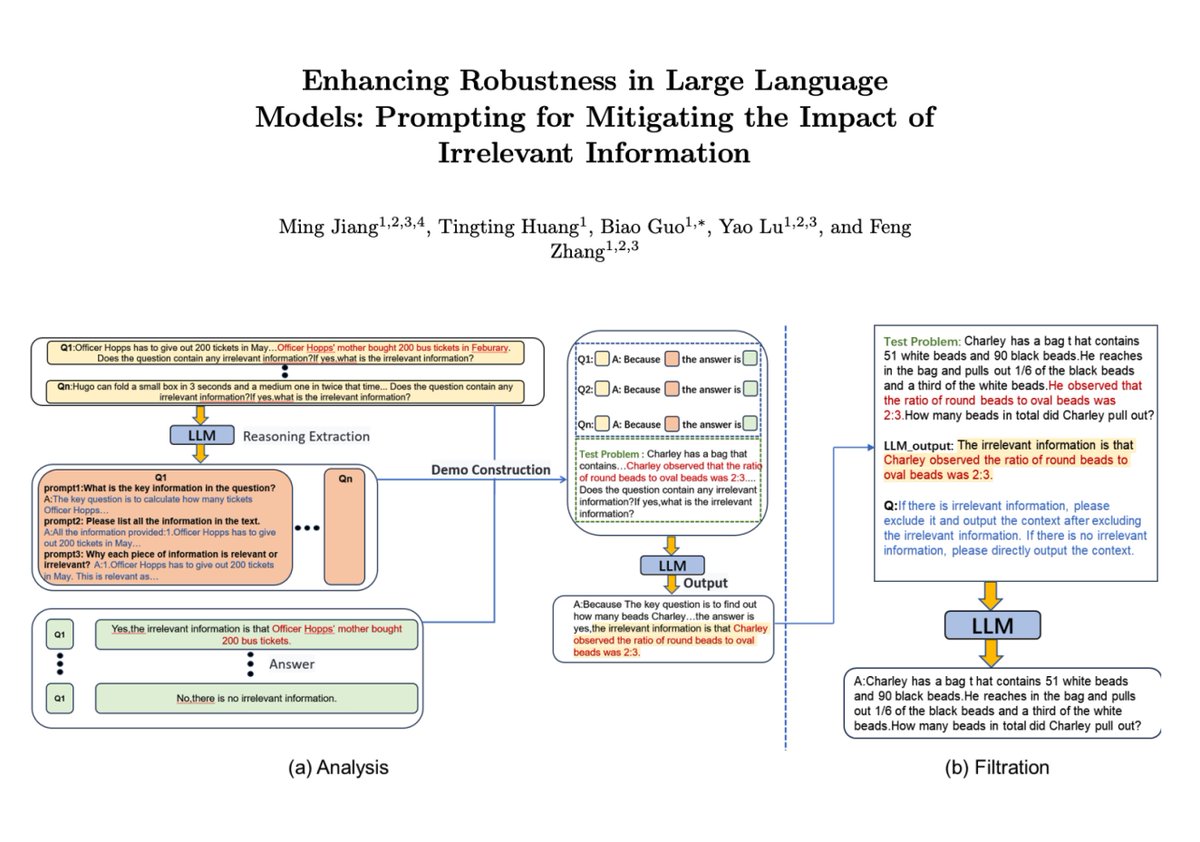

[1] Meta Knowledge for Retrieval Augmented Large Language Models

[2] RAGLAB: A Modular and Research-Oriented Unified Framework for Retrieval-Augmented Generation

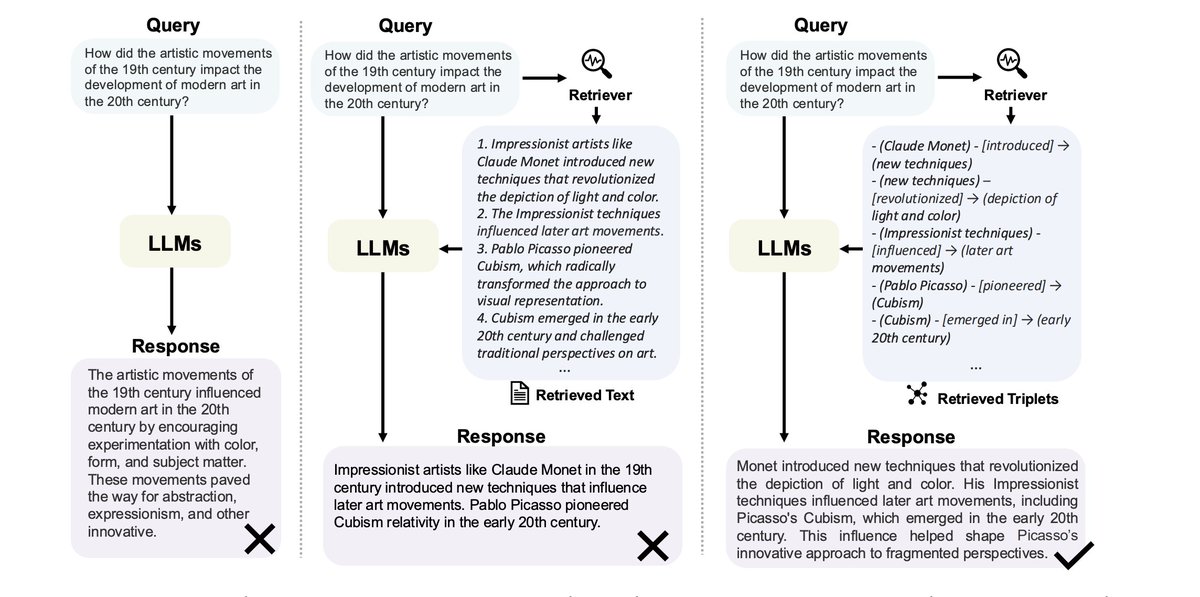

[3] Graph Retrieval-Augmented Generation: A Survey

[4] CommunityKG-RAG: Leveraging Top RAG Papers of the Week

[1] Meta Knowledge for Retrieval Augmented Large Language Models

[2] RAGLAB: A Modular and Research-Oriented Unified Framework for Retrieval-Augmented Generation

[3] Graph Retrieval-Augmented Generation: A Survey

[4] CommunityKG-RAG: Leveraging](https://pbs.twimg.com/media/GV6o7qZa0AE-hkf.jpg)