Ioannis Kakogeorgiou

@ioanniskakogeo1

I am a Postdoctoral Researcher at Archimedes AI. My research focuses on deep learning in computer vision and remote sensing.

ID: 1438464792474361856

https://scholar.google.com/citations?user=B_dKcz4AAAAJ

16-09-2021 11:28:57

77 Tweets

168 Followers

291 Following

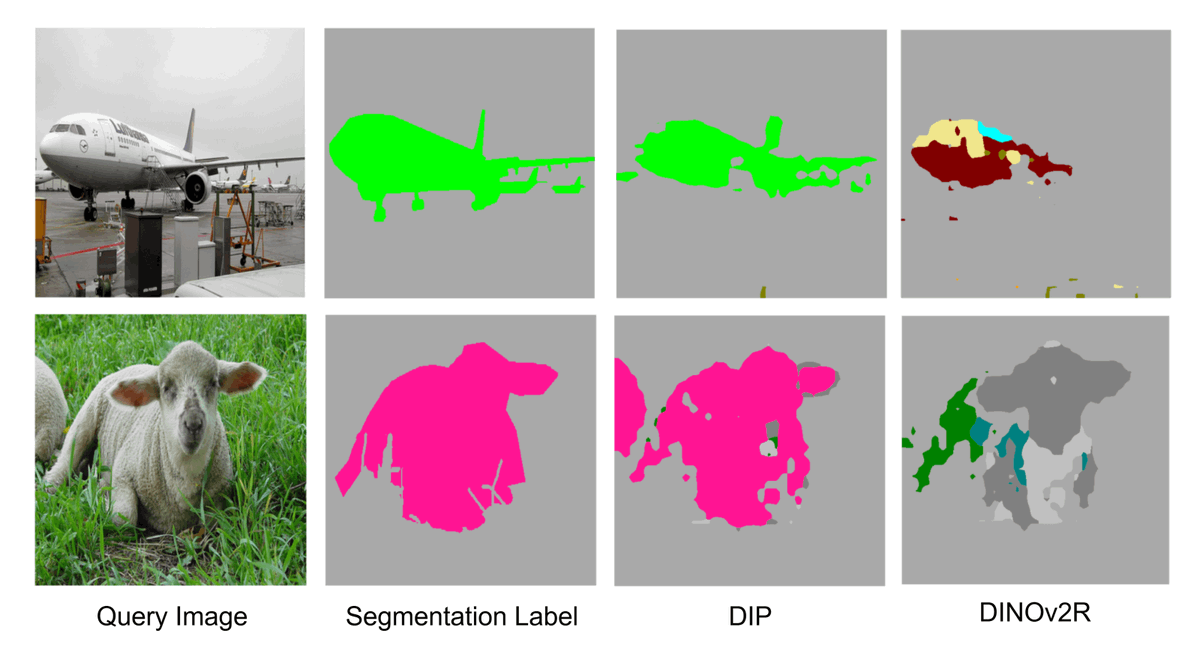

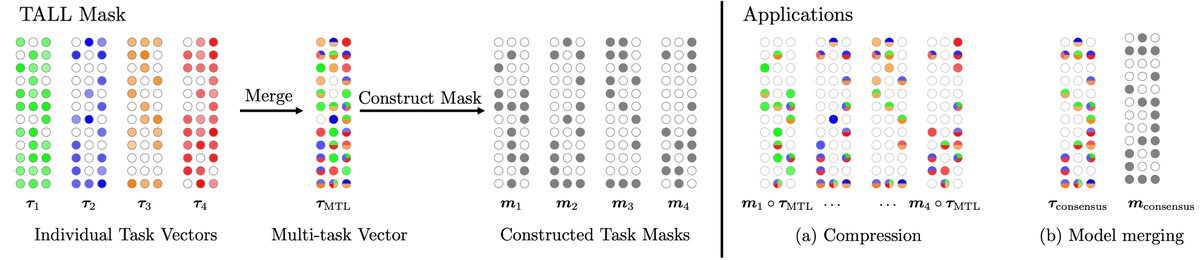

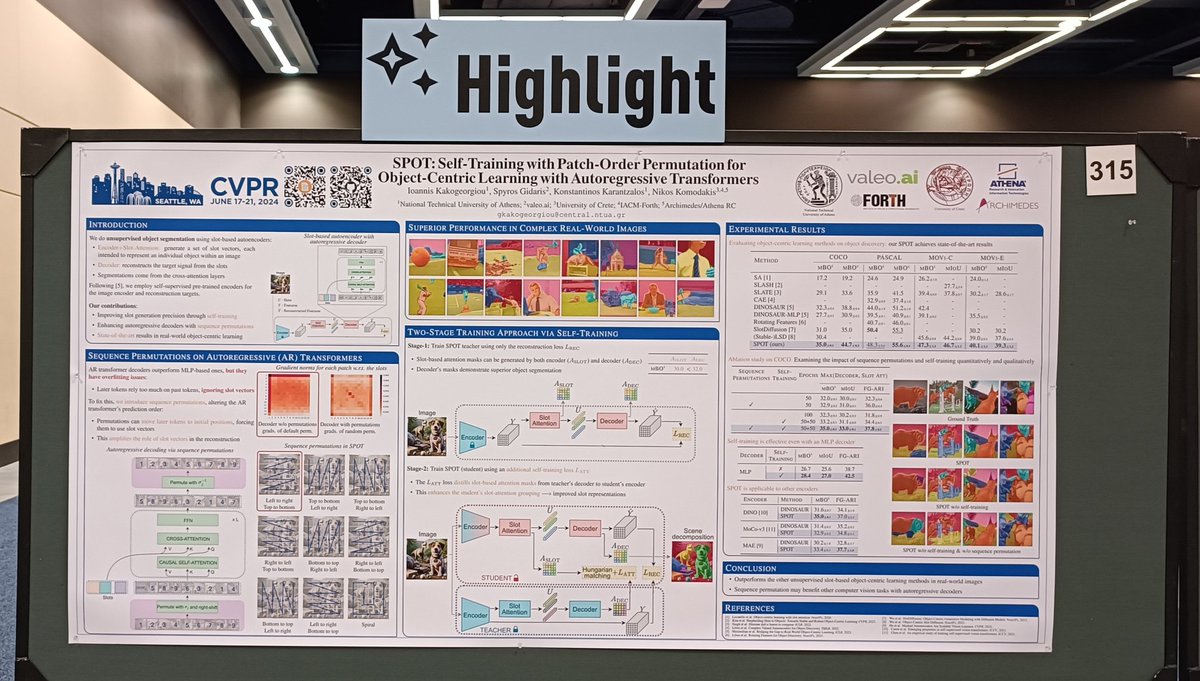

SPOT: Self-Training with Patch-Order Permutation for Object-Centric Learning with Autoregressive Transformers, by @IoannisKakogeo1, @SpyrosGidaris, tsiou.karank, and N. Komodakis. tl;dr: improve slot-based autoencoders with self-training and patch-order permutations. #CVPR2024 x.com/IoannisKakogeo…

Day 2 of #IGARSS2024 #Summerschool starts today! From Data to Application 📊➡️📱 Today we start with an in-depth session on machine learning for Earth Observation by tsiou.karank, Bill Psomas, and Ioannis Kakogeorgiou 🌍🤖 Let's dive in! 🚀💻 #RemoteSensing #Athens #machinelearning

🌊 Exciting news! Our AI framework for tracking global marine pollution, including debris & oil spills, is featured on #NVIDIA's blog! 🚀🌍 By using deep learning & satellite imagery, we boost ocean cleanup efforts. 🌐💡 Read more: developer.nvidia.com/blog/high-tech… #AI NVIDIA AI Developer

Thanks NVIDIA AI Developer for featuring our work on marine pollution detection! 🌊🐬 Excited to leverage advanced AI for a cleaner ocean.

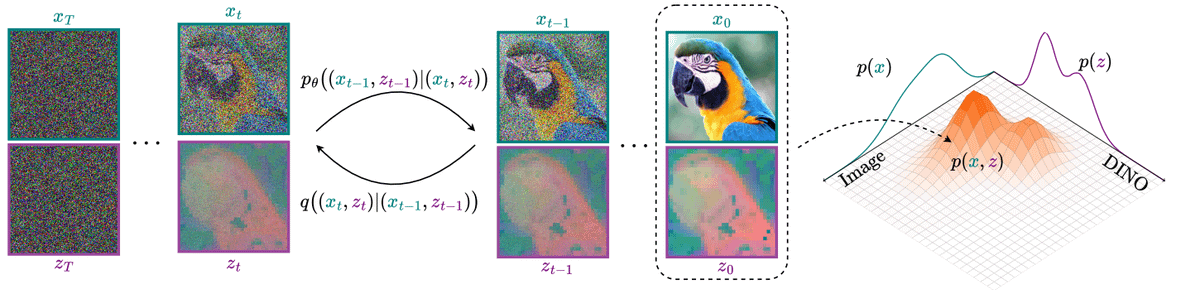

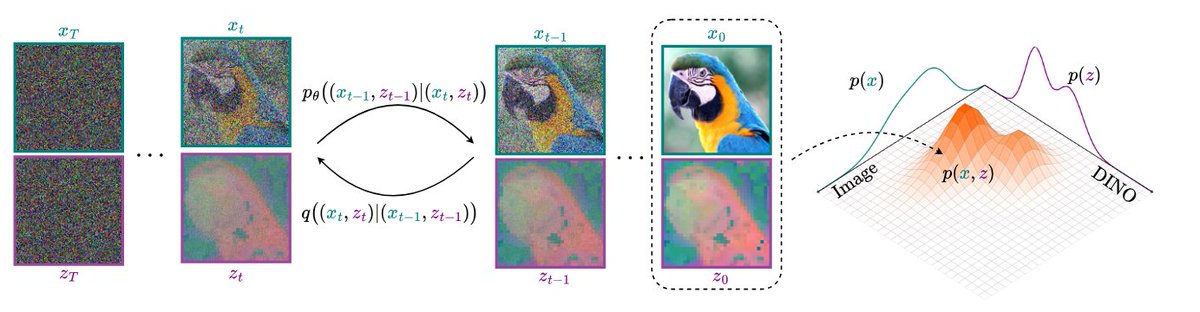

EQ-VAE: such a simple & cool trick to regularize multiple kinds of autoencoders: align the reconstructions of transformed latents with the corresponding transformed inputs.

🚀 REPA: 4x training speedup

🚀 MaskGIT: 2x training speedup

🚀 DiT-XL/2: 7x faster convergence

Kudos to Thodoris Kouzelis et al.
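The one-line description above ("align reconstruction of transformed latents with the corresponding transformed inputs") can be read as a loss of the form ||D(τ(E(x))) − τ(x)||². Below is a minimal sketch of that term under loud assumptions: the encoder `encode` is a hypothetical 2x2 average pooling, the decoder `decode` is a hypothetical nearest-neighbour upsampling, and τ is a 90° rotation. None of these are taken from the EQ-VAE paper; its actual transforms, architectures, and loss weighting are not described in this tweet.

```python
import numpy as np


def encode(x):
    # Hypothetical toy encoder (assumption): 2x2 average pooling; H and W must be even.
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def decode(z):
    # Hypothetical toy decoder (assumption): nearest-neighbour 2x upsampling.
    return z.repeat(2, axis=0).repeat(2, axis=1)


def rotate90(a):
    # Spatial transform tau (assumption), applied to both latents and inputs.
    return np.rot90(a)


def equivariance_loss(x, transform=rotate90):
    # Decode the *transformed latent* and compare it with the *transformed input*:
    # mean over pixels of (D(tau(E(x))) - tau(x))^2.
    recon = decode(transform(encode(x)))
    target = transform(x)
    return float(np.mean((recon - target) ** 2))


def reconstruction_loss(x):
    # Plain autoencoder term, mean of (D(E(x)) - x)^2, for comparison.
    return float(np.mean((decode(encode(x)) - x) ** 2))


if __name__ == "__main__":
    x = np.random.default_rng(0).standard_normal((8, 8))
    print(equivariance_loss(x), reconstruction_loss(x))
```

In this toy pair, pooling and upsampling already commute with rotation, so both losses coincide. A learned encoder/decoder generally does not commute with τ, and that mismatch is what such a regularizer would penalize.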

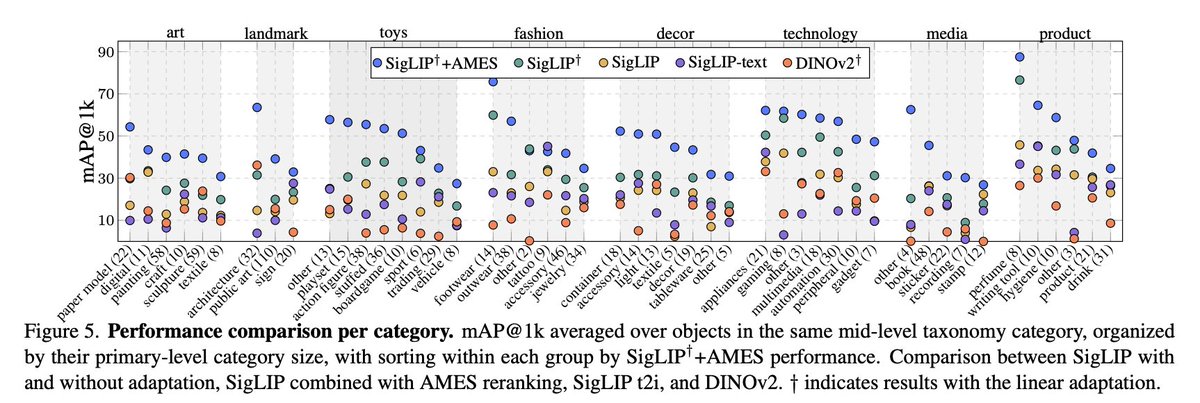

ILIAS: Instance-Level Image retrieval At Scale, by Giorgos Kordopatis-Zilos, Vladan Stojnić, Anna Manko, Pavel Šuma, Nikolaos-Antonios Ypsilantis, Nikos Efthymiadis, Zakaria Laskar, Jiří Matas, Ondřej Chum, and Giorgos Tolias. tl;dr: a new retrieval dataset with guaranteed ground truth. SigLIP rules. arxiv.org/abs/2502.11748 1/

EQ-VAE is accepted at #ICML2025 😁. Grateful to my co-authors for their guidance and collaboration! Ioannis Kakogeorgiou, Spyros Gidaris, Nikos Komodakis.

valeo.ai (@valeoai): 🚗 Ever wondered if an AI model could learn to drive just by watching YouTube? 🎥👀

We trained a 1.2B-parameter model on 1,800+ hours of raw driving videos.

No labels. No maps. Just pure observation.

And it works! 🤯

🧵👇 [1/10]

![valeo.ai (@valeoai) on Twitter photo](https://pbs.twimg.com/media/GkjVjoPXMAAQzMq.jpg)