Xu Cao

@irohxu

CS PhD Student @IllinoisCS; Co-founder of PediaMed AI. ML researcher.

ID: 1544844847693152257

https://www.irohxucao.com/ 07-07-2022 00:45:00

36 Tweets

83 Followers

126 Following

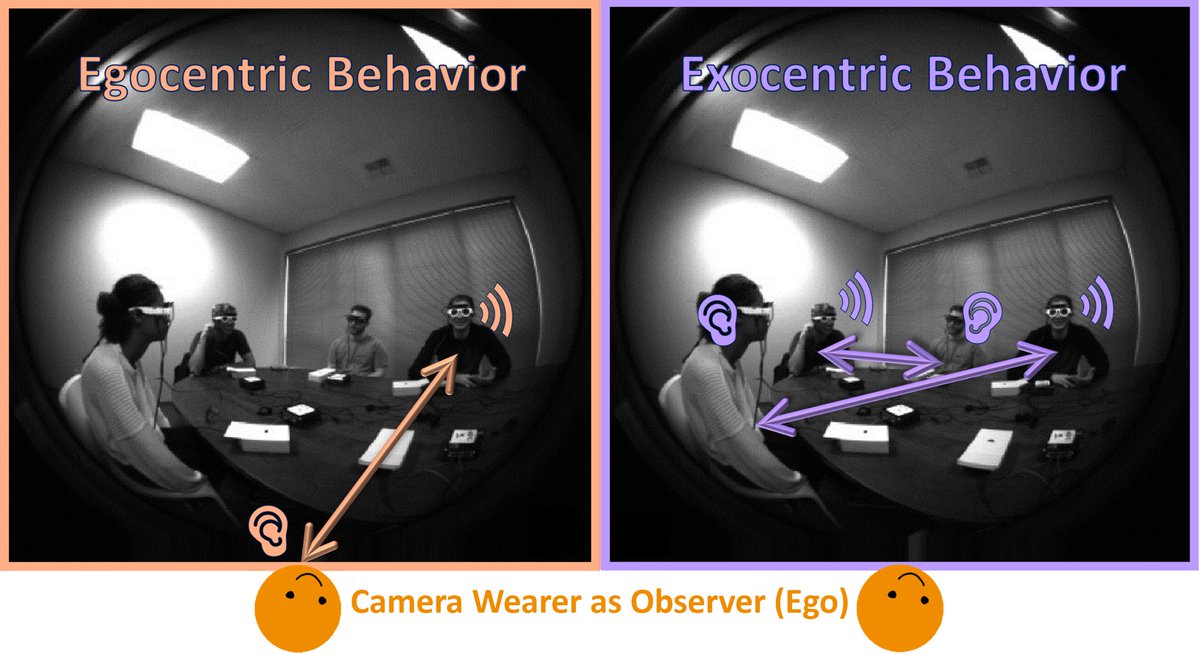

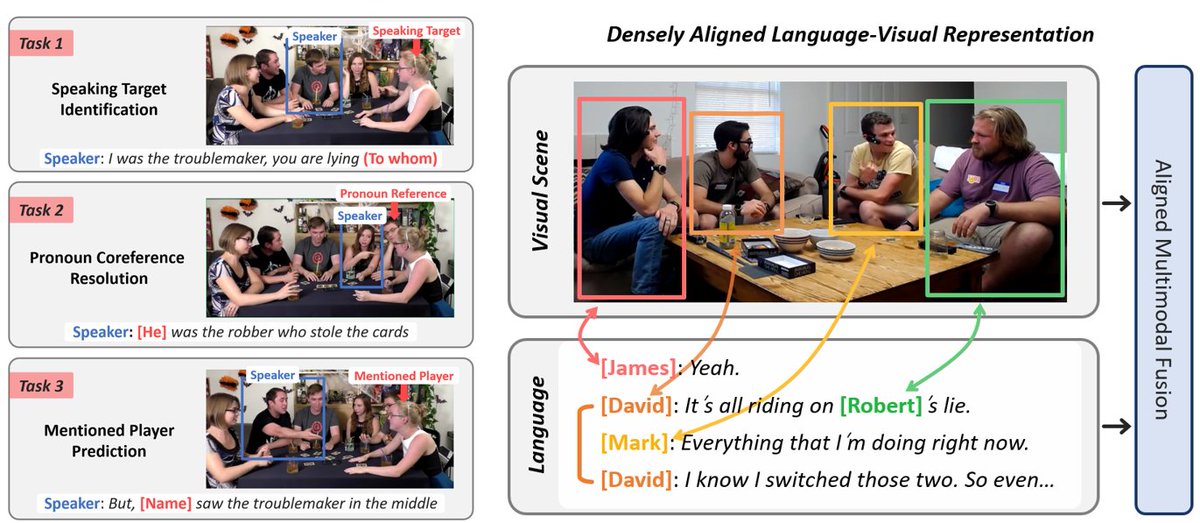

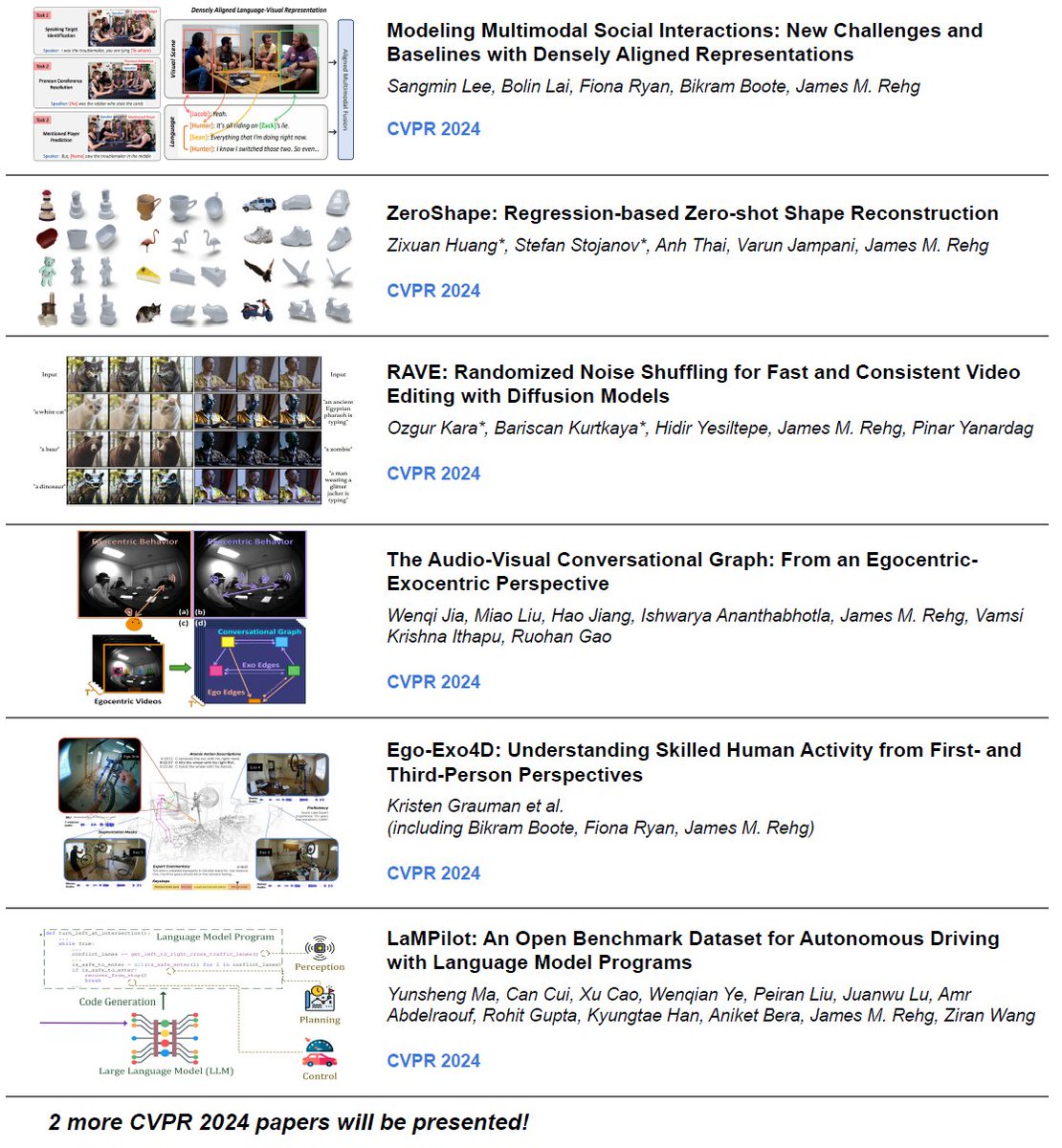

Delighted to share an overview of my lab's eight #cvpr 2024 papers. Thanks to my amazing postdoc Sangmin Lee for spearheading the effort and to our invaluable collaborators AI at Meta, Reality Labs at Meta, Stability AI, and Toyota USA. See you in Seattle! @IllinoisCS The Grainger College of Engineering

Come to #CVPR 2024 Poster #220 this morning to chat with our team about how to build Vision-Language Models for HD map and traffic scene understanding tasks. The updated version of our benchmark will serve as a new challenge dataset at the ITSC 2024 workshop. James Matthew Rehg

A delightful Sunday at #ICLR2025 in the Pediatric AI workshop (pediamedai.com/ai4chl/), listening to an exciting talk by Jason Alan Fries describing his work with Nigam Shah, Stanford Health Care, and others!