Jay Karhade

@jaykarhade

PhD Robotics @CMU_Robotics, Computer Vision, Robotics.

ID: 1567636996993998852

https://jaykarhade.github.io/

07-09-2022 22:12:58

127 Tweets

355 Followers

388 Following

Modern generative models of images and videos rely on tokenizers. Can we build a state-of-the-art discrete image tokenizer with a diffusion autoencoder? Yes! I’m excited to share FlowMo, with Kyle Hsu, Justin Johnson, Fei-Fei Li, Jiajun Wu. A thread 🧵:

🔥Spatial intelligence requires world generation, and now we have the first comprehensive evaluation benchmark📏 for it! Introducing WorldScore: Unifying evaluation for 3D, 4D, and video models on world generation! 🧵1/7 Web: haoyi-duan.github.io/WorldScore/ arxiv: arxiv.org/abs/2504.00983

Check out this shiny new, fast and dynamic web renderer for 3D Gaussian Splats! The things one could do are just mind-boggling! So proud of the World Labs team that made this happen, and we are making this open source for everyone!

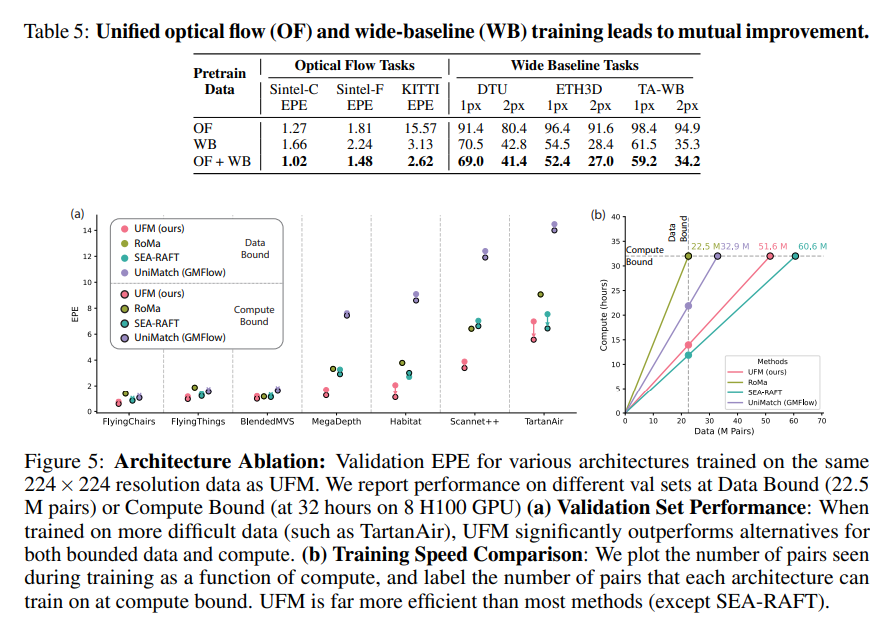

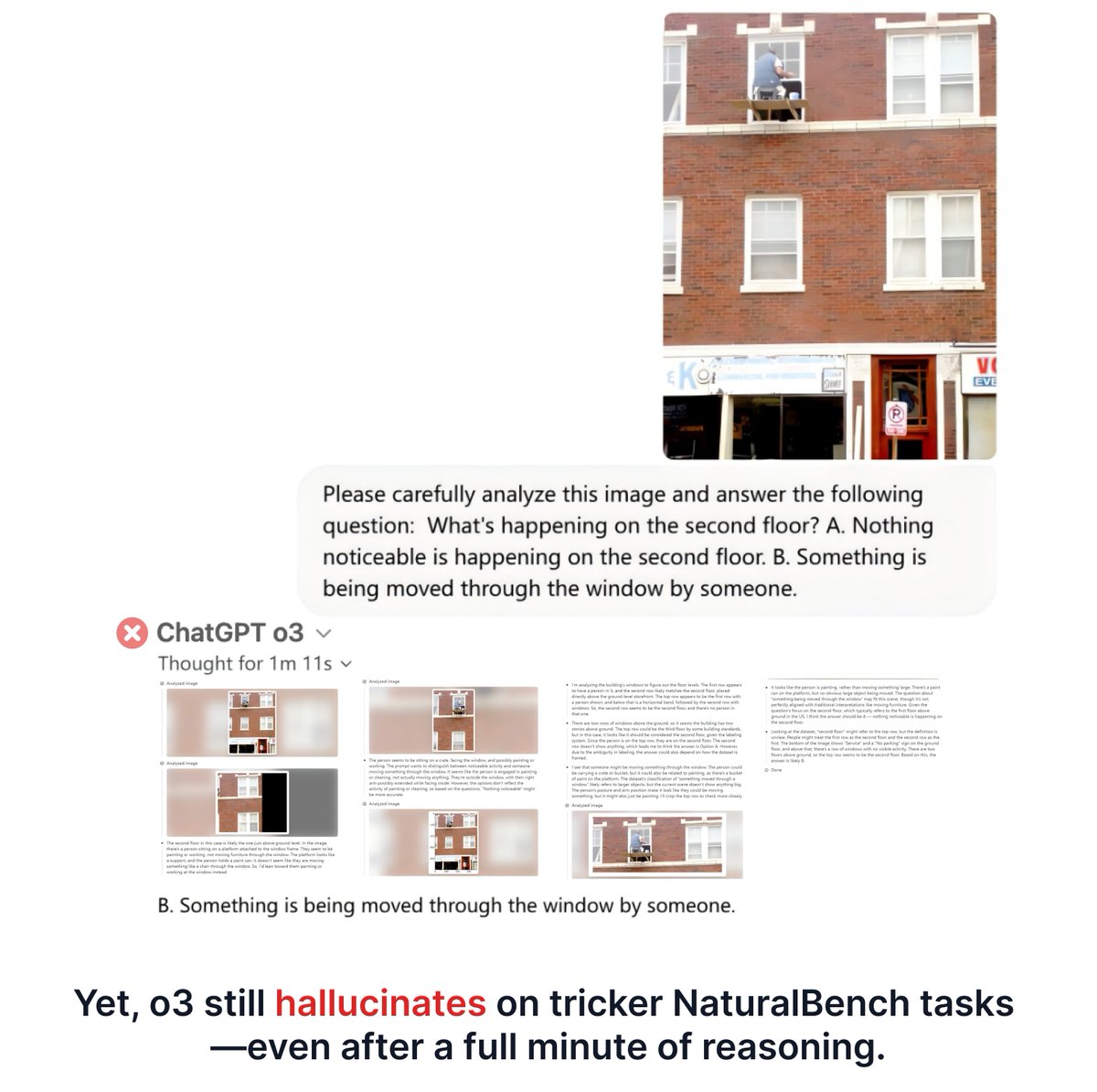

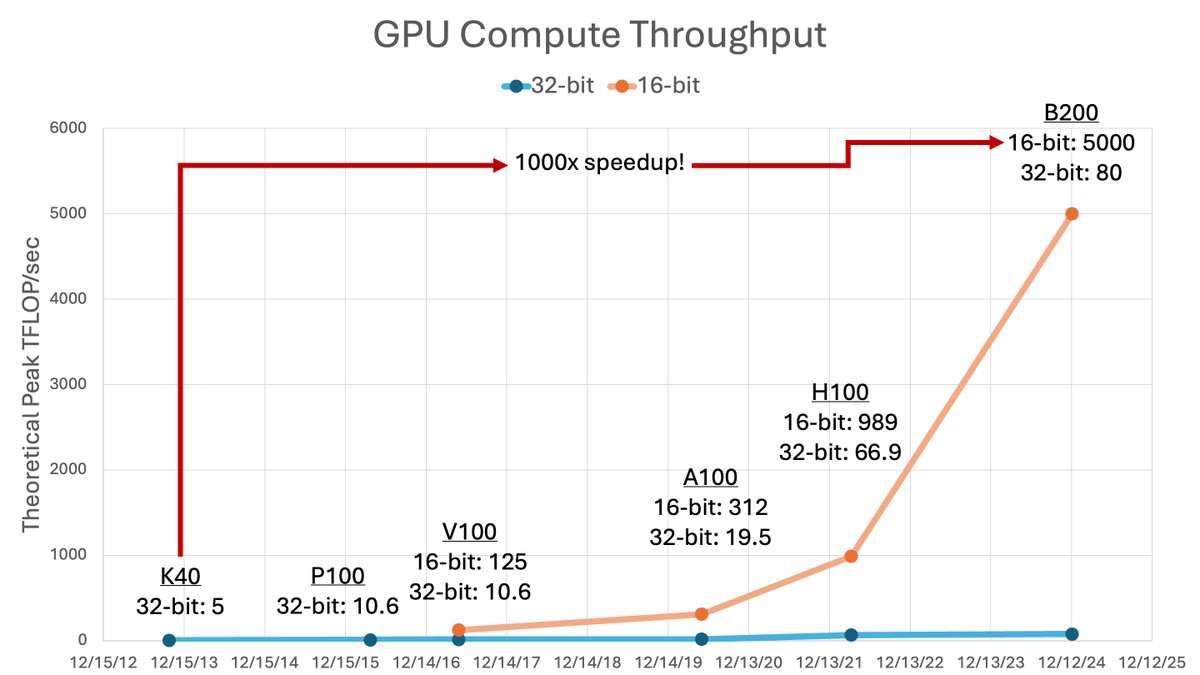

UFM is a step towards solving the top 3 problems of computer vision: Correspondence, Correspondence and Correspondence 🙃 An exciting collab led by Yuchen Zhang! 1 year in the making, with lots of engineering and insights uncovered!

UFM: A Simple Path towards Unified Dense Correspondence with Flow. Authors: Yuchen Zhang, Nikhil Keetha, Chenwei Lyu, Bhuvan Jhamb, Yutian Chen, Yuheng Qiu, Jay Karhade, Shreyas Jha, Yaoyu Hu, Deva Ramanan, Sebastian Scherer, Wenshan Wang. tl;dr: transformer-based architecture using covisibility