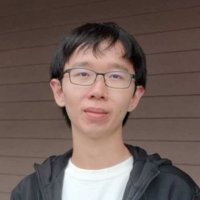

Jiarui Xu

@jerry_xu_jiarui

Final-year Ph.D. student at UC San Diego

Undergrad. from HKUST

ID: 745524777662582785

http://jerryxu.net 22-06-2016 07:52:13

89 Tweets

1.1K Followers

528 Following

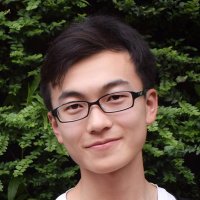

Thinking about a PhD? Don’t miss the chance to work with Elliott / Shangzhe Wu! He’s not only a brilliant researcher but also an inspiring mentor and collaborator. Excited to see the amazing projects his new team will bring to life! 🌟