Jiarui Zhang (Jerry)

@jiaruiz58876329

@USC CS Ph.D. student @CSatUSC | ex-intern @amazon | B.Eng. @Tsinghua_Uni | MLLM | Visual Perception | Reasoning | AI for Science

ID: 1559440854699237376

https://saccharomycetes.github.io/ 16-08-2022 07:24:14

47 Tweets

301 Followers

588 Following

Last month, I spoke at the Tianqiao & Chrissy Chen Institute × AGI House Parametric Memory Workshop, where I introduced mem0, an adaptive memory layer for AI agents. I presented real-world examples:

- Personalized Learning: Tracking each student’s mastery to tailor lessons without repetition

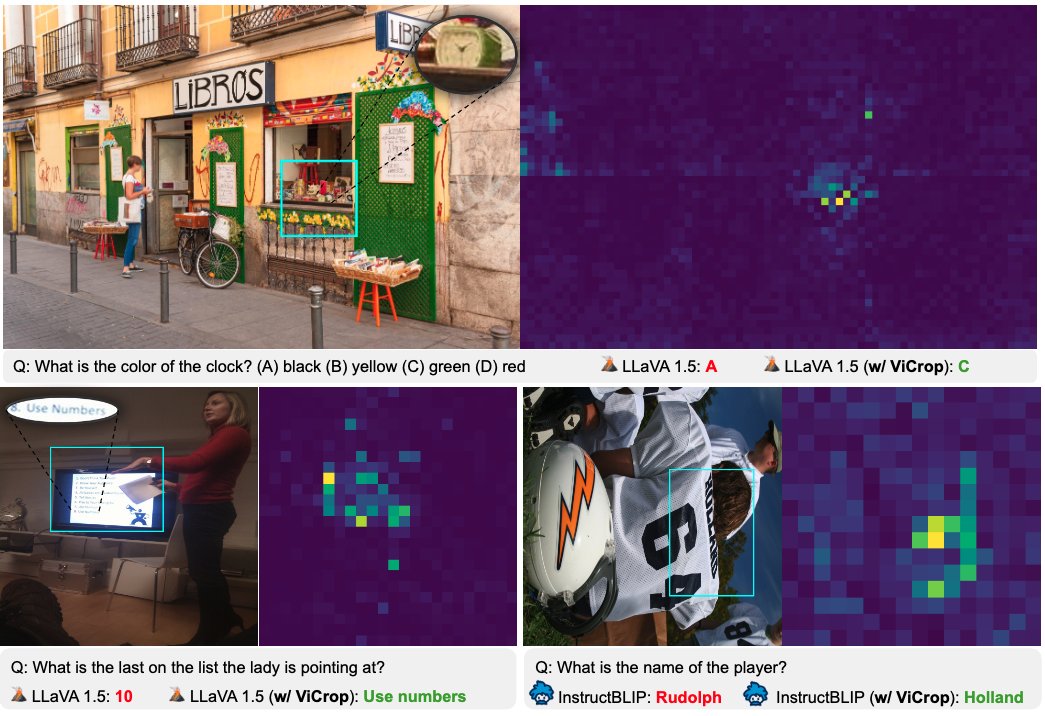

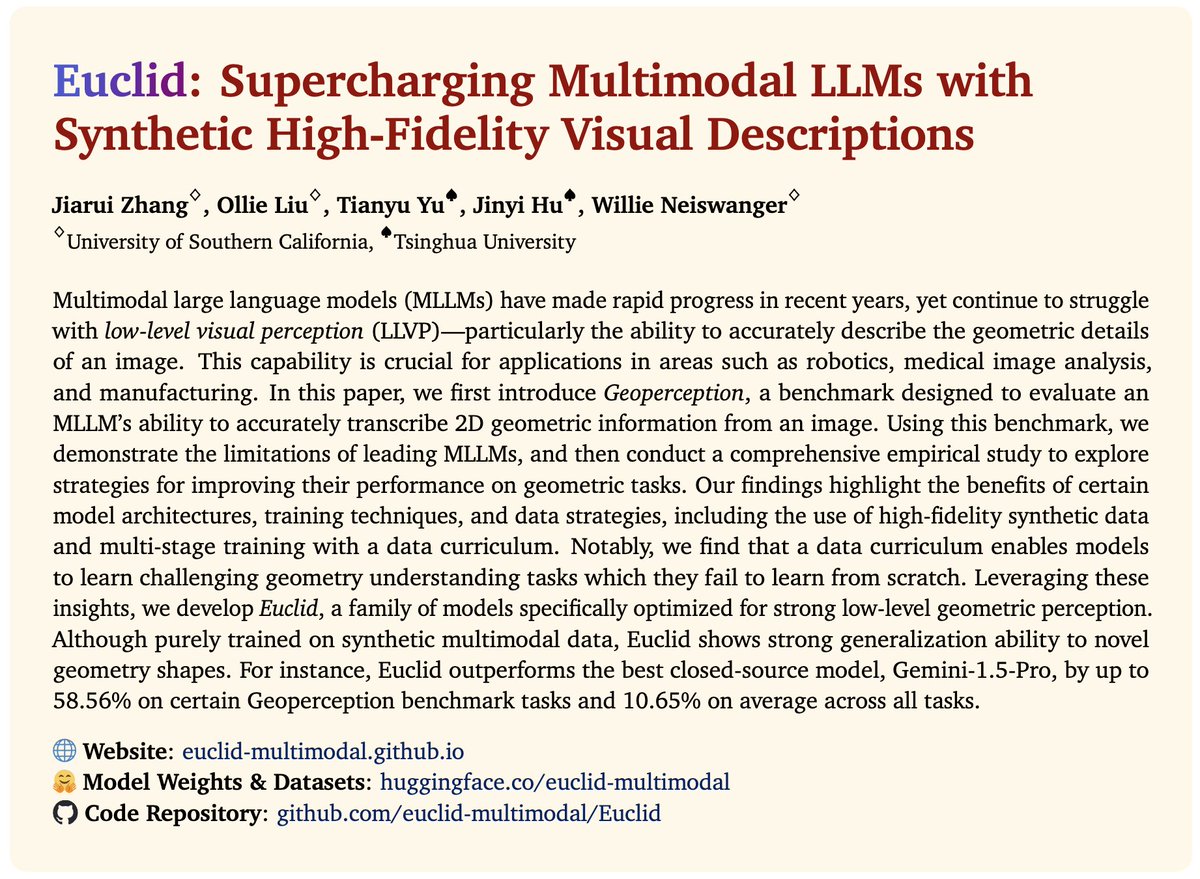

[1/11] Many recent studies have shown that current multimodal LLMs (MLLMs) struggle with low-level visual perception (LLVP): the ability to precisely describe the fine-grained/geometric details of an image.

How can we do better?

Introducing Euclid, our first study at improving

![Jiarui Zhang (Jerry) (@jiaruiz58876329) on Twitter photo](https://pbs.twimg.com/media/Gg8lAuxasAAv3oD.jpg)