Jin Zhou

@jinpzhou

Computer Science PhD at Cornell

ID: 1584374004017758208

https://www.linkedin.com/in/jinpeng-zhou/

24-10-2022 02:39:43

8 Tweets

96 Followers

88 Following

Can large language models write prompts…for themselves? Yes, at a human-level (!) if they are given the ability to experiment and see what works. arxiv.org/abs/2211.01910 with Yongchao Zhou, Andrei Muresanu, Ziwen, Silviu Pitis, Harris Chan, and Jimmy Ba (1/7)

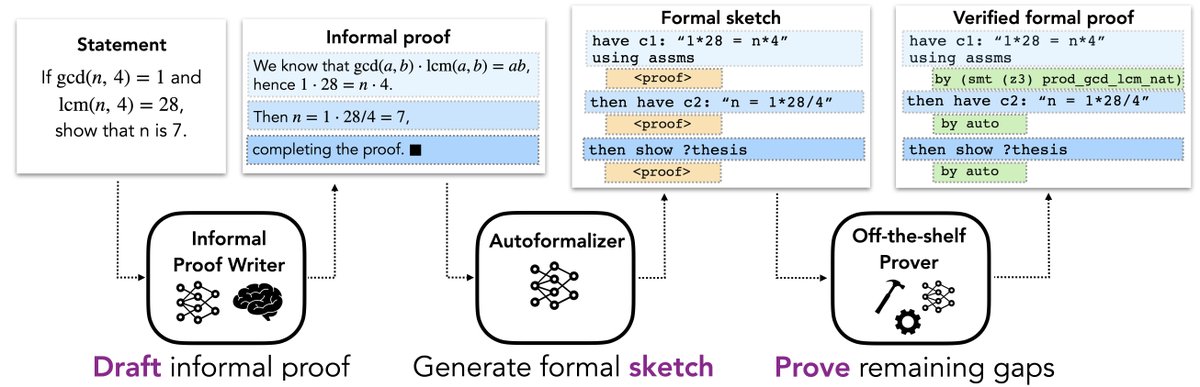

I’m presenting two papers on value-based RL for post-training & reasoning on Friday at AI for Math Workshop @ ICML 2025 at #ICML2025! 1️⃣ Q#: lays theoretical foundations for value-based RL for post-training LMs; 2️⃣ VGS: practical value-guided search scaled up for long CoT reasoning. 🧵👇