John Langford

@johnclangford

Solving Machine Learning at Microsoft in New York.

icml.cc pandemic past president.

vowpalwabbit.org makes RL real.

hunch.net for thinking out loud.


http://hunch.net/~jl 27-12-2019 16:21:13


New reqs for low to high level researcher positions: jobs.careers.microsoft.com/global/en/job/… , jobs.careers.microsoft.com/global/en/job/…, jobs.careers.microsoft.com/global/en/job/…, jobs.careers.microsoft.com/global/en/job/…, with postdocs from Akshay and Miro Dudik x.com/MiroDudik/stat… . Please apply or pass to those who may :-)

Last year, we had offers accepted from Kwangjun Ahn, Riashat Islam, Tim Pearce, and Pratyusha Sharma, while Akshay and Miro Dudik hired 7(!) postdocs.

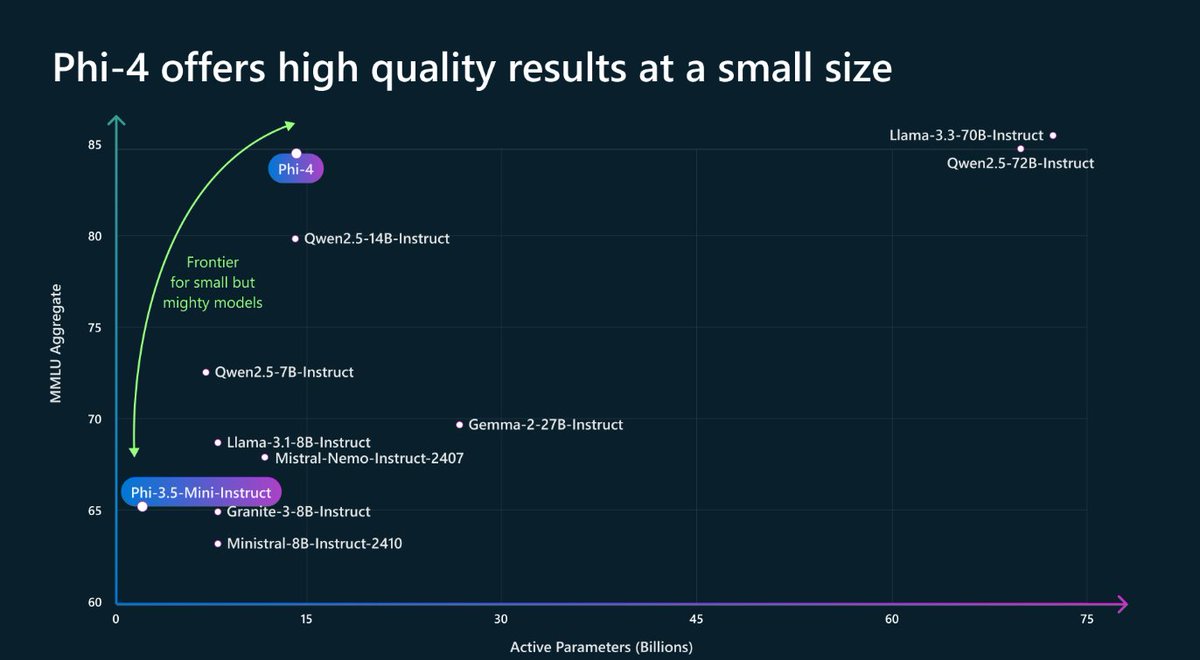

🚀 Phi-4 is here! It is a small language model that performs as well as (and often better than) large models on certain types of complex reasoning tasks, such as math. It is useful for us in Microsoft Research, and it is available now for all researchers on the Azure AI Foundry! aka.ms/phi4blog