Jonathan Hayase

@jonathanhayase

5th-year Machine Learning PhD student at UW CSE

ID: 1270435381872193537

https://jon.jon.ke

Joined 09-06-2020 19:27:31

22 Tweets

137 Followers

116 Following

Congratulations to Jonathan Hayase on winning the 2024 ICML Best Paper Award for his work during his Google internship.

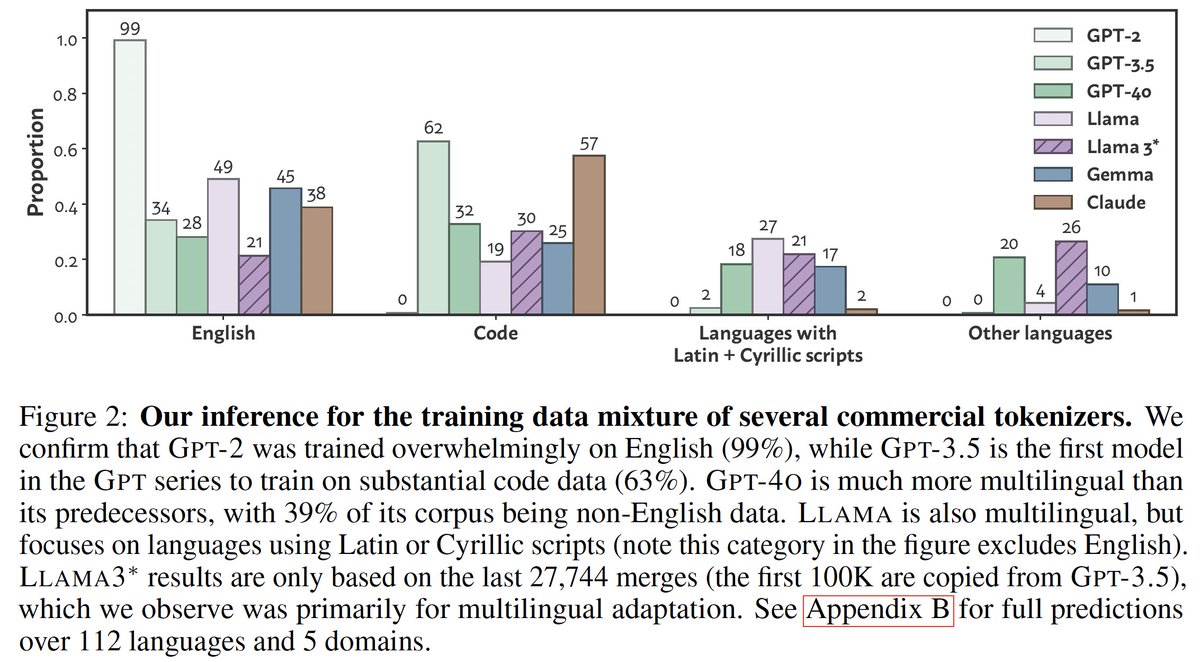

What do BPE tokenizers reveal about their training data?🧐 We develop an attack🗡️ that uncovers the training data mixtures📊 of commercial LLM tokenizers (incl. GPT-4o), using their ordered merge lists! Co-1⃣st Jonathan Hayase arxiv.org/abs/2407.16607 🧵⬇️

excited to be at #NeurIPS2024! I'll be presenting our data mixture inference attack 🗓️Thu 4:30pm w/ Jonathan Hayase — stop by to learn what trained tokenizers reveal about LLM development and chat about all things tokenizers.😊