joschkastrueber

@joschkastrueber

ID: 1887901452112384000

07-02-2025 16:29:46

14 Tweets

8 Followers

26 Following

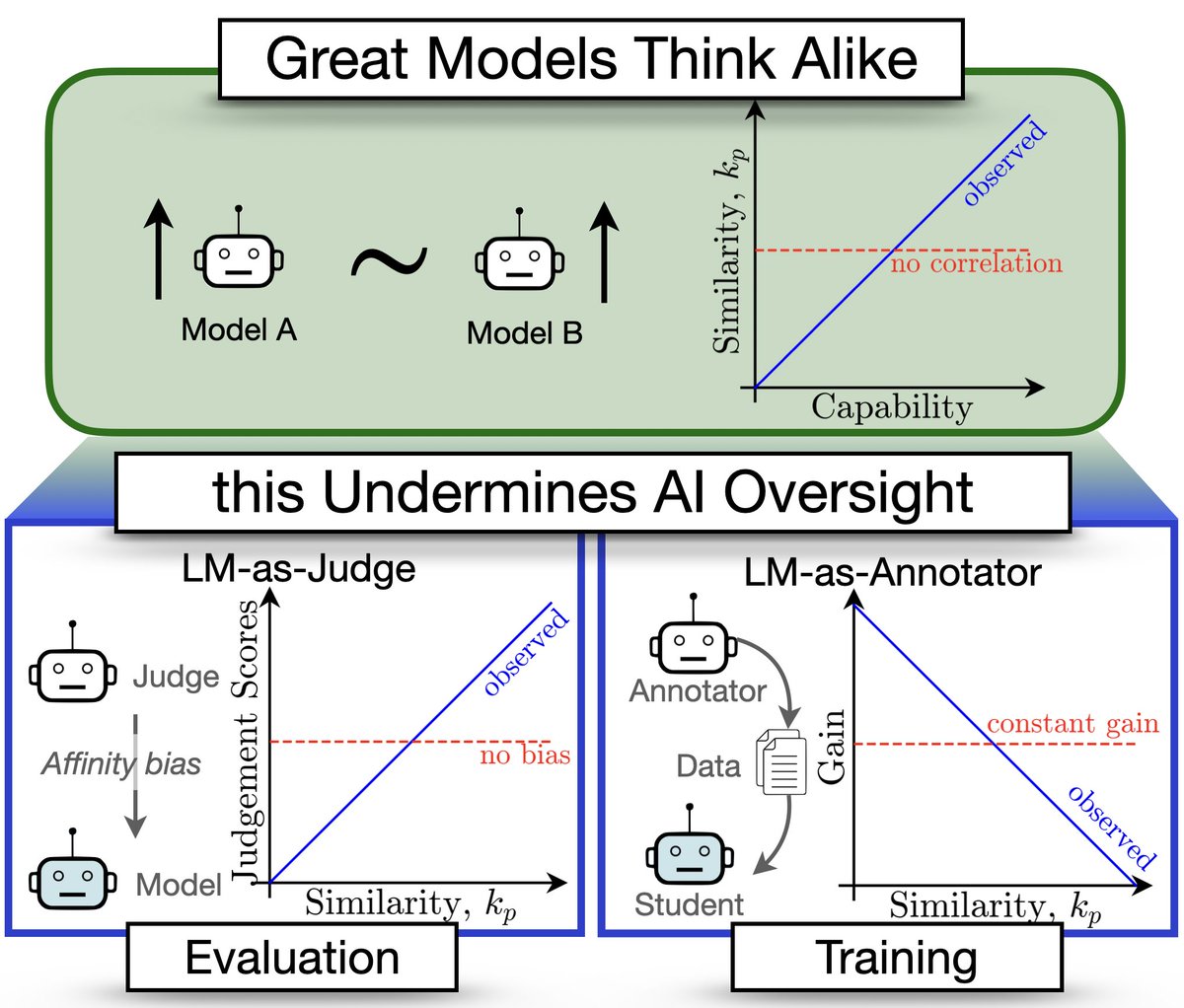

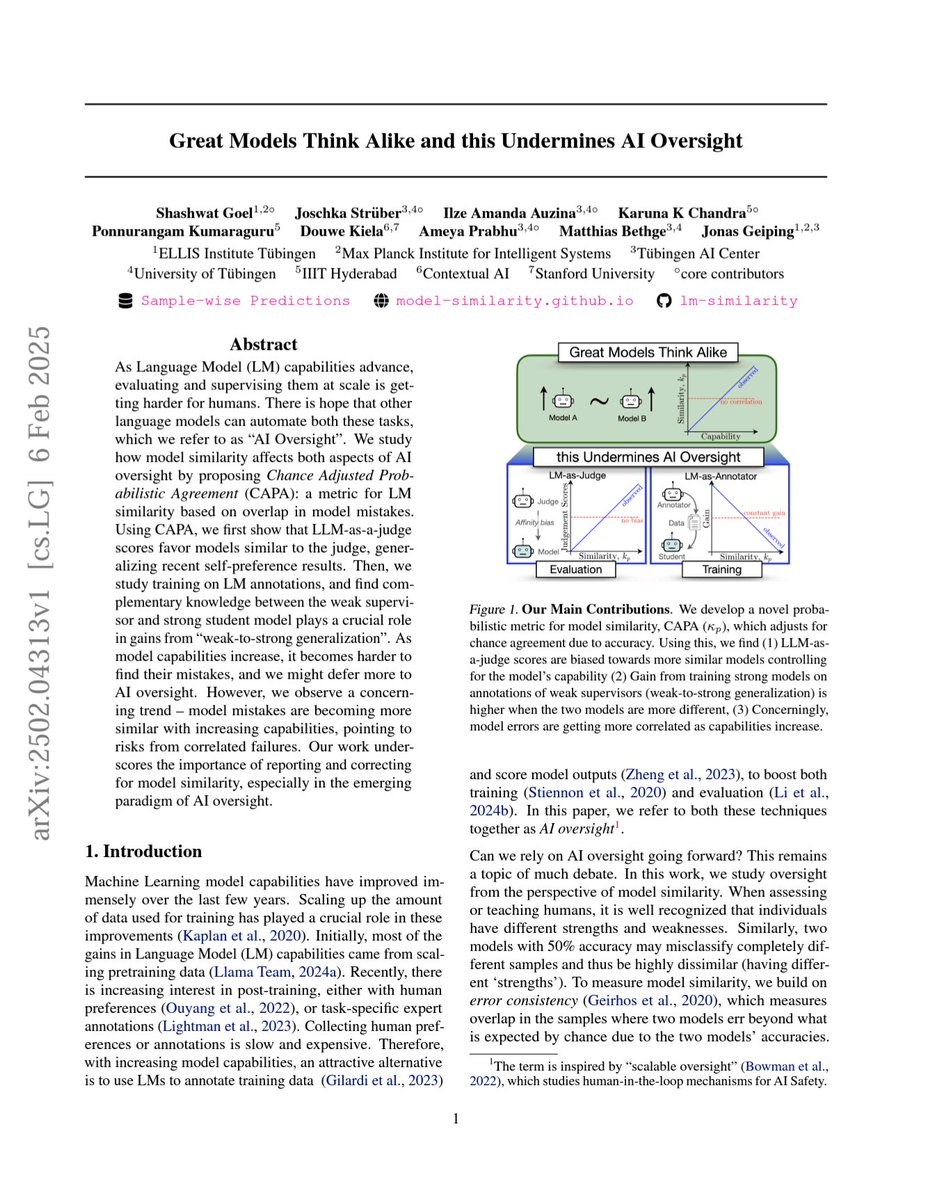

Presenting today at #ICML2025! To learn how to measure language model similarity and its effects on LLM-as-a-Judge and weak-to-strong distillation, join our poster session today, 11 am-1:30 pm, East Exhibition Hall A-B, E-2411, w/ Ameya P. @ ICML 2025, joschkastrueber, and Ilze Amanda Auzina.