Kexin Wang

@kexinwang2049

A doctoral researcher at UKP @UKPLab @TUDarmstadt.

ID: 1304177764493295617

https://github.com/kwang2049

10-09-2020 21:59:51

93 Tweets

309 Followers

121 Following

New blog post experimenting with using semantic search to find potential machine learning models for fine-tuning on the Hugging Face model hub. danielvanstrien.xyz/huggingface/hu…

Activation functions reduce the topological complexity of data. The best AF may differ across models and layers, but most Transformer models use GELU. What if the model learns optimized activation functions during training? Led by Haishuo, with Ji Ung Lee and Iryna Gurevych.

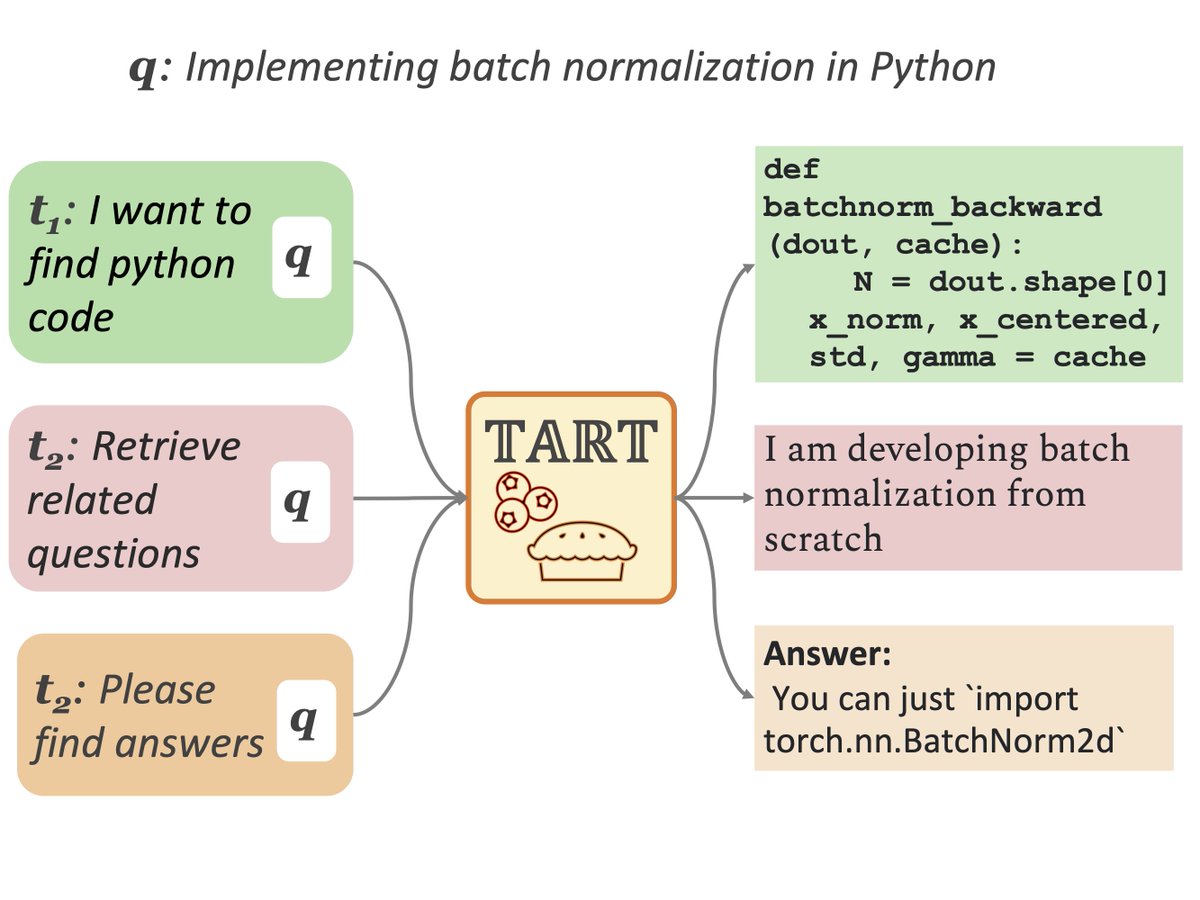

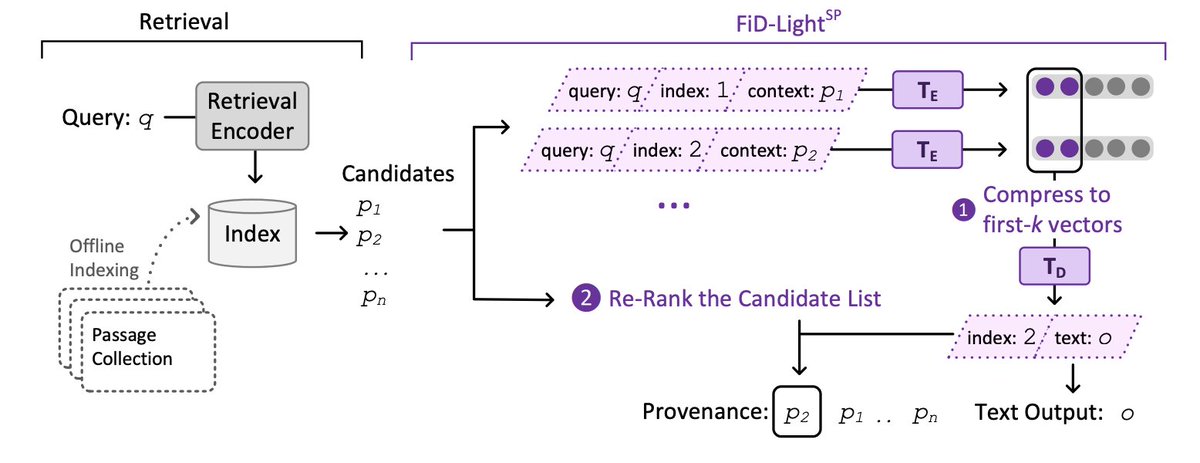

I am super proud to share the work from my Google AI internship 🎉 FiD-Light is an efficient retrieval-augmented generation model that considerably advances state-of-the-art effectiveness on six KILT tasks 🙌 w/ Jiecao Chen, Karthik Raman, Hamed Zamani 📄 arxiv.org/abs/2209.14290

Happy to collaborate with Yongxin Huang. Congrats!

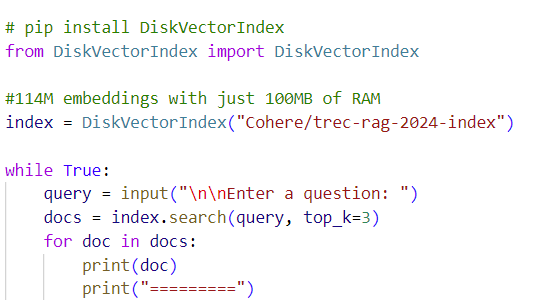

»DAPR: A Benchmark on Document-Aware Passage Retrieval« by Kexin Wang (UKP Lab), Nils Reimers (cohere) and Iryna Gurevych (12/🧵) #ACL2024NLP 📑 arxiv.org/abs/2305.13915

Meet our fellow researchers representing UKP Lab at this year's @ACLmeeting: Iryna Gurevych, Qian Ruan, Justus-Jonas Erker, Indraneil Paul, Fengyu Cai, Sheng Lu, Haishuo, Furkan Şahinuç, Kexin Wang, Haau-Sing Li (李效丞), Andreas Waldis, and a very special guest from BathNLP, @Harish! #ACL2024NLP

1/12 📢 New paper alert: “DOCE: Finding the Sweet Spot for Execution-Based Code Generation”. The work is a group effort with Patrick Fernandes, Iryna Gurevych, and Andre Martins. UKP Lab, ELLIS Unit Lisbon, DeepSPIN 📰: arxiv.org/pdf/2408.13745