Kwangjun Ahn

@kwangjuna

Senior Researcher at Microsoft Research // PhD from MIT EECS

ID: 1229766355622055936

http://kjahn.mit.edu/ 18-02-2020 13:56:04

42 Tweets

516 Followers

260 Following

If you're at #NeurIPS2023, Kwangjun Ahn will be presenting his work on SpecTr++ in the Optimal Transport workshop, where he discusses improved transport plans for speculative decoding.

Exciting new paper by Kwangjun Ahn and Ashok Cutkosky! Adam with model exponential moving average is effective for nonconvex optimization arxiv.org/pdf/2405.18199 This approach to analyzing Adam is extremely promising IMHO.

In our ICML 2024 paper, joint w/ Zhiyu Zhang, Yunbum Kook, and Yan Dai, we provide a new perspective on the Adam optimizer based on online learning. In particular, our perspective shows the importance of Adam's key components. (video: youtu.be/AU39SNkkIsA)

New reqs for low to high level researcher positions: jobs.careers.microsoft.com/global/en/job/… , jobs.careers.microsoft.com/global/en/job/…, jobs.careers.microsoft.com/global/en/job/…, jobs.careers.microsoft.com/global/en/job/…, with postdocs from Akshay and Miro Dudik x.com/MiroDudik/stat… . Please apply or pass to those who may :-)

Last year, we had offers accepted from Kwangjun Ahn, Riashat Islam, Tim Pearce, Pratyusha Sharma while Akshay and Miro Dudik hired 7(!) postdocs.

The Belief State Transformer edwardshu.com/bst-website/ is at ICLR this week. The BST objective efficiently creates compact belief states: summaries of the past sufficient for all future predictions. See the short talk: microsoft.com/en-us/research… and mgostIH for further discussion.

Kevin Frans Depen Morwani Kwangjun Ahn Nikhil Vyas Oh I found them: linear warmup and then constant

elie noahamsel Robert M. Gower 🇺🇦 and also Dion by Kwangjun Ahn, John Langford et al arxiv.org/abs/2504.05295

If you are at ICML 2025, come check out our oral presentation about the non-convex theory of Schedule Free SGD in the Optimization session tomorrow! This work was done with amazing collaborators Kwangjun Ahn and Ashok Cutkosky.

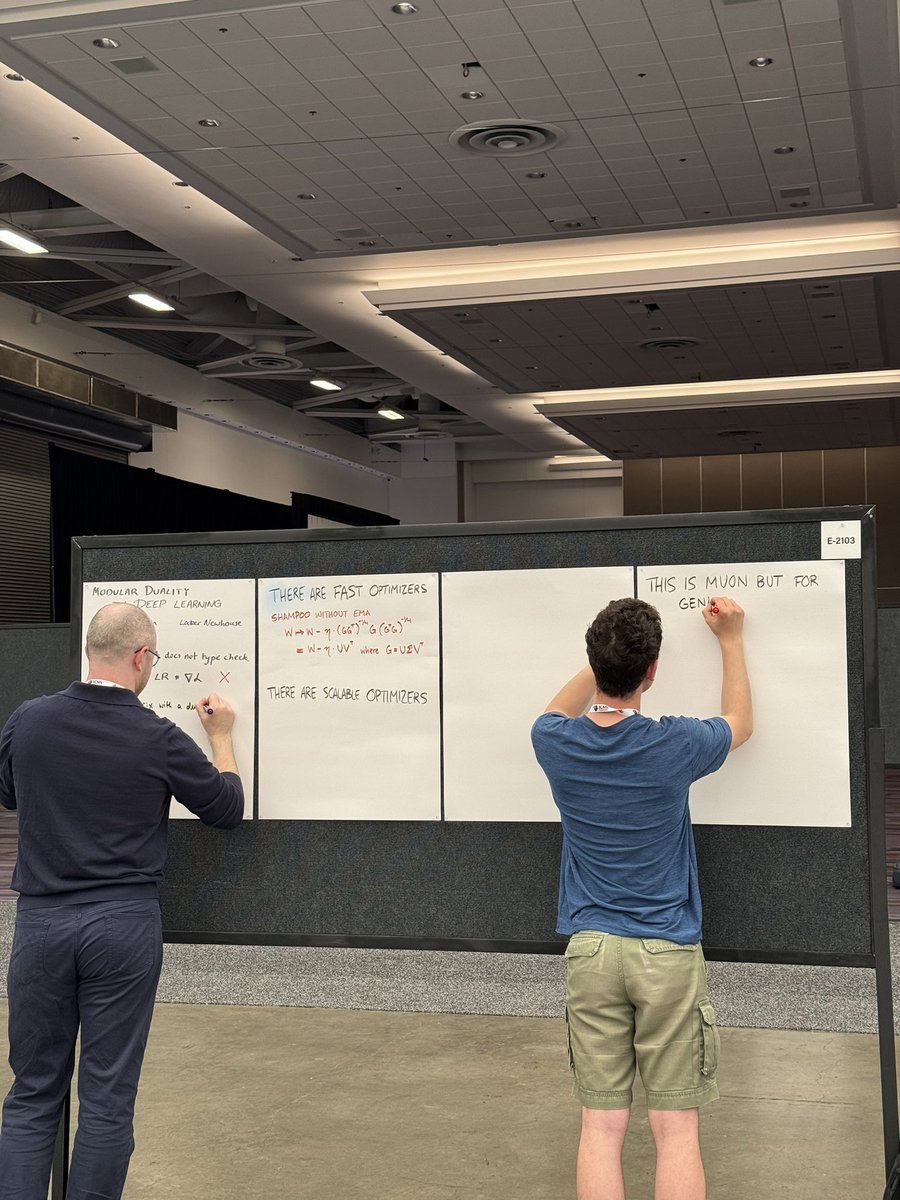

But actually this is the og way of doing it, and you should stop by E-2103 to see Jeremy Bernstein and Laker Newhouse whiteboard the whole paper.

![Laker Newhouse (@lakernewhouse) on Twitter photo [1/6] Curious about Muon, but not sure where to start? I wrote a 3-part blog series called “Understanding Muon” designed to get you up to speed, with The Matrix references, annotated source code, and thoughts on where Muon might be going.](https://pbs.twimg.com/media/GwZjR6AbEAAaYOX.png)