Leonie Weissweiler

@laweissweiler

postdoc @UT_Linguistics with @kmahowald | PhD @cislmu, prev. @Princeton @LTIatCMU @CambridgeLTL | computational linguistics, construction grammar, morphology

ID: 1212484521678929920

http://leonieweissweiler.github.io/

01-01-2020 21:23:39

224 Tweets

1.1K Followers

320 Following

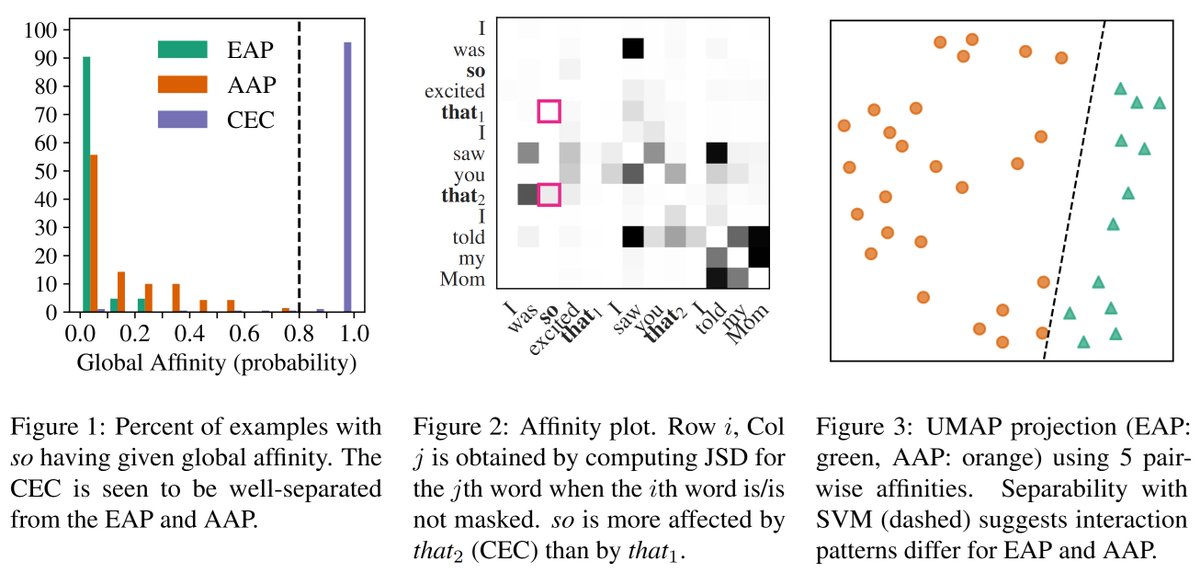

✨New paper ✨ RoBERTa knows the difference between "so happy that you're here", "so certain that I'm right" and "so happy that I cried"! Exciting result (and more) from Josh Rozner along with Cory Shain, Kyle Mahowald and myself, go check it out!

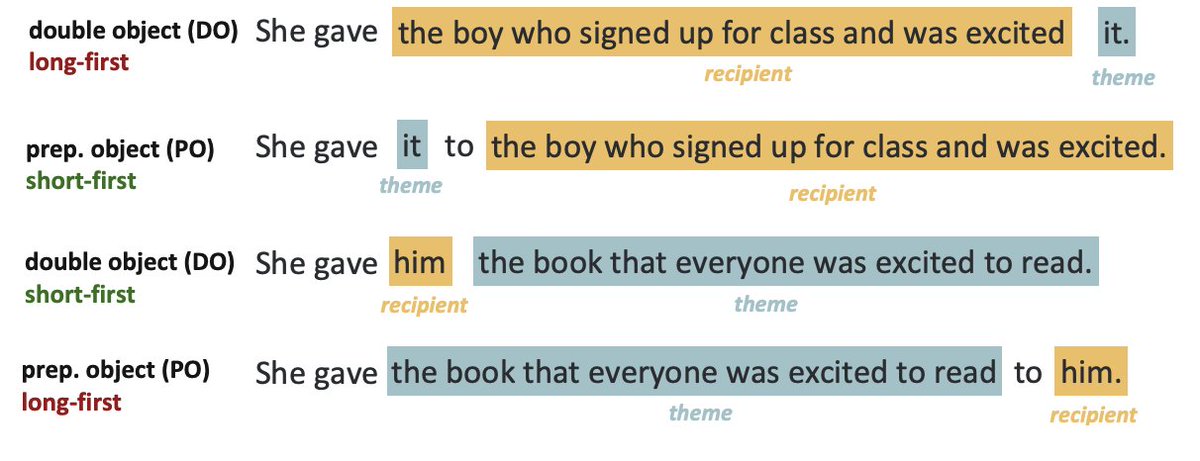

Models have preferences like giving inanimate 📦 stuff to animate 👳. Is it that they just saw a lot of such examples in pretraining, or is it generalization and deeper understanding? alphaxiv.org/pdf/2503.20850 Qing Yao, Kanishka Misra 🌊, Leonie Weissweiler, Kyle Mahowald

Our Goldfish models beat out Llama 3 70B, Aya 32B, and Gemma 27B for 14 languages 🤩 Really highlights the blessings of monolinguality! Great paper, Jaap Jumelet and Leonie Weissweiler - this will enable some extremely exciting work!

For this week’s NLP Seminar, we are thrilled to host Leonie Weissweiler to talk about "Rethinking Linguistic Generalisation in LLMs".

When: 4/10 Thurs 11am PT

Non-Stanford affiliates registration form (closed at 9am PT on the talk day): forms.gle/Ecc39jiuVMviby…

Thrilled to share that our paper is out in PNASNews today! 🎉 We show that linguistic generalization in language models can be due to underlying analogical mechanisms. Huge shoutout to my amazing co-authors Leonie Weissweiler, David Mortensen, hinrich schuetze, and Janet Pierrehumbert!

Do LLMs learn language via rules or analogies? This could be a surprise to many – models rely heavily on stored examples and draw analogies when dealing with unfamiliar words, much as humans do. Check out this new study led by Valentin Hofmann to learn how they made the discovery 💡

Excited to see our study on linguistic generalization in LLMs featured by University of Oxford News!

BabyLM's first constructions: new study on usage-based language acquisition in LMs w/ Leonie Weissweiler, Cory Shain. Simple interventions show that LMs trained on cognitively plausible data acquire diverse constructions (cxns) babyLM 🧵

New study from the lab! Joshua Rozner (w/ Leonie Weissweiler) shows that human-scale LMs still learn surprisingly sophisticated things about English syntax.