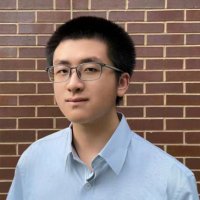

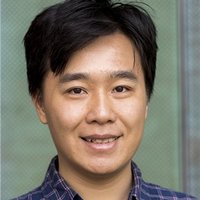

Liyuan Liu (Lucas)

@liyuanlucas

Researcher @MSFTResearch | prev. @dmguiuc

Working on deep learning heuristics (aka tricks)

He/him

ID: 3745471758

https://liyuanlucasliu.github.io

01-10-2015 07:32:59

144 Tweets

802 Followers

502 Following

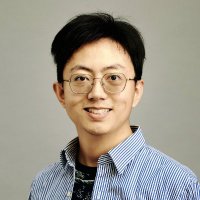

Check out our Knowledge Flow blog: we develop a new axis of test-time scaling by iteratively refining a "knowledge" list for reasoning tasks! Notably, we find that updating what is wrong is more effective than recording what is right. Great job led by Yufan Zhuang!

Daniel Han, glad you liked the post! You're spot on to suspect lower-level implementation issues; that's exactly what we found in the original blog. The disable_cascade_attn finding (Sec 4.2.4) was the symptom, but the root cause was that silent FlashAttention-2 kernel bug.

"Dense Backpropagation Improves Pretraining for sMoEs" has been accepted at NeurIPS! We show that inactive experts can be proxied with a cheap estimator, and that doing this during pretraining improves performance without requiring HPO or adding compute overhead.