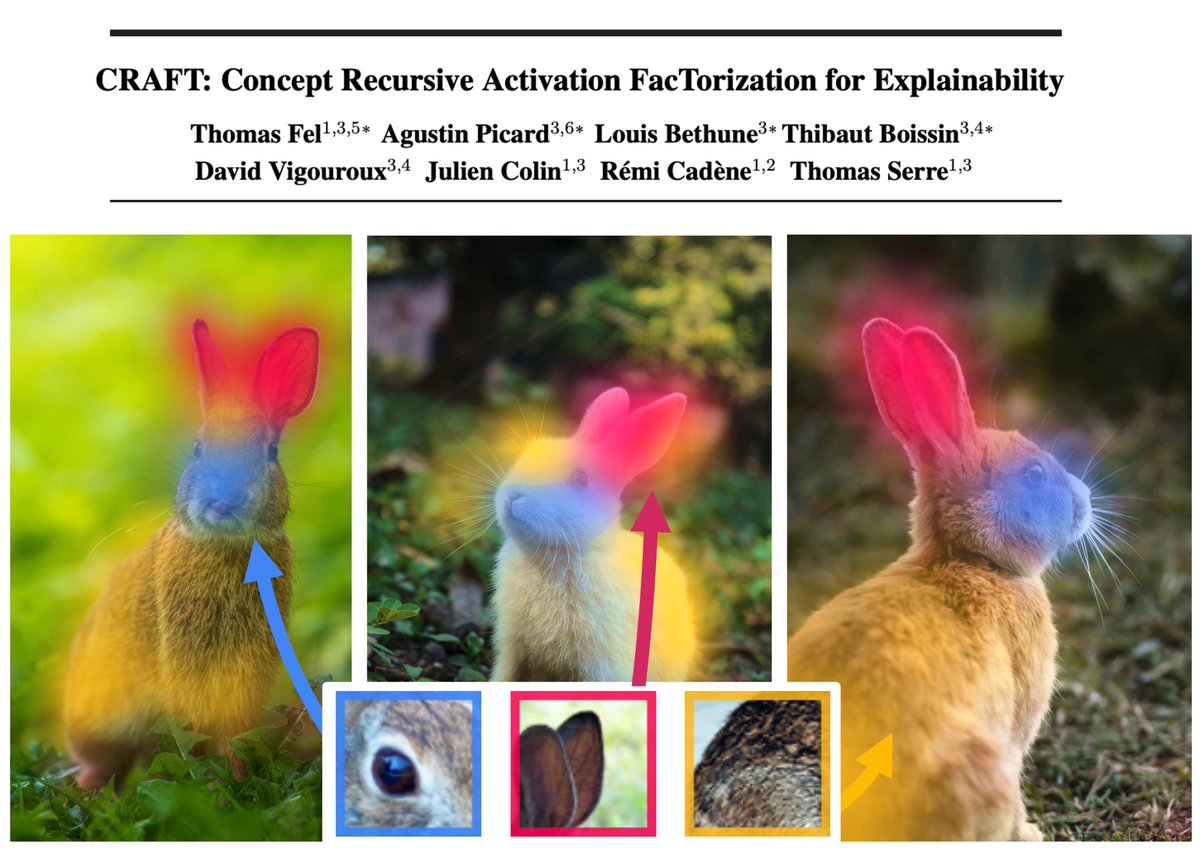

Louis Béthune

@louisbalgue

Please constrain the Lipschitz constant of your networks.

ID: 1279550919898791938

https://louis-bethune.fr 04-07-2020 23:01:37

62 Tweets

116 Followers

199 Following

We are looking for a research engineer to work on domain adaptation and transfer learning at École polytechnique, near Paris. Join us to do research and to develop open-source Python software and benchmarks. Contact me by email if interested. Please RT (free users need to help each other).

New work on kernel regression on distributions (arxiv.org/abs/2308.14335), where we prove faster rates of convergence! We apply it to forecasting the distributional variability of the 2016 US presidential election. With ANITI Toulouse, Louis Béthune, and François Bachoc.

📢 *PhD opening* at Centre Inria de l'Université Grenoble Alpes! Edouard Pauwels, Samuel Vaiter, and I are looking for a student to work with us on learning theory for bilevel optimization, in particular the implicit bias of bilevel optimization. If interested, please reach out!

Amitis Shidani, Samira Abnar, Harshay Shah, Alaa El-Nouby, Vimal Thilak🦉🐒 and Scaling Laws for Forgetting and Fine-Tuning (E-2708) with Louis Béthune, David Grangier, Eleonora Gualdoni, Marco Cuturi, and Pierre Ablin 🔗 icml.cc/virtual/2025/p…