Louis Kirsch

@louiskirschai

Driving the automation of AI Research. Research Scientist @GoogleDeepMind. PhD @SchmidhuberAI. @UCL, @HPI_DE alumnus. All opinions are my own.

ID: 416132515

http://louiskirsch.com 19-11-2011 08:25:30

312 Tweets

1.1K Followers

779 Following

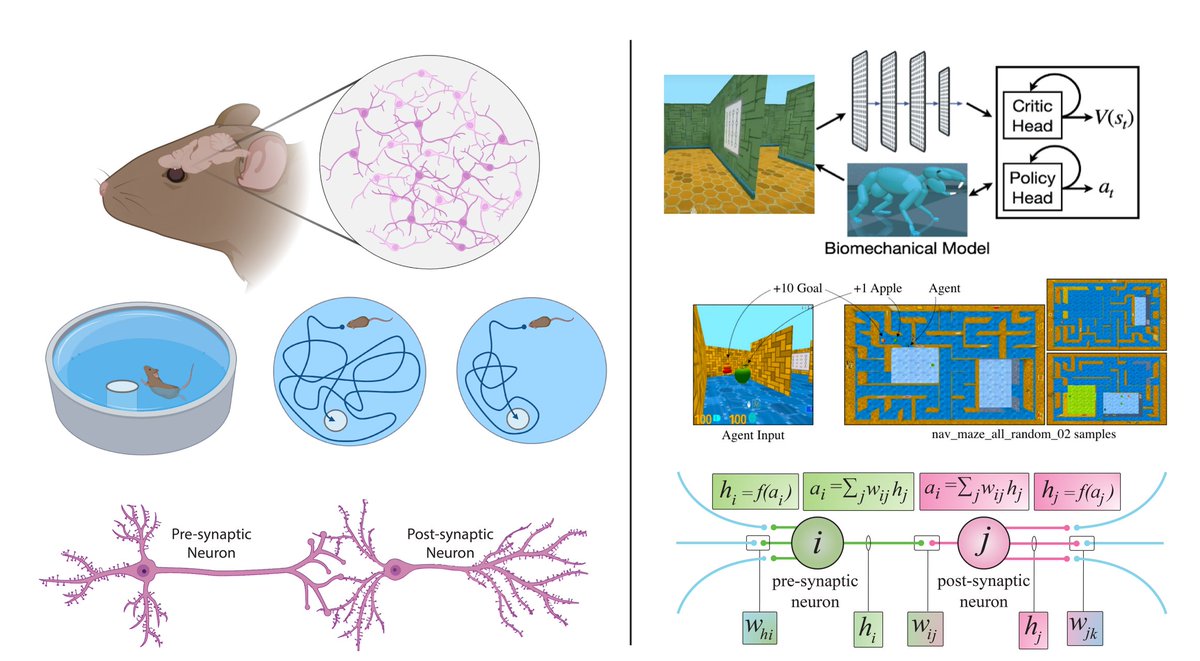

Excited to share our new survey paper on meta-RL! 📊🤖🎊 arxiv.org/abs/2301.08028 Many thanks to my co-authors for the hard work: Risto Vuorio, Evan Liu, Zheng Xiong, Luisa Zintgraf, Chelsea Finn, and Shimon Whiteson. Highlights in the thread below!

Amazing that Jürgen Schmidhuber gave this talk back in 2012, months before the AlexNet paper was published. In 2012, people treated many of the things he discussed as a joke, but the same talk today would be at the center of AI debate and controversy. Full talk:

Had a great time presenting at #ICML2024 alongside Vincent Herrmann & Louis Kirsch. But the true highlight was Jürgen Schmidhuber himself capturing our moments on camera! #AI #DeepLearning

AI Scientists will drive the next scientific revolution 🚀 Great work towards automating AI research: Chris Lu, Robert Lange, Cong Lu, Jakob Foerster, hardmaru, Jeff Clune

Revisiting Louis Kirsch et al.’s general-purpose ICL by meta-learning paper and forgot how great it is. It's rare to be taken along on the authors' journey to understand the phenomenon they document like this. More toy dataset papers should follow this structure.