MMLab@NTU

@mmlabntu

Multimedia Laboratory @NTUsg, affiliated with S-Lab.

Computer Vision, Image Processing, Computer Graphics, Deep Learning

ID: 1394997810584428547

http://www.mmlab-ntu.com

19-05-2021 12:46:26

69 Tweets

1.1K Followers

18 Following

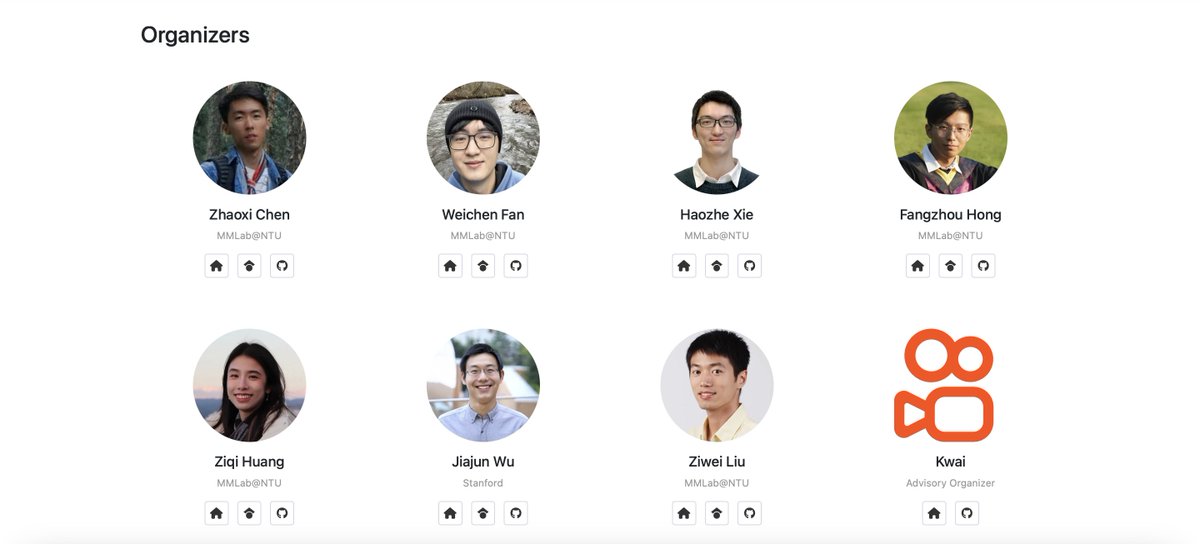

Congrats to Guangcong Wang, Jianyi Wang, and Zhaoxi Chen from MMLab@NTU!

Chase Lean: Try StableSR, a diffusion-model-based upscaler. We put extra effort into maintaining fidelity. Code and model: github.com/IceClear/Stabl….

🔥🔥 We are excited to announce #Vchitect, an open-source project for video generative models, with demos on Hugging Face.

📽️ LaVie (Text2Video model). Code: github.com/Vchitect/LaVie. Demo: huggingface.co/spaces/Vchitec…

📽️ SEINE (Image2Video model). Code: github.com/Vchitect/SEINE. Demo: huggingface.co/spaces/Vchitec…

Upcoming AI talk: 🌋LLaVA🦙: A Vision-and-Language Approach to Computer Vision in the Wild, by Chunyuan Li. More info: mailchi.mp/1242f078b2b1/a… Subscribe to us: mailchi.mp/4417dc2cde83/t…